The Outcome Economy: The Real AI Agent Revolution Everyone Is Missing

News feeds resemble dispatches from the front lines of the technological revolution. It seems not a day goes by without major publications like Forbes or Reuters declaring 2025 the "era of AI agents," promising a total transformation of everything — from our workplaces to the very structure of business. We are painted a picture of a future where autonomous digital entities manage projects, negotiate, and independently build complex workflows. This resembles a new "gold rush": everyone has rushed to "pan for gold," creating their own agents, but few yet understand where the real deposits are and where there is only barren rock.

Behind this deafening media hype lies a more complex and sober reality. When I began to analyze expert opinions, I came across a telling quote from Marina Danilevsky, a senior research scientist at IBM. She rightly notes that the excitement is largely due to rebranding: in her opinion, an "agent" is essentially a new name for the concept of "orchestration," which has existed in programming for decades. In other words, while the media trumpets a revolution, engineers see it more as an evolution — powerful, but still predictable.

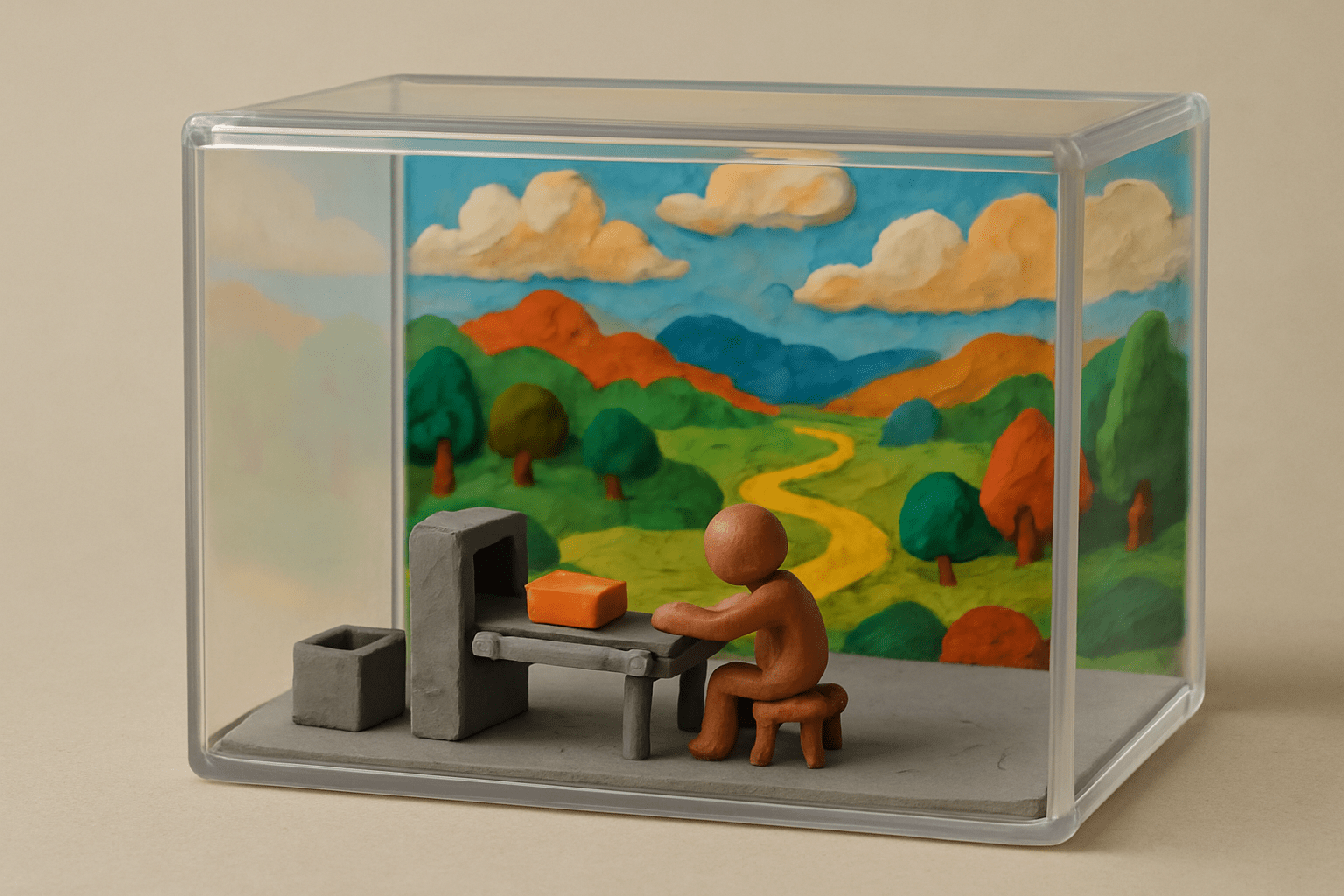

It is in the epicenter of this storm, between market pressure and sober engineering skepticism, that today's technology leaders find themselves. Their dilemma can be illustrated by a short case study, which I compiled from several similar stories.

Case: Anna, CTO of fintech startup FinCore, closes another tab with news about the "AI agent revolution." Her investors are asking about an "agent strategy," and her engineering team is proposing pilots. She feels pressure and FOMO, but her inner voice asks one question: "Is this a real technological shift, or are we just going to build the most expensive calculator in history for automating reports?"

Anna's dilemma is the dilemma of every technology leader today. How do you distinguish a fundamental shift from a marketing gimmick? How do you invest resources to build a competitive advantage rather than an expensive toy? Solving this dilemma requires a map. This article is such a map, divided into three horizons. Which one are you on?

Setting the Pieces on the Board

Before we unfold our map and embark on a journey through the three horizons, we need to agree on terms. One of the main problems with the current hype is the catastrophic confusion of concepts. In the media, and even in technical discussions, the words "AI agent," "AI assistant," and "bot" are often used interchangeably, which blurs the essence of the discussion and hinders informed decision-making. Yet, the difference between them is not just semantic — it is fundamental.

To vividly demonstrate this, let's use a simple example — booking a table at a restaurant.

- Bot is the most basic level of automation. You ask it: "Find the phone number of restaurant N," and it gives you the number from its database. It acts strictly according to the script embedded in it. This is a worker who performs one specific, predefined operation.

- Assistant (like Siri or Google Assistant) is already smarter. You can say: "Call restaurant N," and it will dial the number. It understands the intention and performs the action, but its role ends there. Further steps (negotiating with the restaurant staff, choosing a time, confirming the reservation) are up to you. This is a skilled craftsman who waits for you to assign a specific task.

- Agent is a fundamentally different level. You set it a goal: "Book a table for me and Julia at a good Italian restaurant in the city center for Friday evening." And it begins to act autonomously. It will analyze your preferences, find several suitable restaurants, check for available slots, choose the optimal one, book the table, add the event to your calendar, send Julia an invitation, and possibly even recommend ordering a taxi in advance. This is a foreman who organizes the entire process himself to achieve the final goal, coordinating various tools and services.

This difference in autonomy and proactivity is key. For a system to be called an agent, it must possess a set of fundamental characteristics:

- Autonomy: The ability to act and make decisions independently to achieve a set goal, without constant micromanagement from a human.

- Planning: The ability to break down a complex, multi-stage task into a sequence of concrete steps and arrange them into a logical plan.

- Tool Use: The ability to interact with the external world through APIs, databases, web services, MCP (Model Context Protocol) servers, and other applications. This is what transforms a language model from a "talking head" into a real executor.

- Memory: A critically important ability to retain context, remember the results of past actions, and extract information from experience to make more informed decisions. Architectures like RAG (Retrieval-Augmented Generation) are often used here, so that the agent can rely on verified external knowledge.

- Self-Correction: The ability to analyze its errors, receive feedback (from the system or a human), and adjust its behavior to increase efficiency.
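
As a minimal sketch of how these five properties fit together, the toy loop below uses a hard-coded planner and two stub tools in place of an LLM and real APIs. Every name in it (`Agent`, `search_restaurants`, `book_table`, the plan format) is hypothetical and chosen only for illustration; a production agent would delegate planning to a model.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stub tools; a real agent would call external APIs here.
def search_restaurants(cuisine: str) -> list[str]:
    catalog = {"italian": ["Trattoria Uno", "Casa Due"]}
    return catalog.get(cuisine, [])

def book_table(restaurant: str) -> str:
    # Simulate one fully booked venue to exercise self-correction.
    if restaurant == "Trattoria Uno":
        raise RuntimeError("no free slots")
    return f"booked:{restaurant}"

@dataclass
class Agent:
    tools: dict[str, Callable]
    memory: list[str] = field(default_factory=list)  # Memory: log of past steps

    def plan(self, goal: str) -> list[str]:
        # Planning: a fixed two-step plan stands in for model-generated steps.
        return ["search", "book"]

    def run(self, goal: str, cuisine: str) -> str:
        # Autonomy: the loop runs to completion without human input.
        candidates: list[str] = []
        for step in self.plan(goal):
            if step == "search":
                candidates = self.tools["search"](cuisine)  # Tool use
                self.memory.append(f"found {candidates}")
            elif step == "book":
                for name in candidates:
                    try:
                        result = self.tools["book"](name)
                        self.memory.append(result)
                        return result
                    except RuntimeError as err:
                        # Self-correction: record the failure, try the next option.
                        self.memory.append(f"failed {name}: {err}")
        return "no booking possible"

agent = Agent(tools={"search": search_restaurants, "book": book_table})
outcome = agent.run("Book an Italian restaurant for Friday", "italian")
print(outcome)
print(agent.memory)
```

The first booking attempt fails, the failure is stored in memory, and the agent moves on to the next candidate instead of stopping. That small retry is the difference between an assistant that reports an error and an agent that pursues the goal.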

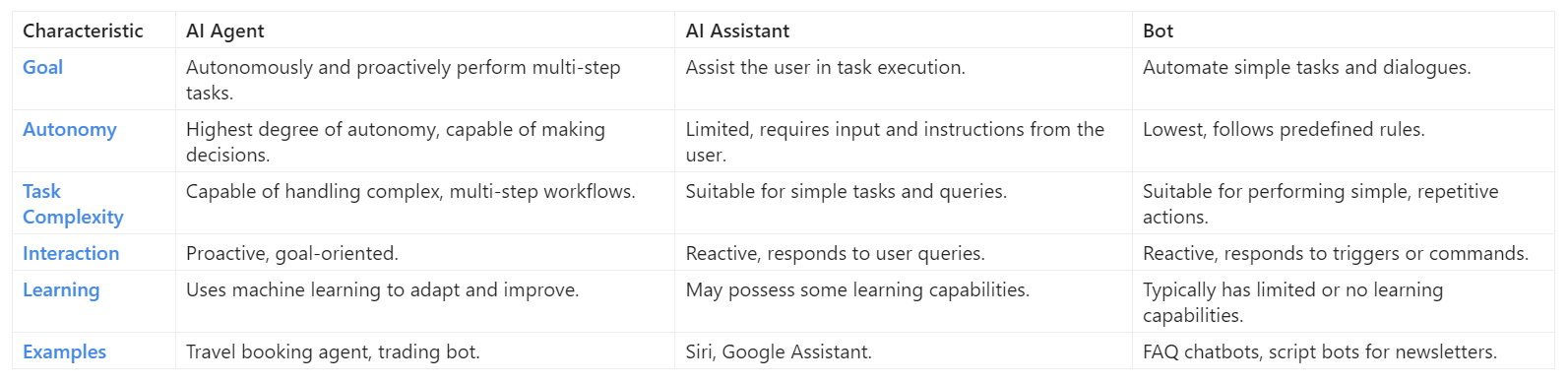

To make these distinctions concrete, I have summarized them in a simple table.

The "Three Horizons" Framework

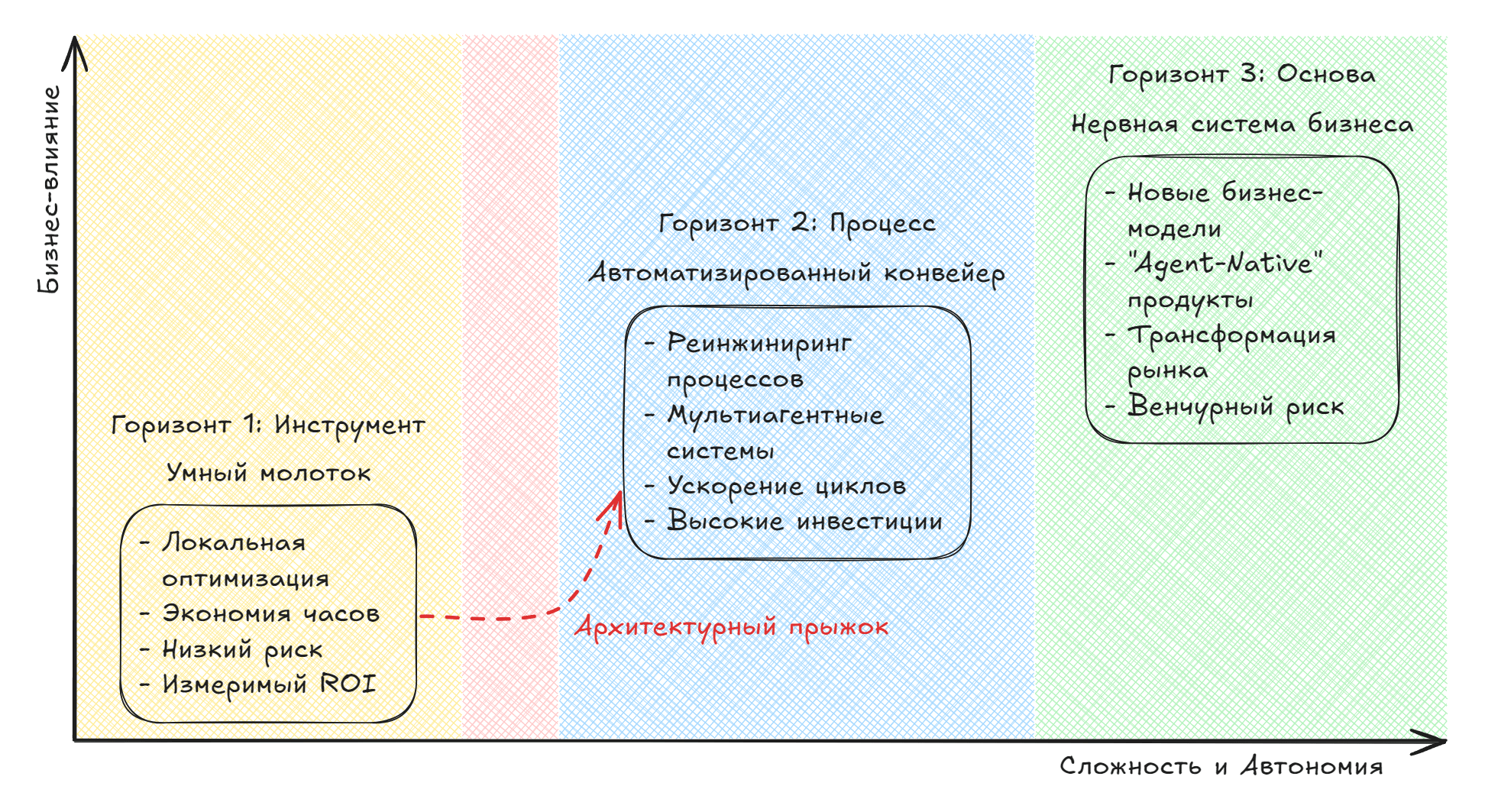

So, we have agreed on the terms. Now the most interesting part begins. To break free from the grip of hype and start making strategic decisions, we need the right analytical tool. Most discussions today revolve around technologies: which framework to use, which model to choose, how to write a prompt. These are tactical details. I propose changing the lens and classifying agent implementation projects not by technology, but by the level of business ambition.

For this, I have developed the "Three Horizons" framework. Imagine a coordinate system: on the horizontal axis — "System Complexity and Autonomy," on the vertical — "Business Impact." In this coordinate system, all AI agent projects can be divided into three clear zones, three horizons.

Horizon 1: Agent-Tool ("Smart Hammer")

This is the starting point, the most understandable and accessible level. Here, agents act as "smart hammers": tools we give employees to optimize their own work locally. The goal is not to restructure the business, but to increase individual productivity by automating routine but time-consuming tasks.

- Goal: Local optimization and resource savings.

- Examples: This is a world of clear and easily measurable victories. An agent that automatically processes incoming invoices and enters them into the system. A script that helps in data migration between legacy systems. Developer tools like early versions of Cursor or v0, generating boilerplate code. Or an agent that writes drafts of personalized email newsletters for the marketing department.

- ROI: Calculated simply — in human-hours saved. We spent X on development, and saved Y hours of work for lawyers, accountants, or developers.
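
To make the arithmetic concrete, here is a small sketch of a Horizon 1 ROI estimate. The hours saved, hourly rate, and cost figures are illustrative assumptions, not data from any real project, and `first_year_roi` is a hypothetical helper.

```python
def first_year_roi(hours_saved: float, hourly_rate: float,
                   build_cost: float, monthly_run_cost: float) -> float:
    """Return first-year ROI as a fraction: (benefit - cost) / cost."""
    benefit = hours_saved * hourly_rate
    cost = build_cost + 12 * monthly_run_cost
    return (benefit - cost) / cost

# Illustrative inputs only: 1,000 hours saved at $60/hour,
# $15,000 to build and $1,000 per month to run.
roi = first_year_roi(hours_saved=1_000, hourly_rate=60,
                     build_cost=15_000, monthly_run_cost=1_000)
print(f"{roi:.0%}")
```

With these inputs the benefit is $60,000 against $27,000 of cost, or roughly 122% ROI in the first year. The calculation is this simple precisely because Horizon 1 leaves the surrounding process unchanged.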

Horizon 2: Agent-Process ("Automated Conveyor Belt")

If the first horizon is about providing workers with improved tools, the second is about completely overhauling the assembly shop. Here, we move from single agent-tools to creating multi-agent systems (MAS) that can manage entire end-to-end business processes. This is no longer a "smart hammer," but an "automated conveyor belt."

- Goal: Re-engineering and accelerating the entire business process, from start to finish.

- Examples: Imagine a system for onboarding a new client. One agent (qualifier) meets the lead, another (analyst) enriches it with data from open sources, a third (router) analyzes needs and automatically transfers a fully prepared client card to the most suitable sales manager. Or a complex system for supply chain management, where different agents coordinate inventory, logistics, and procurement in real time.

- ROI: More difficult to calculate, but the impact is significantly higher. Instead of hours saved, we measure shorter time-to-market, higher fault tolerance, and greater throughput across the whole process.
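
The client onboarding example can be sketched as a pipeline of three small functions, each standing in for one agent. The `Lead` fields, the size thresholds, and the manager names are hypothetical; in a real system each stage would wrap a model call and external data sources.

```python
from dataclasses import dataclass, field

@dataclass
class Lead:
    company: str
    employees: int
    notes: dict[str, str] = field(default_factory=dict)
    qualified: bool = False
    owner: str = ""

def qualifier(lead: Lead) -> Lead:
    # Stage 1: accept only leads above a made-up size threshold.
    lead.qualified = lead.employees >= 50
    return lead

def analyst(lead: Lead) -> Lead:
    # Stage 2: enrich the lead; a static rule replaces open-source research.
    segment = "enterprise" if lead.employees >= 1000 else "mid-market"
    lead.notes["segment"] = segment
    return lead

def router(lead: Lead) -> Lead:
    # Stage 3: hand the prepared card to the matching sales manager.
    managers = {"enterprise": "manager_a", "mid-market": "manager_b"}
    lead.owner = managers[lead.notes["segment"]]
    return lead

def onboard(lead: Lead) -> Lead:
    lead = qualifier(lead)
    if not lead.qualified:
        return lead  # stop early; nothing to enrich or route
    return router(analyst(lead))

card = onboard(Lead(company="Acme", employees=1200))
print(card.owner, card.notes)
```

Each stage consumes the previous stage's output, so the unit of design is the whole flow rather than any single step. That is also why a fault in one stage affects everything downstream.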

Horizon 3: Agent-Foundation ("Business's Nervous System")

This is the highest, almost visionary level. Here, agents are not just part of the process, they become its foundation, its nervous system. On this horizon, new, "Agent-Native" business models emerge that would be fundamentally impossible without the deep autonomy and coordination of AI systems.

- Goal: Creation of revolutionary business models and capture of new markets.

- Examples: This is currently the territory of bold experiments. A fully autonomous digital agency, where a multi-agent system itself finds clients, develops strategies, creates content, and manages advertising campaigns. Or a smart city management system, where agents coordinate traffic, energy consumption, and the work of utility services. In medicine, this could be a system that continuously monitors thousands of patients and autonomously adjusts treatment plans based on incoming data.

- ROI: Measured not in money or hours, but in market share, the creation of entirely new value, and tectonic shifts in entire industries.

Now that you see this map, ask yourself a simple question: which horizon is your company on?

- Are you automating individual tasks to save time? (Horizon 1)

- Are you building complex, multi-stage systems for end-to-end processes? (Horizon 2)

- Are you creating business models that are entirely dependent on AI autonomy? (Horizon 3)

The answer to this question is critically important, and here's why.

Case: Anna's team successfully launches its first project: an agent for automatic generation of compliance reports. This is a clear victory on Horizon 1. ROI is calculated in saved lawyer hours, everyone is happy. But Anna, looking at the new diagram, feels a slight unease. They just accelerated an old process. They are still using a "smart hammer," while competitors may already be building a "conveyor belt."

"The First Horizon Trap"

The success of Anna's team on the first horizon is not an exception, but the rule. That is why this horizon is so dangerous. It sucks you in. Most companies, having started their journey into the world of AI agents, risk getting stuck at this level forever, falling into what I call the "First Horizon Trap."

Why is it a trap? Because it is comfortable and understandable. Local optimization is that "low-hanging fruit" that brings quick, measurable victories and enthusiastic reports for investors. ROI is easy to calculate in saved hours, the project does not require fundamental restructuring of business processes, and risks seem manageable. The company feels innovative without essentially changing anything. But it is this comfort that hinders strategic development. By optimizing individual links of the old chain, you will never create a new conveyor belt.

The transition from the first horizon to the second is not a smooth ascent, but a leap across a chasm. I call this the "Architectural Leap." To make it, you need to get past what I call the "five horsemen": five fundamental barriers, each capable of burying even the most ambitious project.

1. Reliability ("Hallucinations"). This is the first and most obvious horseman. LLMs, which are the basis of agents, tend to invent facts. But if for a chatbot this is just an annoying error, for an agent performing a chain of a dozen actions, it is a catastrophe. An error made at the first step accumulates and amplifies at subsequent ones, like a tiny deviation in spacecraft navigation that ultimately leads to missing the target by millions of kilometers. Moreover, as recent studies show, hallucinations open up new attack vectors. For example, an agent can "hallucinate" a non-existent but logically named software package. Attackers can pre-register such a package with malicious code (a "slopsquatting" attack), and a developer, trusting the agent, will mistakenly install it, opening a security breach.

2. Cost. The second horseman hits the wallet. Developing and operating agents is expensive. According to market estimates, creating even a simple agent for routine tasks costs roughly $10,000 – $20,000, and complex enterprise systems for the second horizon can cost from $100,000 to $250,000 and higher. And these are only initial investments. Monthly expenses for support, API calls, and retraining can amount to another $1,000 – $10,000. Some platforms try to offer alternative pricing models (for example, $0.99 for one successfully resolved support ticket), but the fact remains: transitioning to the second horizon requires serious and, more importantly, ongoing investment.

3. Legal Liability. This horseman arrives at companies together with the lawyers. The idea of complete autonomy is a myth when it comes to responsibility. The 2024 Air Canada case is indicative: the airline's chatbot gave a customer incorrect information about claiming a bereavement fare refund after the flight. British Columbia's Civil Resolution Tribunal ruled that the airline is responsible for what its chatbot says, just as it is for the rest of its website. The signal is clear: the company answers for the actions of its agent. Therefore, any second-horizon project requires robust mechanisms of control, oversight, and the possibility of instant "manual" intervention.

4. Debugging Complexity. The fourth horseman attacks engineers. The "black box" nature of neural networks turns debugging an agent into a nightmare. In traditional code you can trace the logic of a decision; with an agent built on a model with billions of parameters, it is almost impossible to understand why it turned "left" instead of "right" in a specific situation. When a multi-agent system fails, finding the root cause turns into an archaeological dig without a map. In response, the industry has begun building specialized observability platforms for LLM applications. For example, LangSmith, from the creators of the LangChain framework, allows tracing, visualizing, and debugging the entire chain of calls within an agent. It works like a "flight recorder," logging every step, tool call, and model response, giving engineers that very map to find the source of the error.

5. Human Factor. The fifth horseman is the most insidious. It sits not in the code, but in the user's chair. Even a technically perfect agent is useless if a person cannot clearly and unambiguously formulate a task for it. As is often noted, people are "poor communicators": we give incomplete, ambiguous, or contradictory instructions. If even simple chatbots constantly misunderstand us, what can we expect from a complex system to which we delegate an entire business process?
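
The compounding effect described under the first horseman can be estimated with simple arithmetic. Assuming, purely for illustration, that every step succeeds independently with the same probability, the chance that the whole chain is correct is that probability raised to the number of steps. Real agents violate the independence assumption, so treat this as rough intuition rather than a measurement; `chain_success` is a hypothetical helper.

```python
def chain_success(step_accuracy: float, steps: int) -> float:
    """Probability that all steps succeed, assuming independent steps."""
    return step_accuracy ** steps

for steps in (1, 5, 12):
    print(steps, round(chain_success(0.95, steps), 3))
```

Under this assumption, a step that is right 95% of the time yields a twelve-step chain that is right only about 54% of the time. An error rate that is tolerable in a chatbot therefore becomes a blocker for an agent.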

These five horsemen clearly manifested themselves in the next chapter of Anna's story.

Case: Inspired by success, Anna launches a pilot for Horizon 2: an agent for preliminary underwriting of loan applications. The project fails. The model begins to "hallucinate" on atypical data (Horseman #1). Lawyers raise alarm due to potential liability (Horseman #3). Engineers cannot understand why the agent made a particular decision (Horseman #4). Anna realizes they have hit a wall. They are in the "first horizon trap."
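
The "flight recorder" tracing described under the fourth horseman is what Anna's engineers were missing. As a rough illustration, and explicitly not LangSmith's API, the sketch below uses a hypothetical `traced` decorator that records each step's inputs, output, errors, and duration so that a run can be replayed step by step. The underwriting functions and the approval rule are invented for the example.

```python
import functools
import time

TRACE: list[dict] = []  # in-memory log; a real system would persist this

def traced(fn):
    """Record arguments, result or error, and duration of each call."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        entry = {"step": fn.__name__, "args": args, "kwargs": kwargs}
        start = time.perf_counter()
        try:
            entry["result"] = fn(*args, **kwargs)
            return entry["result"]
        except Exception as err:
            entry["error"] = repr(err)
            raise
        finally:
            entry["seconds"] = time.perf_counter() - start
            TRACE.append(entry)
    return wrapper

@traced
def extract_income(application: dict) -> float:
    return float(application["income"])

@traced
def score(income: float, amount: float) -> str:
    # Made-up rule: approve when the loan is under half of annual income.
    return "approve" if amount < 0.5 * income else "refer_to_human"

app = {"income": "40000", "amount": 30000}
decision = score(extract_income(app), app["amount"])
for entry in TRACE:
    print(entry["step"], entry.get("result"), entry.get("error"))
```

The `refer_to_human` branch also hints at an answer to the third horseman: borderline decisions are escalated to a person rather than made autonomously, and the trace shows exactly which inputs led there.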

The New Economy of Results

The failure of Anna's pilot project is not just a setback, but a fundamental lesson. It forced her to confront the main question: why even risk moving to the second horizon if the first is safe, understandable, and brings measurable benefits?

The answer lies in the fact that AI agents, for the first time in history, allow businesses to make a tectonic shift: to move from an "economy of tools" to an "economy of results."

- Economy of Tools (World of Horizon 1): Here, the vendor sells you a "smart hammer" — software with a set of impressive features. They guarantee that the hammer is well-made, but bear no responsibility for whether you can use it to hammer a nail straight without damaging the wall. All responsibility for the final result lies with you, the client. Value in this world is measured by the length of the feature list.

- Economy of Results (World of Horizon 2 and 3): Here, the vendor sells not a hammer, but a "finished hole in the wall." They take full responsibility for the final result. Their business model is built on a guarantee: "We will reduce your client onboarding time by 30%" or "We guarantee a 20% reduction in logistics costs." Value is measured not by features, but by specific, achieved business indicators (KPIs).
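
One way to see the difference between the two models is to compare what a client pays under each. In the sketch below, the flat license fee, the ticket volume, and the resolution rates are hypothetical; the per-result price reuses the $0.99 per resolved ticket figure mentioned earlier.

```python
def tool_pricing(license_fee: float) -> float:
    # Economy of tools: the client pays the same fee whatever the outcome.
    return license_fee

def outcome_pricing(tickets: int, resolution_rate: float,
                    price_per_result: float) -> float:
    # Economy of results: the client pays only for resolved tickets.
    resolved = round(tickets * resolution_rate)
    return resolved * price_per_result

for rate in (0.2, 0.6):
    cost = outcome_pricing(tickets=5_000, resolution_rate=rate,
                           price_per_result=0.99)
    print(f"resolution rate {rate:.0%}: outcome ${cost:,.2f} "
          f"vs license ${tool_pricing(2_000):,.2f}")
```

Under outcome pricing the vendor's revenue rises and falls with the agent's actual performance, so the risk of a weak result moves from the client to the vendor.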

It is AI agents, thanks to their autonomy and ability to act, that make this model possible. This is the first "drill" that can come to your home itself, choose the right drill bit, consider the wall material, and guarantee a perfectly straight hole without your intervention.

This transition is the quiet revolution that everyone is missing. Its essence is not just in automating routine tasks, but in the almost imperceptible drift of entire industries from one economic paradigm to another. Companies that are the first to master this new model will gain a real, long-term competitive advantage. Early examples already exist: in healthcare, multi-agent systems do not just "analyze data," but deliver a result in the form of continuous patient monitoring; in finance, they do not just "provide a trading interface," but deliver a result in the form of executed algorithmic strategies.

The competitive advantage of the future is not in giving the client the best tool, but in taking over the entire problem for them. Is your business ready to sell not software, but results?

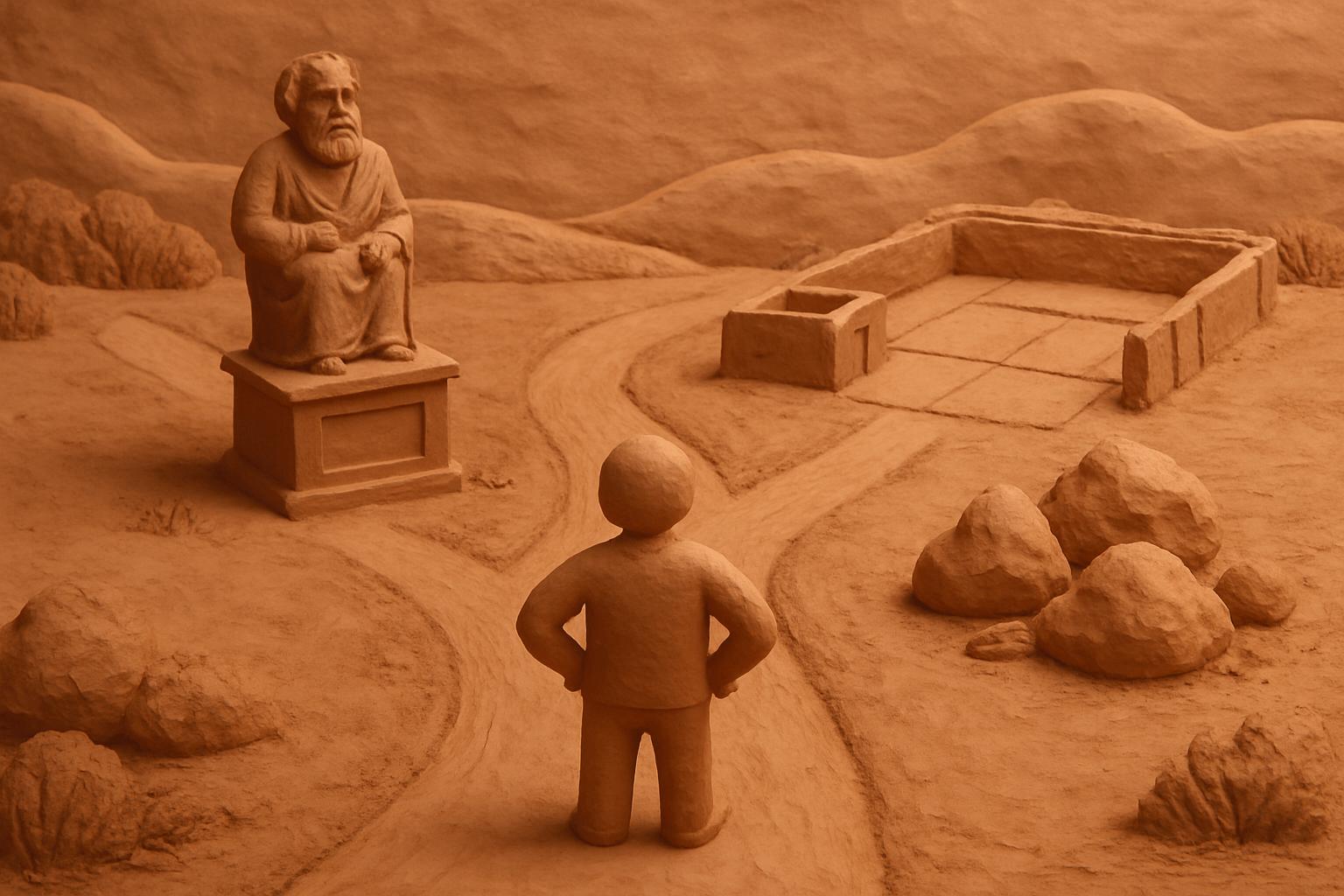

Your Choice — Master or Architect?

Anna's dilemma, with which we began this article, is no longer a vague question of "should we implement AI." Now that we have the "three horizons" map and an understanding of the new economy, the main question is different: who do you want to become?

The strategy for working with AI agents is not a technical choice, but a philosophical one. A company must decide who it wants to be: a highly effective "Master," perfecting existing processes, or a bold "Architect," building fundamentally new business models. Both paths are viable, but they require completely different approaches, investments, and metrics of success.

The Master's Path (Strategy for Horizon 1)

- Goal: Maximum efficiency within the current paradigm. You don't change the game, you become the best at it.

- Tactics: Create a "Routine Map" — find all repetitive, low-risk tasks with clear ROI. Focus on "human-hours saved" metrics. Build a team of "AI implementers" who will quickly pick "low-hanging fruit" using ready-made no-code/low-code solutions.

- Warning: This path is safe and brings quick returns. But it carries the strategic risk of falling into the "first horizon trap" and, in the long run, losing to architect competitors who will sell your clients not tools, but guaranteed results.

The Architect's Path (Strategy for Transition to Horizon 2)

- Goal: Building long-term competitive advantage through the creation of a new, "results-oriented" business model.

- Tactics: Invest in the foundation — create unified, "agent-ready" APIs and data repositories. Think not in tasks, but in business results that can be packaged as a product and sold. Build a team of "business architects," not "prompt engineers." Start measuring success in new metrics, for example, "percentage of revenue from 'results-oriented' contracts."

- Warning: This path is high-risk, expensive, and its ROI is not obvious in the short term. It requires patience and the strategic will of management. But it is the only path to creating breakthrough products and gaining leadership in the new economy.

A Master perfects their "smart hammer." An Architect designs the entire factory where these hammers are no longer needed.

Case: After the pilot's failure, Anna goes to her CEO. She doesn't ask for money for "another AI project." She lays two plans on the table. Plan A ("Master"): "We can save 5,000 hours of work in a year by automating 10 more routine processes." Plan B ("Architect"): "With eighteen months of infrastructure investment, we can launch a new product: 'Guaranteed Scoring in 60 Seconds,' and sell not access to the system, but the result itself." She doesn't offer an easy answer. She asks the CEO to make a conscious strategic choice.

The future belongs to AI agents. But the main question is not which horizon you will end up on, but who you choose to become: the best Master with the "smartest hammer" or the Architect of a new economy of results?

Stay curious.