
Smart Search for Your Notes: How to Build a Conversational Second Brain with RAG

I think many of you are familiar with this feeling. You are a digital Plyushkin (Gogol's proverbial hoarder from Dead Souls), a modern-day collector of ideas. Your Notion, Obsidian, or any other note-taking tool has transformed from a neat card catalog into a veritable digital warehouse. You meticulously save everything there: article clippings, podcast insights, code snippets, fleeting thoughts about future projects. You are convinced that you are amassing a treasure trove, a personal Google that will one day answer any question you might have. But time passes, and you realize with horror that you have built not a treasure trove, but a graveyard. A graveyard of dead ideas.

My personal knowledge base has become precisely such a place. Hundreds, if not thousands, of notes connected only by fragile threads of tags and backlinks. And then comes the moment of truth: I distinctly remember reading a brilliant article about applying game development optimization techniques to rendering large datasets on the web about six months ago. The idea was ingenious, and I definitely saved it. I open the search and... I'm stumped. What should I search for? "Optimization"? "Game dev"? "Rendering"? Each of these queries returns dozens of irrelevant notes where these words are just mentioned in passing. Keyword search proved useless because the value of that note wasn't in the words, but in the conceptual link between seemingly distant fields.

At that moment, I fully realized the scale of the problem. My knowledge base had turned into that giant warehouse from the final scene of Raiders of the Lost Ark. Somewhere in there, among endless rows of identical boxes, between an article clipping on quantum computing and an architectural sketch for a pet project, lies the "Ark of the Covenant" itself: the thought I needed. But to find it, I need to manually open every box. A task for a whole day, or even a week. The warehouse catalog (i.e., my search) was useless. I needed not just a catalog, but a smart curator. A keeper who knows not only what is in the boxes, but also how the contents of one box relate to another.

This was a sobering realization. The problem wasn't with the tool or the amount of information. The problem was with the very approach to interacting with it. I realized that I needed to stop searching my notes. I needed to learn to talk to them. To ask them complex, contextual questions and receive synthesized, meaningful answers, not just a list of links. This became the beginning of my journey into the world of RAG, and now I'll show you the map I followed.

The Anatomy of RAG. A Quick-Serve Kitchen

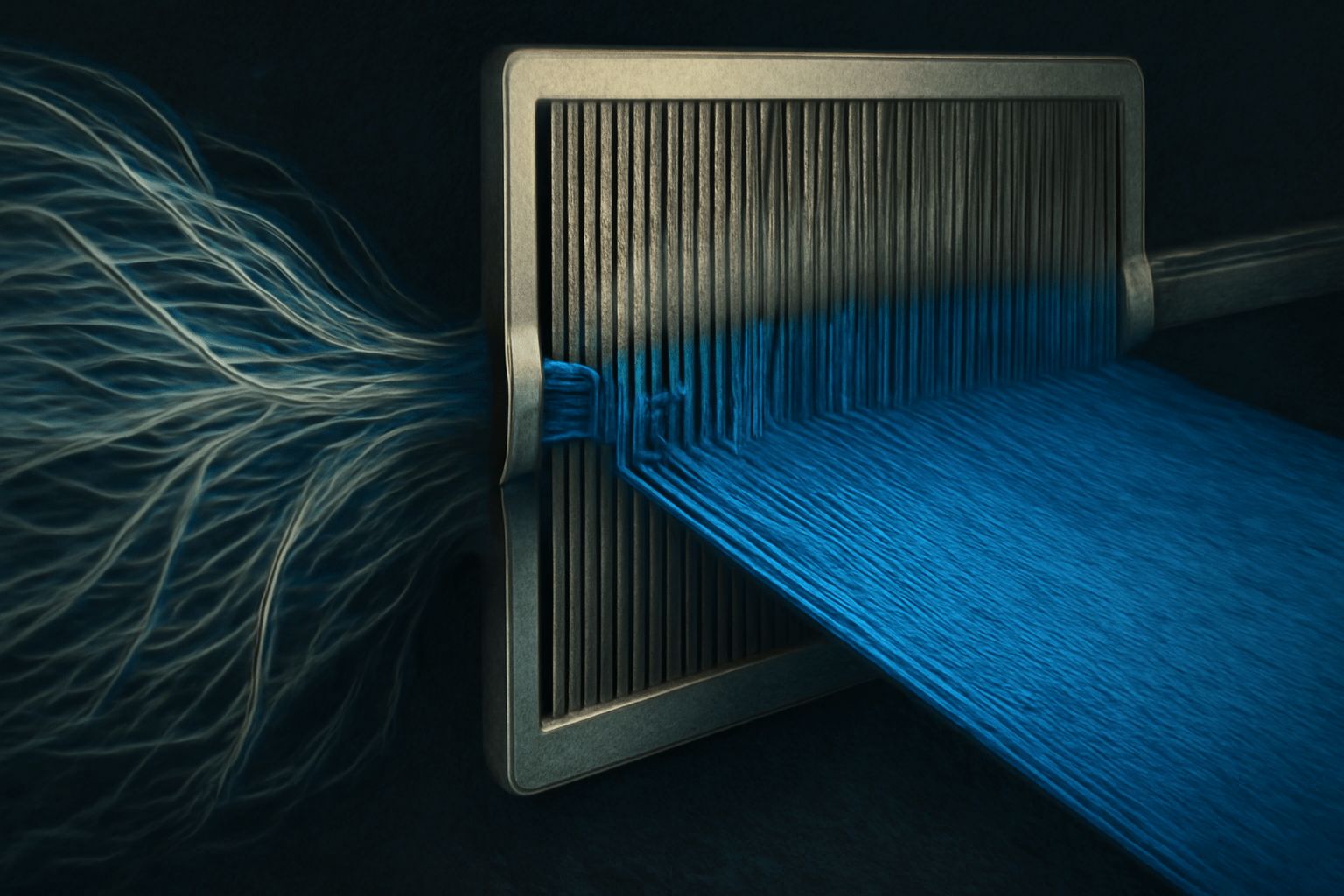

So, I've shown you the starting point of my journey – the realization of the problem. Now let's move on to the first point on the map: the basic architecture of Retrieval-Augmented Generation. At first glance, this acronym might suggest something from the arsenal of Google or OpenAI, but I assure you, the core idea is ingeniously simple. To understand it, imagine you're hiring a team of two specialists: a meticulous Librarian and a creative Chef.

The Librarian (Retrieval) is your search mechanism. Their task isn't just to find books by title, but to understand the essence of your query and retrieve precisely those pages from your entire giant library that are most relevant to the answer. They create nothing new; they only masterfully find the necessary information.

The Chef (Generation) is a large language model (LLM). They can't go to the library themselves. If you ask them something that isn't part of their "general education," they'll either honestly admit ignorance or (worse) start making things up. But give them a few relevant pages that the Librarian brought, and they will work wonders: they'll analyze them, find connections, synthesize a new thought, and present it to you as a coherent, unique answer.

The entire RAG process is their coordinated effort. You ask a question. The Librarian rushes to your knowledge base (vector store), finds 3-5 of the most suitable text fragments, and places them on the table in front of the Chef. And the Chef, based only on these fragments and your question, prepares the final answer.

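On a technical level, you can build such a prototype in literally an evening. Here's what my first pipeline looked like in LangChain. Two details are assumptions made to keep the example runnable: the notes are Markdown files in a local notes/ folder, and the Gemini model is reached through OpenRouter's OpenAI-compatible API.

```python
# Assumes a pre-1.0 langchain release (RetrievalQA lives in langchain.chains) plus the
# langchain-community, langchain-chroma, langchain-huggingface and langchain-openai packages
import os

from langchain.chains import RetrievalQA
from langchain_chroma import Chroma
from langchain_community.document_loaders import DirectoryLoader, TextLoader
from langchain_huggingface import HuggingFaceEmbeddings
from langchain_openai import ChatOpenAI
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Step 1: Loading and Chunking
# First, we "feed" all our notes to the system
# (assumption: Markdown files in a local "notes/" folder, e.g. an Obsidian vault)
documents = DirectoryLoader("notes/", glob="**/*.md", loader_cls=TextLoader).load()

# Then we divide them into small, overlapping pieces (chunks) of up to ~1000 characters
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
chunks = splitter.split_documents(documents)

# Step 2: The Librarian's Work (Indexing)
# Convert each chunk into a numerical vector (embedding) reflecting its meaning
embedding_model = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

# And create a "card catalog" for quick semantic search: a vector database
vector_store = Chroma.from_documents(documents=chunks, embedding=embedding_model)

# Step 3: The Chef's Work (Generation)
# "google/gemini-2.5-flash" is an OpenRouter model ID, so ChatOpenAI must point at
# OpenRouter's endpoint (assumption: the key is in the OPENROUTER_API_KEY env variable)
llm = ChatOpenAI(
    model="google/gemini-2.5-flash",
    base_url="https://openrouter.ai/api/v1",
    api_key=os.environ["OPENROUTER_API_KEY"],
    temperature=0,
)

# And create a chain that links the Librarian (top 4 chunks) and the Chef
qa_chain = RetrievalQA.from_chain_type(
    llm, retriever=vector_store.as_retriever(search_kwargs={"k": 4})
)

# And now we can "talk" to our notes!
question = "How can ideas from game development help optimize data rendering on the web?"
answer = qa_chain.invoke({"query": question})["result"]
print(answer)
```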

This is our "quick-serve kitchen." It's simple, fast, and you can already make an excellent "sandwich" from your data with it. When I first ran this code and asked the very question I couldn't answer manually, the system provided a clear summary from the relevant article in seconds. Magic. Honestly, for 80% of personal projects and many small tasks, this can be absolutely sufficient.

But if you want to open a restaurant... you'll quickly face complaints from customers. Let's take a look at my "complaint book."

The Hall of Failures. When Things Go Wrong in the Kitchen

The euphoria from the first successes of my "quick-serve kitchen" quickly faded. Yes, on simple, "ideal" questions, the system worked like clockwork. But as soon as I started testing it on more complex, ambiguous queries, utter chaos erupted in the kitchen. The answers became unpredictable, and sometimes downright useless. I started keeping my "complaint book," and soon three typical, painfully recognizable failure patterns emerged. I called them my personal "Hall of Failures."

"The Parrot"

The first and most frequent guest in this hall was the Parrot. The problem looked like this: I would ask a question requiring the synthesis of information from multiple sources or at least a comprehension of a single one, and in response, I would get... a verbatim quote from one of the chunks. For example, I asked: "What is the trade-off between speed and accuracy in nearest neighbor search algorithms, according to my notes?" I expected a structured answer with pros and cons. Instead, the system simply spat out a paragraph from an article where these words were mentioned. This wasn't a conversation; it was an improved grep. The Chef wasn't cooking a dish; they were just dumping a can of preserves onto the plate. The value of the model's generative part approached zero.

"The Fantasist"

This character was far more insidious and dangerous than the Parrot. The Fantasist's answers looked smooth, convincing, and on point. But upon closer inspection, they turned out to be artful misinformation. Here's what happened: the Librarian would bring the Chef several relevant but incomplete text fragments. The language model lacked sufficient data for an exhaustive answer, and instead of admitting this, it would start "fabricating" missing details, mixing information from my notes with its general, pre-trained knowledge. For instance, I asked about a specific implementation of the "Circuit Breaker" pattern in our project, about which I had a note. The answer contained correct service names from my note but described their interaction using a canonical example from Martin Fowler's blog, which was nowhere to be found in my note. It's like your Chef, unable to find basil in the fridge, decided to secretly add parsley to the dish, hoping you wouldn't notice the substitution. Such answers undermine trust in the system most severely.

"The Know-it-all"

The last exhibit in my hall is the Know-it-all, suffering from an inability to say "I don't know." I would ask a provocative question about something definitively not in my documents: "What is my opinion on the Svelte framework?" I had never written anything about it. The ideal answer would have been: "There is no information about the Svelte framework in your notes." But instead, the Know-it-all, ignoring the context of my documents, delved into its general memory and produced a standard Svelte overview, which can be found in the first Google search result. It violated the main RAG contract: to answer based on the provided data. This transformed my personal assistant into just another ChatGPT interface, rather than an expert on my knowledge.

Simply knowing about the problems isn't enough. How do you understand what exactly "poisoned" your answer in each specific case? Where did the breakdown occur: did the Librarian bring the wrong books, or was the Chef incompetent? For this, we need diagnostic tools.

Doctor RAG. My Diagnostic Kit

After spending enough time wandering through my "Hall of Failures," it became obvious: looking at bad answers is like looking at a smoking engine. Interesting, but utterly useless without tools to peek under the hood. I needed a way to move from merely stating symptoms ("the answer is parroting") to making a diagnosis ("which system component failed?"). And I realized that working on RAG is not programming; it's medicine. You listen to "symptoms" (bad answers), conduct "tests" (look at the retrieved chunks), and only then make a diagnosis: is the problem with the Librarian (Retrieval) or the Chef (Generation)?

So I assembled my own diagnostic kit, consisting of two simple yet incredibly powerful tools.

Tool 1: "The Stethoscope"

My first tool I called "The Stethoscope." This is a basic but essential instrument for any "Doctor RAG." Essentially, it's just a fixed list of 10-15 "control questions" that I run after every change in the system. This list is my regression test, my pulse monitor. It includes:

- A few "golden" questions that the system is obligated to answer perfectly.

- A couple of questions for synthesizing information from different notes.

- A couple of questions at the limits of search capabilities, to test the Librarian's accuracy.

- An obligatory "Know-it-all" question, about something definitely not in the data, to ensure the system still knows how to say "I don't know."

Every time I changed the chunk size, embedding model, or LLM temperature, the first thing I did was "listen" to the system with this stethoscope. This immediately showed whether I had "fixed" one thing by "breaking" another.
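A stethoscope like this is easy to automate. Below is a minimal sketch in plain Python, assuming the pipeline is wrapped in a function that takes a question and returns an answer string. The ControlQuestion type, the refusal phrases, and the sample questions are hypothetical, and the stub fake_ask stands in for the real chain so the harness runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Phrases that count as an honest "I don't know" (hypothetical; match your prompt's wording)
REFUSAL_MARKERS = ("no information", "i don't know", "not in your notes")


@dataclass
class ControlQuestion:
    question: str
    must_contain: List[str] = field(default_factory=list)  # lowercase phrases a good answer needs
    expect_refusal: bool = False  # True for the obligatory "Know-it-all" question


def run_stethoscope(ask: Callable[[str], str], suite: List[ControlQuestion]) -> Dict[str, bool]:
    """Ask every control question and return {question: passed}."""
    results = {}
    for cq in suite:
        answer = ask(cq.question).lower()
        refused = any(marker in answer for marker in REFUSAL_MARKERS)
        if cq.expect_refusal:
            passed = refused  # the system must admit ignorance
        else:
            passed = not refused and all(p in answer for p in cq.must_contain)
        results[cq.question] = passed
    return results


# Usage with a stub in place of the real qa_chain (hypothetical questions and answers)
suite = [
    ControlQuestion("How can game-dev ideas speed up web rendering?", ["rendering"]),
    ControlQuestion("What is my opinion on the Svelte framework?", expect_refusal=True),
]


def fake_ask(question: str) -> str:
    if "Svelte" in question:
        return "There is no information about Svelte in your notes."
    return "Your note describes borrowing game-dev rendering tricks for large charts."


report = run_stethoscope(fake_ask, suite)
print(f"{sum(report.values())}/{len(report)} control questions passed")
```

Keyword checks are crude, but they are deterministic and cheap, which is what a regression test run after every change needs.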

Tool 2: "The Answer Autopsy"

But the real breakthrough happened when I added the main diagnostic tool to my arsenal: "the answer autopsy." The idea is simple: the model's final answer is only the tip of the iceberg, and to understand why it came out the way it did, you need to see what lies beneath the surface. I modified my code so that for every query, the system returned not only the generated text but also the original chunks that the Librarian provided to the Chef. In LangChain's RetrievalQA, for example, this takes a single flag, return_source_documents=True, after which each result carries a source_documents list alongside the answer.
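To show the shape of an autopsy without a live model, here is a toy sketch: a keyword-overlap retriever stands in for the vector store, and a stub stands in for the LLM. Only the idea of returning the evidence alongside the answer comes from the text; the helper names, stopword list, and notes are hypothetical, and the output keys simply mirror the "result" and "source_documents" keys RetrievalQA uses when the flag is on.

```python
import re
from typing import Callable, Dict, List

# Tiny stopword list so that "the", "my", etc. don't count as matches (toy assumption)
STOPWORDS = {"a", "an", "the", "in", "of", "on", "to", "for", "and", "is",
             "my", "what", "how", "does", "do", "about"}


def terms(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, chunks: List[str], k: int = 4) -> List[str]:
    """Toy Librarian: rank chunks by shared terms, drop chunks with no overlap."""
    q = terms(question)
    scored = [(len(q & terms(chunk)), chunk) for chunk in chunks]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


def answer_with_autopsy(question: str, chunks: List[str],
                        generate: Callable[[str, List[str]], str]) -> Dict[str, object]:
    """Return the answer together with the evidence the Chef actually saw."""
    sources = retrieve(question, chunks)
    return {"result": generate(question, sources), "source_documents": sources}


# Usage: toy notes and a stub Chef that only reports how much context it received
notes = [
    "Circuit breaker in billing-service trips after 5 failed calls to payments-api.",
    "Nearest neighbor search: HNSW trades a little accuracy for much faster queries.",
    "Weekend plan: repot the basil.",
]


def stub_chef(question: str, context: List[str]) -> str:
    return f"answer built from {len(context)} chunk(s)"


for q in ["How does the circuit breaker in billing-service work?",
          "What is my opinion on the Svelte framework?"]:
    out = answer_with_autopsy(q, notes, stub_chef)
    print(q, "->", out["result"])
    for i, doc in enumerate(out["source_documents"], 1):
        print(f"  source {i}: {doc}")
```

The second question comes back with an empty source list, which is exactly the "Know-it-all" signature described below.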

And this changed everything. Now I could conduct a real post-mortem examination of every failed answer.

- Symptom: "The Parrot." The autopsy showed that the Librarian found only one, but very large and relevant chunk. The Chef simply had no other material for synthesis and chose the safest route – copying what they were given. Diagnosis: Problem in Retrieval. Chunks are too large or poorly cut.

- Symptom: "The Fantasist." The autopsy showed that the Librarian brought 4 chunks. Two of them were on-topic, while the other two were only indirectly related to the query (contained the same keywords but in a different context). The Chef, like a diligent student, tried to weave a story from everything they were given and ended up mixing truth with "noise." Diagnosis: Problem in Retrieval. Low search precision; need to better filter out irrelevant documents.

- Symptom: "The Know-it-all." The autopsy showed the most interesting thing: the list of source documents was empty. The Librarian found nothing. My prompt did not contain explicit instructions on what to do in this case, and the LLM, receiving an empty context, simply answered the question from its general knowledge base. Diagnosis: Problem in Retrieval and prompt engineering.
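The "Know-it-all" diagnosis points to two cheap fixes, sketched here in plain Python: an explicit instruction in the prompt to refuse when the context lacks the answer, and a guard that never calls the model at all when retrieval comes back empty. The template wording, the NO_ANSWER text, and the helper names are assumptions. In LangChain, a template like this (with the refusal text already filled in, leaving only {context} and {question}) should be passable to RetrievalQA's default "stuff" chain via chain_type_kwargs={"prompt": PromptTemplate(...)}.

```python
from typing import Callable, List, Optional

NO_ANSWER = "There is no information about this in your notes."

# Explicit instructions for the Chef: stay inside the context, otherwise refuse
PROMPT_TEMPLATE = """Answer the question using ONLY the context below.
If the context does not contain the answer, reply exactly: "{no_answer}"

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question: str, sources: List[str]) -> Optional[str]:
    if not sources:
        return None  # nothing retrieved: there is nothing to ground an answer in
    context = "\n\n---\n\n".join(sources)
    return PROMPT_TEMPLATE.format(no_answer=NO_ANSWER, context=context, question=question)


def guarded_answer(question: str, sources: List[str], llm_call: Callable[[str], str]) -> str:
    prompt = build_prompt(question, sources)
    if prompt is None:
        return NO_ANSWER  # short-circuit: the LLM never sees an empty context
    return llm_call(prompt)


# Usage with a stub LLM that would happily improvise from general knowledge
def chatty_llm(prompt: str) -> str:
    return "Svelte is a compiler-based UI framework..."


print(guarded_answer("What is my opinion on the Svelte framework?", [], chatty_llm))
```

The guard handles the empty-context case deterministically; the prompt instruction covers the subtler case where chunks were retrieved but don't actually contain the answer.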

It was this "Dr. House" approach that allowed me to understand that in 9 out of 10 cases, the root of the problem lies not in hallucinations or the Chef's (LLM's) laziness. They almost always honestly try to prepare a dish from the ingredients they are given. Most problems lie on the Librarian's side. It's time to improve our kitchen.

Upgrading the Kitchen. From Cafeteria to Restaurant

Armed with my diagnostic kit, I gained a clear understanding: my Librarian was diligent but not very astute. They needed a serious upgrade. This wasn't a chaotic replacement of everything in the hope that something would work. This was targeted surgery, where each improvement was a specific cure for a particular "illness" from my "Hall of Failures." I began transforming my simple cafeteria into a real restaurant.

The upgrades came in levels, each aimed at one illness. The first and most important one targeted "The Parrot." The diagnosis showed that the problem lay in chunks that were too large and "ragged." My naive RecursiveCharacterTextSplitter cut text by a formal criterion, a character budget: it preferred to break at paragraphs and line breaks, but whenever a passage ran over the limit, it fell back to cutting between words, often severing a thought mid-sentence. The Chef received one huge, raw piece of information and, having no alternatives, simply served it.

The cure is semantic chunking. Instead of cutting text with a blunt knife along a ruler, this approach uses the embedding model itself to find semantic boundaries. It groups sentences that are close in meaning, creating atomic, self-contained fragments of meaning. This is like replacing blunt knives with sharp Japanese ones. Now the Librarian brought the Chef not one large piece, but several small, perfectly sliced, and meaningful ingredients. This gave the LLM room to maneuver, the ability to combine ideas rather than simply quote. The "Parrot" problem was partially resolved.
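The core idea can be sketched in a few lines of Python. This is a simplified illustration, not a library implementation: sentences are embedded one by one, and a new chunk starts wherever two neighboring sentences fall below a similarity threshold. The bag-of-words bow_embed is a toy stand-in for a real embedding model, and the 0.2 threshold is arbitrary. LangChain ships a more elaborate version as SemanticChunker in langchain_experimental, which picks breakpoints statistically rather than with a fixed cutoff.

```python
import math
import re
from collections import Counter
from typing import Callable, Dict, List

Vector = Dict[str, float]


def bow_embed(sentence: str) -> Vector:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return dict(Counter(re.findall(r"[a-z]+", sentence.lower())))


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def semantic_chunks(text: str, embed: Callable[[str], Vector], threshold: float = 0.2) -> List[str]:
    """Split into sentences, then start a new chunk wherever neighboring
    sentences stop resembling each other (similarity below threshold)."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    chunks, current = [], [sentences[0]]
    prev_vec = embed(sentences[0])
    for sentence in sentences[1:]:
        vec = embed(sentence)
        if cosine(prev_vec, vec) < threshold:  # topic shift: close the current chunk
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
        prev_vec = vec
    chunks.append(" ".join(current))
    return chunks


text = ("Canvas rendering slows down with many points. "
        "Rendering fewer points per frame keeps canvas fast. "
        "My sourdough starter needs feeding daily. "
        "The starter smells sour after feeding.")
for chunk in semantic_chunks(text, bow_embed):
    print("-", chunk)
```

The two rendering sentences end up together and the two sourdough sentences form a second chunk, because similarity drops to zero at the topic boundary.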

Next in line was the insidious "Fantasist." Their illness was more complex: the Librarian brought not only quality ingredients to the kitchen but also various "noise"—documents that were only indirectly related to the topic. To defeat "The Fantasist," a combination of improvements was required.

Firstly, I changed the embedding model. General-purpose models like all-MiniLM-L6-v2 are good for a start, but they don't know the specifics of my notes. I switched to specialized embeddings that are better "tuned" for technical texts. This is like training staff to understand exotic ingredients. Instead of considering all green leaves as lettuce, they start distinguishing arugula from spinach. The accuracy of the initial search (retrieval) significantly improved.

But the main weapon against "The Fantasist" was the Re-ranker, a fundamentally new team member in my kitchen: a strict sous-chef. Their job is simple but critically important. After the Librarian brings the initial batch of, say, 10 potentially relevant documents, the sous-chef re-ranker meticulously inspects each one. They use a more complex and "expensive" model, typically a cross-encoder that reads the question and the document together instead of comparing two precomputed vectors, to evaluate how well each document truly answers the original question. Then they ruthlessly discard half or more, leaving the Chef with only the 3-5 best, cleanest ingredients. This is a powerful filter that screens out "noisy" sources before they enter the prompt and trigger "fantasy" in the LLM.
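The two-stage flow (retrieve broadly, then re-score and cut) is sketched below. The rerank function accepts any scoring callable; here a toy term-coverage scorer is used so the example runs without a model, and the stopwords, thresholds, and sample documents are assumptions. In practice the scorer would be a cross-encoder, for example sentence-transformers' CrossEncoder with a model such as cross-encoder/ms-marco-MiniLM-L-6-v2, whose predict() scores (query, passage) pairs, ideally in one batch rather than one call per document.

```python
import re
from typing import Callable, List

STOPWORDS = {"a", "an", "the", "in", "of", "on", "to", "for", "and", "is", "my", "what", "how", "does"}


def terms(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def coverage_score(question: str, doc: str) -> float:
    """Toy scorer: share of question terms the document covers.
    A real re-ranker would score the (question, doc) pair with a cross-encoder."""
    q = terms(question)
    return len(q & terms(doc)) / len(q) if q else 0.0


def rerank(question: str, candidates: List[str], score: Callable[[str, str], float],
           top_n: int = 4, min_score: float = 0.0) -> List[str]:
    """Sous-chef: re-score every candidate, drop weak ones, keep the best top_n."""
    scored = sorted(((score(question, doc), doc) for doc in candidates),
                    key=lambda pair: pair[0], reverse=True)
    return [doc for s, doc in scored if s >= min_score][:top_n]


# Usage: a first-pass batch from the Librarian (hypothetical notes)
question = "circuit breaker timeout in billing service"
first_pass = [
    "Circuit breaker pattern overview (generic).",
    "Breaker panel at home needs a new fuse.",
    "Billing service circuit breaker opens after a 2 s timeout.",
    "Billing service release notes.",
    "Sourdough timeout: let the dough rest.",
]
for doc in rerank(question, first_pass, coverage_score, top_n=3, min_score=0.5):
    print(doc)
```

With a minimum score, the generic pattern overview and the notes that merely share a keyword are dropped, which is exactly the kind of "noise" that fed the Fantasist.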

From Personal Hobby to Business Strategy

My personal project was, in essence, a laboratory exercise, a testing ground for ideas. But after assembling a team in my kitchen capable of competing for a Michelin star, I couldn't help but wonder: how can this approach be scaled from a single "home kitchen" to an entire "food factory" for a medium-sized technology company? The lessons learned from my project let me sketch fairly concretely what this would look like in business. Let's conduct this thought experiment.

The Problem. The Universal "Knowledge Search Tax"

In every company I've worked for, there's a huge, ruthless, and largely invisible tax on productivity: the time employees spend searching for scattered information. Technical documentation lies in Confluence, tasks and discussions in Jira, informal but critically important dialogues in Slack, and official policies and templates on Google Drive. A corporate knowledge base is not a single continent, but an archipelago of islands with no bridges between them. Everyone pays this "search tax" every day. But what if we can build bridges and create a single transportation hub?

Project Proposal. The "Company's Digital Concierge"

The idea is to create a "digital concierge" based on the principles we refined in my "kitchen." This is not just a chatbot. This is a virtual employee available 24/7 who has read absolutely all company documents and is ready to instantly provide a synthesized summary for any question, from "How do I apply for health benefits?" to "Show me a code example for authentication in our API." Such a system would change the very approach to working with knowledge: from the agonizing question "WHERE can I find this?" to the productive query "EXPLAIN to me how this works, based on our documents."

Business Case. From Investment to ROI

Any good idea needs a "back-of-the-napkin calculation." Let's do one right now, just in a bit more detail. Implementing corporate RAG is not a leap into the unknown but an ascent of a mountain with three base camps. Each camp is already a working product that delivers value.

Part 1: Roadmap and Investment (Cost of Entry)

We won't build a spaceport in a year. We will break the project down into manageable phases.

- Phase 1: MVP ("Quick-Serve Kitchen").

  - Task: Connect 1-2 of the most problematic sources (e.g., Confluence and the HR knowledge base). Create a basic RAG interface and roll it out to a focus group of the 10-15 most active employees.

  - Goal: Quickly prove the viability of the idea, gather initial feedback, and demonstrate real value with live examples.

  - Time Estimate: 3-4 weeks of work for a team of 1-2 engineers.

- Phase 2: Pilot Implementation ("Restaurant Upgrade").

  - Task: Expand the solution to an entire department (e.g., IT). Connect more sources (Jira, Google Drive), add the Re-ranker discussed above for accuracy, and add hybrid search (combining keyword and semantic retrieval). Create a user-friendly UI and the "Doctor RAG kit" for monitoring answer quality.

  - Goal: Create a reliable, accurate, and scalable tool that users truly love.

  - Time Estimate: Another 6-8 weeks of work.

In total, building a fully functional pilot, ready for company-wide deployment, takes approximately 3 months of work for a small engineering team (3-4 weeks plus 6-8 weeks, i.e., 9-12 weeks). That is our full engineering investment. Now let's look at the return.

Part 2: Benefit Calculation and Break-Even Point

Let's calculate for a hypothetical company of 100 employees.

- Investment: Let's take a team of three people (two engineers and one part-time manager/analyst, whom we count at full time to stay conservative). 3 months is approximately 12 weeks. 12 weeks * 40 hours/week * 3 people = 1440 person-hours. This is our investment.

- Problem: According to many studies, employees spend up to 20% of their working time searching for information. Let's be conservative and take 2.5 hours per week per person, or about 6% of a 40-hour week.

- Forecast: Our "digital concierge" reduces this time by 80%, saving each employee 2 hours per week.

- Weekly Savings: 2 hours * 100 employees = 200 hours.

- Monthly Savings: 200 hours * 4 weeks = 800 hours.

And now for the most interesting part. With savings of 800 hours per month, our initial investment of ~1440 hours is fully recouped in less than two months from the moment the system is fully launched.
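The whole napkin calculation fits in a few lines, which makes it easy to rerun with your own company's numbers. All inputs below are the hypothetical figures from the list above, including the 4-weeks-per-month simplification; nothing here is measured data.

```python
# Inputs taken from the business case above (hypothetical company, not measured data)
TEAM_SIZE = 3                 # two engineers + one part-time manager counted at full time
PROJECT_WEEKS = 12            # ~3 months
HOURS_PER_WEEK = 40
EMPLOYEES = 100
SEARCH_HOURS_PER_WEEK = 2.5   # conservative time each employee spends searching
REDUCTION = 0.8               # assumed share of that time the concierge saves
WEEKS_PER_MONTH = 4           # simplification used in the text

investment_hours = TEAM_SIZE * PROJECT_WEEKS * HOURS_PER_WEEK   # 1440
saved_per_person = SEARCH_HOURS_PER_WEEK * REDUCTION           # 2.0 hours/week
weekly_savings = saved_per_person * EMPLOYEES                  # 200.0 hours
monthly_savings = weekly_savings * WEEKS_PER_MONTH             # 800.0 hours
break_even_months = investment_hours / monthly_savings         # 1.8
annual_savings = monthly_savings * 12                          # 9600.0 hours

print(f"Investment: {investment_hours} h, break-even after {break_even_months:.1f} months, "
      f"then ~{annual_savings:,.0f} h saved per year")
```

Halving REDUCTION to 0.4 still gives a break-even of 3.6 months, so the conclusion is not very sensitive to the optimistic 80% forecast.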

It's like building a solar power plant. First, you invest in panels and installation. But after a few months, it pays for itself, and you start getting virtually free energy for years to come. RAG is your "solar power plant" for productivity.

A project that pays for itself within one quarter and then saves roughly 9,600 hours a year (800 hours * 12 months) is not an expense but one of the smartest assets a business can invest in today.

Architectural Blueprint for Your RAG Project

We just conducted a thought experiment and saw how a project born from personal pain can turn into a powerful business asset with a return on investment in just a few months. You might have thought that the path from a "quick-serve kitchen" to a company's "digital concierge" is a complex and risky R&D project. But all my experience screams the opposite. Success in building RAG systems is not the result of a brilliant epiphany, but a consequence of a disciplined iterative process.

I have walked this path and compiled all my findings into a concise, reproducible algorithm. Let's call it "The RAG Builder's Blueprint". This is not a rigid recipe to follow blindly. Rather, these are the principles of a chef: start simple, test at each stage, improve precisely. This cycle is your most reliable tool for building a system of any scale.

Step 1. Start (The Quick-Serve Kitchen)

Don't try to build a spaceship right away. Start with a bicycle. Assemble the simplest RAG, as we did in the "Quick-Serve Kitchen" section: a data loader, a basic splitter, a standard embedding model, and any available LLM. Don't spend more than a few days on this. Your goal at this stage is not to get perfect answers, but to create a working prototype that you can start testing. This is your kilometer zero, your starting point.

Step 2. Test (The Complaint Book)

Now that you have a working, albeit imperfect, system, start breaking it. Compile your "complaint book": a list of 10-20 real, complex questions on which your system makes mistakes. This is where the "Parrots," "Fantasists," and "Know-it-alls" are revealed. This set of questions will become your "Stethoscope," the main tool for regression testing at all subsequent stages.

Step 3. Diagnose (The Doctor's Kit)

Don't rush to fix it! First, make a diagnosis. For each bad answer from your "complaint book," conduct an "autopsy." Make the system show you not only the final answer but also the original chunks that the Librarian passed to the Chef. In 90% of cases, you will see the root of the problem right here. Did the Librarian bring irrelevant documents? Problem in retrieval. Did they bring relevant ones, but the LLM ignored or distorted them? Problem in generation or the prompt. This step is the most important in the entire cycle.

Step 4. Upgrade (Improving the Kitchen)

Only after making a diagnosis can you apply treatment. And the main rule here is one change at a time. If your diagnosis is "poor chunk quality," try changing the chunking strategy to semantic. If the diagnosis is "too much noise in search results," try adding a Re-ranker. Don't change everything at once. Implement one improvement, then immediately return to Step 2 to run the tests.

Step 5. Repeat

Go back to your "complaint book" and run the tests. Has it improved? Excellent, record the result and move on to the next problem. Has it gotten worse? Revert the change and try a different hypothesis. This cycle of "Test → Diagnose → Upgrade → Repeat" is the engine of your project. It transforms RAG development from witchcraft into an engineering discipline.
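The "one change at a time, keep it or revert it" rule can be written down as a tiny helper. This is a hypothetical sketch: config keys like "chunking" and "reranker" are illustrative, and fake_evaluate stands in for running the control-question suite and counting how many of 10 questions pass, with made-up effects for each change.

```python
from typing import Callable, Dict, Tuple

Config = Dict[str, object]


def try_one_change(config: Config, key: str, value: object,
                   evaluate: Callable[[Config], int]) -> Tuple[Config, int]:
    """Test -> Upgrade -> Test: apply ONE change, keep it only if the
    control-question score improves, otherwise revert to the old config."""
    baseline = evaluate(config)
    candidate = {**config, key: value}
    new_score = evaluate(candidate)
    if new_score > baseline:
        return candidate, new_score   # improvement: record it and move on
    return config, baseline           # same or worse: revert


# Stub evaluator standing in for "how many of 10 control questions pass" (made-up effects)
def fake_evaluate(cfg: Config) -> int:
    passed = 5
    if cfg["chunking"] == "semantic":
        passed += 2
    if cfg["reranker"]:
        passed += 2
    if cfg["temperature"] > 0.5:
        passed -= 3
    return passed


config: Config = {"chunking": "recursive", "reranker": False, "temperature": 0}
for key, value in [("chunking", "semantic"), ("temperature", 0.9), ("reranker", True)]:
    config, score = try_one_change(config, key, value, fake_evaluate)
    print(f"{key}={value!r}: score {score}/10, config {config}")
```

Re-evaluating the baseline on every step costs an extra run, but with a nondeterministic LLM in the loop it keeps each comparison honest.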

My archive came alive thanks to this approach. But, as our thought experiment shows, the same technology and the same iterative process can bring entire companies to life, transforming their scattered archives into a collective intelligence.

The Kitchen of the Future

The architectural blueprint we've discussed is the design of the best kitchen we can build today. It allows us to transform dead archives into living, useful conversational partners, solving real problems and bringing measurable value. But, analyzing the most advanced research and projects in this field, I can tell you one thing: we have only just served the appetizers. The dishes already being prepared in the "kitchens of the future" will change our understanding of how we interact with information. Here are just three brief sketches of what awaits us beyond the horizon.

Multimodal RAG. Today, our Librarian works with text. But what if they could bring not only text clippings but also diagrams from PDFs, tables from reports, or specific timestamps from multi-hour video lectures? Imagine asking not "What does the documentation say about our architecture?" but "Show me the CI/CD pipeline diagram from the Q3 presentation and explain the role of this specific block." The system would extract the image, analyze it, and provide an answer based on visual and textual information simultaneously. This is a transition from the one-dimensional world of text to a full understanding of complex, composite documents.

RAG Agents. And what if our Chef could not only answer a question but also perform a task based on the found information? This is the next evolutionary leap. You ask not "How do I create a new repository in our GitLab according to company standards?" but "Create a new repository for the 'Phoenix' project with a basic template for a Python service." The system would find the internal regulations, understand the sequence of actions, generate the necessary API calls or CLI commands, and execute them on your behalf, returning with a report: "Repository created. Here's the link." This transforms the system from a passive advisor into an active digital assistant that takes on routine operations.

Self-Learning RAG. Finally, imagine a system that constantly learns from your feedback and gets better over time. Every time you "like" or "dislike" an answer, you're not just giving a rating – you're providing a training signal. The system can analyze these signals and draw conclusions: "When users ask about 'Authentication,' answers based on this document from Confluence get the highest ratings. I'll increase the priority of this source." Or "For questions about 'vacation policy,' I consistently provide inaccurate answers. I should flag the source documents as outdated and requiring review." The system stops being a static configuration and transforms into a dynamic, self-improving entity that adapts to the company's knowledge and needs.
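One simple way to turn likes and dislikes into the two conclusions described above is to aggregate feedback per source document. This is a hypothetical sketch, not an existing library: it assumes each answer's source documents are logged (as in the autopsy) so a thumbs-up or thumbs-down can be credited to them, and the smoothing, weights, thresholds, and source IDs are arbitrary choices.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def learn_from_feedback(feedback: Iterable[Tuple[str, bool]],
                        min_votes: int = 3,
                        flag_below: float = 0.3) -> Tuple[Dict[str, float], List[str]]:
    """feedback: (source_id, liked) pairs from thumbs-up/down on answers.
    Returns per-source ranking weights (1.0 = neutral) and sources to flag for review."""
    likes: Dict[str, int] = defaultdict(int)
    votes: Dict[str, int] = defaultdict(int)
    for source, liked in feedback:
        votes[source] += 1
        likes[source] += int(liked)

    weights, flagged = {}, []
    for source, n in votes.items():
        smoothed = (likes[source] + 1) / (n + 2)   # Laplace smoothing: few votes stay near 0.5
        weights[source] = 2 * smoothed            # >1 boosts the source, <1 demotes it
        if n >= min_votes and likes[source] / n < flag_below:
            flagged.append(source)                # consistently disliked: likely outdated
    return weights, flagged


feedback = ([("confluence/auth-guide", True)] * 4
            + [("drive/vacation-policy", False)] * 3
            + [("drive/vacation-policy", True)])
weights, flagged = learn_from_feedback(feedback)
print(weights)
print("needs review:", flagged)
```

The weights could multiply the re-ranker's scores, while the flagged list goes to a human, since a disliked answer may come from a bad retrieval rather than a bad document.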

We have only just begun writing the first chapter in the book of information interaction. The future promises to be incredibly interesting.

Stay curious.