MoE vs. SSM: Two Paths to Escape the Transformer's Tyranny of the Square

The Transformer architecture is, without a doubt, ingenious. Its attention mechanism let models capture connections and dependencies in data with unprecedented depth. But the design has an inherent flaw, one that has grown from a technical detail into the main obstacle to AI capable of deep understanding across long inputs. The name of this flaw is "The Tyranny of the Square".

Technically, this is described as `O(n²)` complexity: because every token is compared with every other token, processing a sequence of `n` tokens requires on the order of `n*n` operations. In practice, doubling the context length quadruples the amount of attention computation. This is not just expensive; it's a wall we've hit at full speed.
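To make the quadratic growth tangible, here is a minimal sketch in plain Python. It is a toy, not a real attention layer: the input vectors double as queries and keys, there are no learned projections, and `make_tokens` is a hypothetical stand-in for real embeddings. All it shows is how many query-key pairs get scored.

```python
import math

def naive_attention_scores(vectors):
    """Score every token against every other token (scaled dot product).

    Toy version: the input vectors serve as both queries and keys,
    with no learned projections. Returns the score matrix and the
    number of query-key pairs that were evaluated.
    """
    d = len(vectors[0])
    pairs = 0
    scores = []
    for q in vectors:
        row = []
        for k in vectors:
            row.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
            pairs += 1
        scores.append(row)
    return scores, pairs

def make_tokens(n, d=4):
    """Hypothetical stand-in for n token embeddings of width d."""
    return [[float((i + j) % 3) for j in range(d)] for i in range(n)]

for n in (8, 16, 32):
    _, pairs = naive_attention_scores(make_tokens(n))
    print(n, pairs)  # pairs == n * n
```

Each doubling of `n` multiplies the pair count by four, which is the whole problem in miniature.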

To grasp the scale of the problem, let's conduct a thought experiment. Imagine you are a "corporate archaeologist." Your task is to analyze your company's annual Slack and Confluence archives, consisting of millions of tokens, to find the root cause of a major project failure. You need to track shifting requirements, identify hidden conflicts, and find that very "Ariadne's thread" in this gigantic labyrinth of data. A classic Transformer here is like an archaeologist with a perfect magnifying glass but suffering from severe amnesia. It will flawlessly analyze a single message, understand its nuances and context within its tiny window of attention. But it is absolutely incapable of retaining the whole picture and connecting a decision made in January with its consequences in September.

The attention mechanism itself, where every token must "talk" to every other, can be called a "Royal Council." It is incredibly effective for a small circle of advisors, where all participants can communicate directly and arrive at an optimal solution. But when this "council" grows to hundreds of thousands, and then millions of members, it becomes catastrophically expensive and inefficient, leading to a complete system paralysis. We have learned to build brilliant sprinters, but the task requires us to be marathon runners. And this tyranny of quadratic complexity is forcing the entire industry to seek a way out.

The Economics of Instability

"The Tyranny of the Square" is not just an elegant formula from a Computer Science textbook, but a very real wall that has turned our technological breakthrough into an economic dead end. It's important to understand: the search for a replacement for Transformers is not an academic exercise or a chase after fads. It's a race for survival, because the economics of their scaling has reached a dead end.

Why is the entire industry so desperately seeking a way out right now? Because continuing on the old path means going bankrupt.

Every new percentage point of quality in SOTA (State-of-the-Art) models costs disproportionately more than the last. Training and inference costs for flagship models are already measured not in tens, but in hundreds of millions of dollars. The industry has reached a plateau where further "brute-force" scaling (simply increasing the number of parameters and the volume of data) becomes economically insane. We've learned to build increasingly powerful engines, but the cost of building and fueling them is growing so rapidly that soon only a few will be able to afford them.

This has posed the most important question for architects: how to create models that are not just larger, but fundamentally smarter and more efficient? How to escape the trap where every step forward requires geometrically increasing computational power? The answer came not as a single "solution," but in the form of two completely different, yet equally promising architectural philosophies that offer their own path out of tyranny.

"Divide and Conquer" — The Philosophy of Mixture-of-Experts (MoE)

The first path out of this tyranny is the philosophy of Mixture-of-Experts (MoE). At its core, it attempts to recreate in silicon one of the most effective principles of our brain: selective attention and specialization of neural circuits. Instead of engaging the entire gigantic neural network to process every incoming token, MoE models use a special "gating network" (or "router") that directs each token to only a few of the most suitable "experts": small, specialized subnetworks. This is like the brain activating only the neural circuits responsible for logic to solve a mathematical problem, leaving those responsible for facial recognition untouched. This approach radically reduces the amount of computation spent on each token while allowing the overall "knowledge capacity" of the model to grow to trillions of parameters.
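The routing idea can be sketched in a few lines. This is an illustrative toy, assuming top-k routing with softmax weights renormalized over the chosen experts. The experts here are hypothetical scalar functions, and the router logits are hard-coded, whereas a real model would produce them with a learned layer.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts for one token.

    Returns [(expert_index, weight), ...]; weights are the softmax
    probabilities renormalized over the selected experts only.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(token, router_logits, experts, k=2):
    """Run only the selected experts and mix their outputs."""
    chosen = route_top_k(router_logits, k)
    return sum(weight * experts[i](token) for i, weight in chosen)

# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: -x, lambda x: x ** 2]
# Hard-coded for illustration; a real router computes these per token.
router_logits = [0.1, 2.0, -1.0, 1.5]

print(route_top_k(router_logits))            # experts 1 and 3 are selected
print(moe_layer(3.0, router_logits, experts))
```

Experts 0 and 2 are never called for this token, which is where the compute savings come from.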

Let's return to our corporate archaeologist. Armed with an MoE model, he is no longer a lone wolf with a magnifying glass. Now, he is the head of a team of specialists. When a code snippet lands on his desk, the model activates the "Python expert." When he analyzes a legal document, the "law expert" steps in. This architecture perfectly understands the essence of each individual document, achieving incredible depth in this regard. But, as with any real team, it has a weakness: it is still limited in its ability to connect document #1 with document #5000 if they belong to completely different areas and require different experts. The problem of quadratic complexity within the context window has not disappeared.

By September 2025, this approach was no longer exotic and had spawned a whole galaxy of powerful models shaping the industry landscape:

- MiniMax-M1 (MiniMax): As we discussed in the article, this is a landmark hybrid model combining MoE architecture (456 billion total parameters with 46 billion active) with an innovative "Lightning Attention" mechanism. Its main advantage is a gigantic context window of 1 million tokens, making it one of the most powerful open models for analyzing ultra-long documents.

- DeepSeek V3.1 (DeepSeek AI): Released in August 2025, this model is notable not only for its hybrid MoE architecture but also for its very flexible MIT license. Its key feature is two operating modes: fast for simple tasks and deep for complex reasoning, making it a versatile tool.

- Llama 4 Maverick & Scout (Meta): The latest generation of models from Meta, which has fully transitioned to MoE and become natively multimodal (text, images, video). Llama 4 Scout particularly stands out with a record context window of up to 10 million tokens, opening up possibilities for analyzing vast amounts of data like annual reports.

- GPT-OSS (OpenAI): A landmark return by OpenAI to releasing models with open weights. This family of MoE models (120 and 20 billion parameters) under the Apache 2.0 license has become a hit in the corporate environment, allowing powerful AI to be deployed on custom infrastructure without compromising privacy.

- Qwen3 (Alibaba): A series of advanced open MoE models positioned as a direct competitor to leading proprietary systems like GPT-4o. Thanks to high performance and the Apache 2.0 license, Qwen3 has become one of the pillars for business and scientific research in the open-source segment.

In the comments to the previous article on local LLM deployment, users highly praised the latter two models, noting that they represent the future for local application.

All of them use the same principle: scale the total number of parameters to achieve an unprecedented volume of knowledge, while keeping the number of active parameters at an acceptable level to reduce inference cost. It is important to understand: the impressive context windows in some of these models come not from the MoE architecture itself, but from additional innovations, such as new attention mechanisms, that work in tandem with MoE.

However, this elegance comes at a price. The main "engineering demon" of MoE is its colossal VRAM requirement. In a typical deployment, all experts must be loaded into GPU memory, even though only a few of them are used for any given token. This makes inference extremely resource-intensive and creates a serious barrier to widespread deployment. MoE eases the problem of per-token compute but creates a problem of infrastructural complexity.
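A back-of-the-envelope calculation shows the gap between what must sit in memory and what actually works on each token. The sketch below uses the MiniMax-M1 figures quoted above and assumes 16-bit weights (2 bytes per parameter). That precision is my assumption, and the estimate ignores activations, the KV cache, and any quantization or offloading.

```python
def weight_memory_gb(n_params, bytes_per_param=2):
    """Memory for the weights alone, in GB (10**9 bytes).

    bytes_per_param=2 assumes 16-bit weights; this is an assumption,
    not a statement about how any particular model is served.
    """
    return n_params * bytes_per_param / 1e9

total_params = 456e9   # total parameters (figure quoted in the list above)
active_params = 46e9   # parameters active per token (same source)

resident_gb = weight_memory_gb(total_params)
active_share = active_params / total_params

print(f"weights that must be resident: {resident_gb:.0f} GB")
print(f"share of weights used per token: {active_share:.1%}")
```

Roughly a tenth of the weights do the work for any given token, yet all of them occupy memory.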

"Remember Everything" — The Philosophy of State Space Models (SSM)

The "divide and conquer" philosophy radically increases efficiency, but it leaves another, no less acute problem unsolved: how to work effectively with context measured not in thousands, but in millions of tokens? Remember our archaeologist. An MoE model equips him with a team of narrow specialists, but it still doesn't let him link two documents that sit a year apart in the archive and call for entirely different experts. The quadratic cost of attention within the context window is still there.

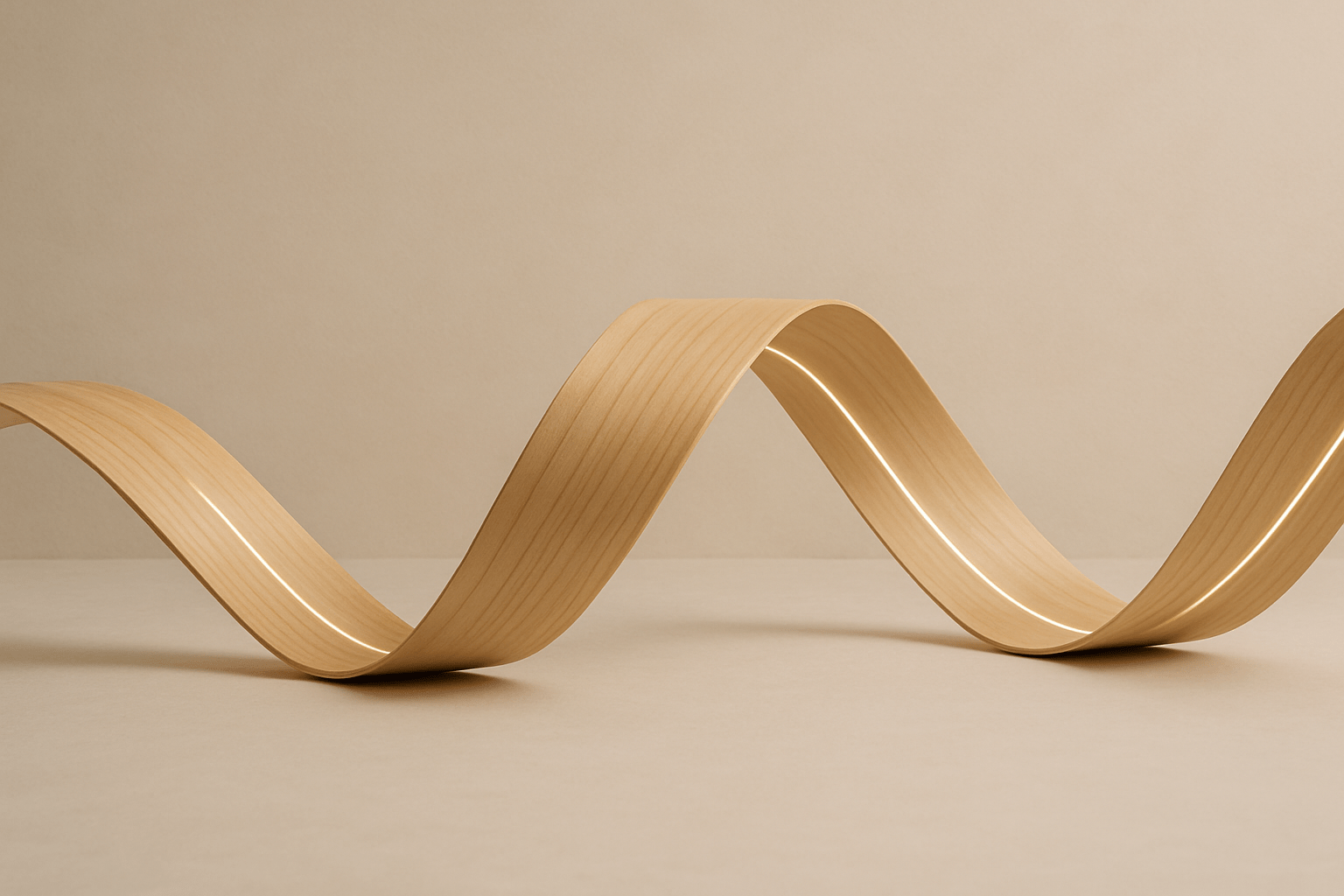

This is where a completely different philosophy comes into play: the philosophy of continuous memory, embodied in State Space Models (SSM). This architecture loosely mimics how our own consciousness works. We don't sift through every past memory to form a new thought; instead, all our past experience is compressed into a continuous "state" or "stream of consciousness" that influences our perception of the present moment. SSM models implement this principle in silicon, processing a sequence with linear complexity, `O(n)`, which finally overthrows "The Tyranny of the Square."

For our archaeologist, SSM is not just a new tool; it's a breakthrough. Instead of re-reading the entire archive to find connections between every message, the model reads the entire dataset from beginning to end, like one long story, constantly updating its internal "understanding" of the project. It can spot that very message sent in January and connect it to consequences that will manifest in September—this is how it finds that thread in the chaos of millions of documents. This approach transforms the search problem into a continuous update of knowledge, rather than a combinatorial search.
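The "read once, keep updating the state" idea corresponds to a simple recurrence. Here is a minimal sketch with a scalar state and fixed coefficients `a`, `b`, `c`. Those values are illustrative assumptions, and real SSM layers use vector states and learned parameters.

```python
def ssm_scan(inputs, a=0.9, b=0.1, c=1.0):
    """Discrete linear state space recurrence with a scalar state:

        h_t = a * h_{t-1} + b * x_t
        y_t = c * h_t

    One pass over the sequence, one update per element, and a state
    whose size does not depend on the sequence length.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = a * h + b * x
        outputs.append(c * h)
    return outputs

# A single "January" event followed by silence: its trace is still
# present in the state several steps later, only attenuated.
print(ssm_scan([1.0, 0.0, 0.0, 0.0]))
```

The loop touches each element exactly once, so the work grows in step with the length of the input. The price is that the whole past has to fit into the compressed state `h`.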

The efficiency of SSM models is not just theory. The first major breakthrough in this direction was achieved with the advent of the Mamba architecture. Its key innovation is the "selective state space" mechanism, which allows the model to dynamically adapt its parameters based on the incoming data stream. More simply, Mamba knows how to selectively focus on important information and discard irrelevant "noise," making it ideal for working with extremely long sequences where key signals can be buried under tons of clutter.
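Selectivity can be illustrated by letting the update strength depend on the input itself. The sketch below is a toy scalar analogue, not Mamba's actual parameterization. The step size `delta` is computed from the input with hypothetical constants `w` and `bias` (stand-ins for learned parameters), and I use the input's magnitude as a crude proxy for "importance".

```python
import math

def softplus(z):
    return math.log1p(math.exp(z))

def selective_scan(inputs, w=4.0, bias=-2.0):
    """Toy input-dependent recurrence (scalar state).

    delta = softplus(w * |x| + bias)   # input-dependent step size
    keep  = exp(-delta)                # how much old state survives
    h_t   = keep * h_{t-1} + (1 - keep) * x_t

    Large delta: the state is mostly overwritten by the current input.
    Small delta: the input is mostly ignored and the state is kept.
    """
    h = 0.0
    states = []
    for x in inputs:
        delta = softplus(w * abs(x) + bias)
        keep = math.exp(-delta)
        h = keep * h + (1.0 - keep) * x
        states.append(h)
    return states

# One strong signal, then low-amplitude clutter.
print(selective_scan([1.0, 0.02, -0.03, 0.01, 0.0]))
```

The strong first input is written into the state almost in full, while the small values that follow barely move it.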

The emergence of Mamba opened up a whole new horizon. If MoE models focus on the efficiency of token processing, SSMs focus on the efficiency of memory. Linear complexity paves the way for analyzing infinite data streams, making them ideal for domains such as audio processing, log analysis, or genome sequencing.

By September 2025, this philosophy had already spawned a whole family of powerful architectures:

- Mamba-2: This is not a commercial product, but a fundamental open-source architecture that serves as the technological base for the entire SSM direction. Mamba-2 is the most advanced "pure" SSM model, demonstrating performance on par with transformers with significantly greater efficiency.

- Nemotron-H (NVIDIA): This is a family of hybrid models (Mamba-Transformer) developed by NVIDIA to achieve maximum inference efficiency. By replacing up to 92% of expensive attention layers with ultra-fast Mamba-2 blocks, engineers achieved a 3x speedup compared to similar transformers (e.g., Llama 3.1), while maintaining high quality. Thanks to optimization for NVIDIA GPUs, Nemotron-H has become a key tool for tasks requiring high throughput and long context handling.

- Jamba (AI21 Labs): The first widely known "hybrid monster" that successfully combined SSM layers (based on Mamba), classic Transformer blocks, and MoE layers. It proved the viability of such an approach, taking the best from three worlds: SSM efficiency, Transformer reasoning power, and MoE knowledge breadth. Its characteristics (52 billion total parameters with 12 billion active, 256k context) and ability to fit on a single 80GB GPU card became a vivid demonstration of the engineering future.

Moreover, the SSM philosophy is already extending beyond just language models. Analysis of recent publications shows that Mamba is becoming a new universal "LEGO block" for a wide variety of domains. For example, VG-Net uses it for change detection in satellite images, and Mamba-CNN combines it with convolutional networks for image analysis. This suggests that SSM is not just a replacement for Transformers, but a new fundamental component that will allow engineers to create incredibly efficient models for a wide range of tasks.

The Crossroads and the Price of Complexity

So, we stand at a crossroads. Before us are two clearly defined paths leading out from under the yoke of "The Tyranny of the Square." The first path, MoE, scales knowledge, creating a colossal encyclopedia model from an ensemble of narrow specialists who know everything in their field. The second path, SSM, scales context, allowing the model to remember and link together gigantic volumes of information without losing that very "Ariadne's thread." Only the second makes compute grow linearly with sequence length; the first cuts the compute spent per token but, as noted earlier, leaves the quadratic cost of attention in place. Both open new horizons. But, as any engineer knows, there are no free lunches in our world. Everything has a price.

Behind every new elegant architecture lie its own "engineering demons": practical problems that can offset its theoretical grace and that development teams "in the trenches" have to tame.

MoE's Pain: As we already mentioned, the main demon of this architecture is the gigantic VRAM footprint required for inference. Even if you use only 10% of the total number of experts to process a single token, you still have to load all 100% of the parameters into the GPU memory. This is like renting an entire business center with all its inhabitants just to call one specialist. Furthermore, MoE models are significantly more complex to train. The problem of load balancing arises: you need to ensure that the router doesn't send all requests to the same "popular" experts, leaving others idle. This requires complex algorithms and increases the overhead of communication between parts of the model.
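One widely used way to discourage the router from overloading a few experts is an auxiliary loss of the form `N * sum(f_i * P_i)`. Here `f_i` is the fraction of tokens sent to expert `i` and `P_i` is the mean router probability for that expert. The sketch below computes that quantity for hand-made inputs. The article does not say which balancing scheme any of the listed models uses, so treat this as one illustrative option.

```python
def load_balance_loss(assignments, router_probs, n_experts):
    """Auxiliary balancing term: n_experts * sum_i f_i * P_i.

    assignments:  chosen expert index for each token (top-1 routing)
    router_probs: per-token list of router probabilities over experts
    The value is 1.0 for perfectly uniform routing and grows as
    traffic and probability mass concentrate on few experts.
    """
    n_tokens = len(assignments)
    f = [assignments.count(i) / n_tokens for i in range(n_experts)]
    p = [sum(row[i] for row in router_probs) / n_tokens
         for i in range(n_experts)]
    return n_experts * sum(fi * pi for fi, pi in zip(f, p))

# Balanced: four tokens spread evenly over four experts.
balanced = load_balance_loss(
    [0, 1, 2, 3], [[0.25, 0.25, 0.25, 0.25]] * 4, n_experts=4)

# Collapsed: every token goes to the same "popular" expert.
collapsed = load_balance_loss(
    [0, 0, 0, 0], [[0.7, 0.1, 0.1, 0.1]] * 4, n_experts=4)

print(balanced, collapsed)
```

Adding such a term to the training objective penalizes the collapsed case, at the cost of one more hyperparameter to tune.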

SSM's Pain: On the other hand, SSM models are the "new kids on the block." Despite all their power and potential, as of September 2025, they do not yet have as extensive and mature an ecosystem as Transformers. For an engineer, this means fewer ready-made, optimized libraries, fewer ready-made recipes, and less accumulated community experience in solving typical problems. Implementing SSM is like building on a brand-new, ultra-efficient, but not yet fully understood composite material, while the entire industry has spent decades perfecting work with reinforced concrete.

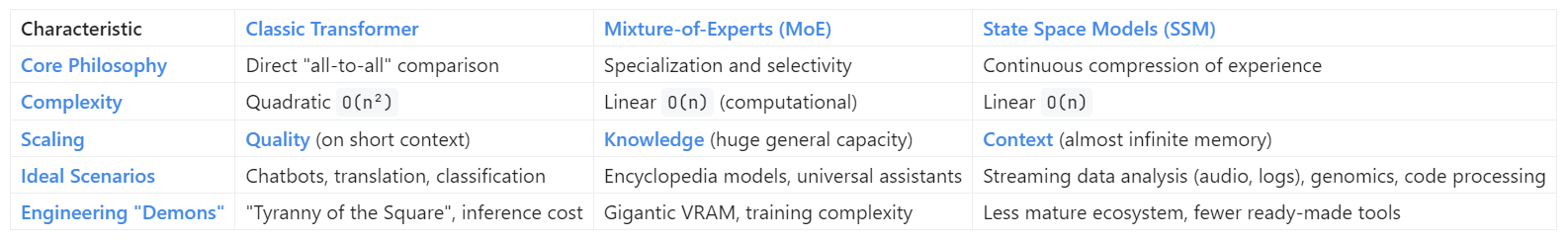

To clearly evaluate this crossroads and make an informed choice, let's summarize the key differences in one table.

Twilight of an Empire and a New Era of Modularity

We are witnessing not just an evolution, but the end of an era where a single architecture dominated everything. The Transformer was like a Roman aqueduct—an ingenious, monolithic structure that solved one grand problem and delivered "water" (attention) to all parts of the data empire. It was such a powerful and universal solution that for many years it became synonymous with the very idea of state-of-the-art AI. But we are no longer building aqueducts. Tasks have become more complex, more diverse, and the monolithic approach has exhausted itself, hitting a wall of economic and computational reality.

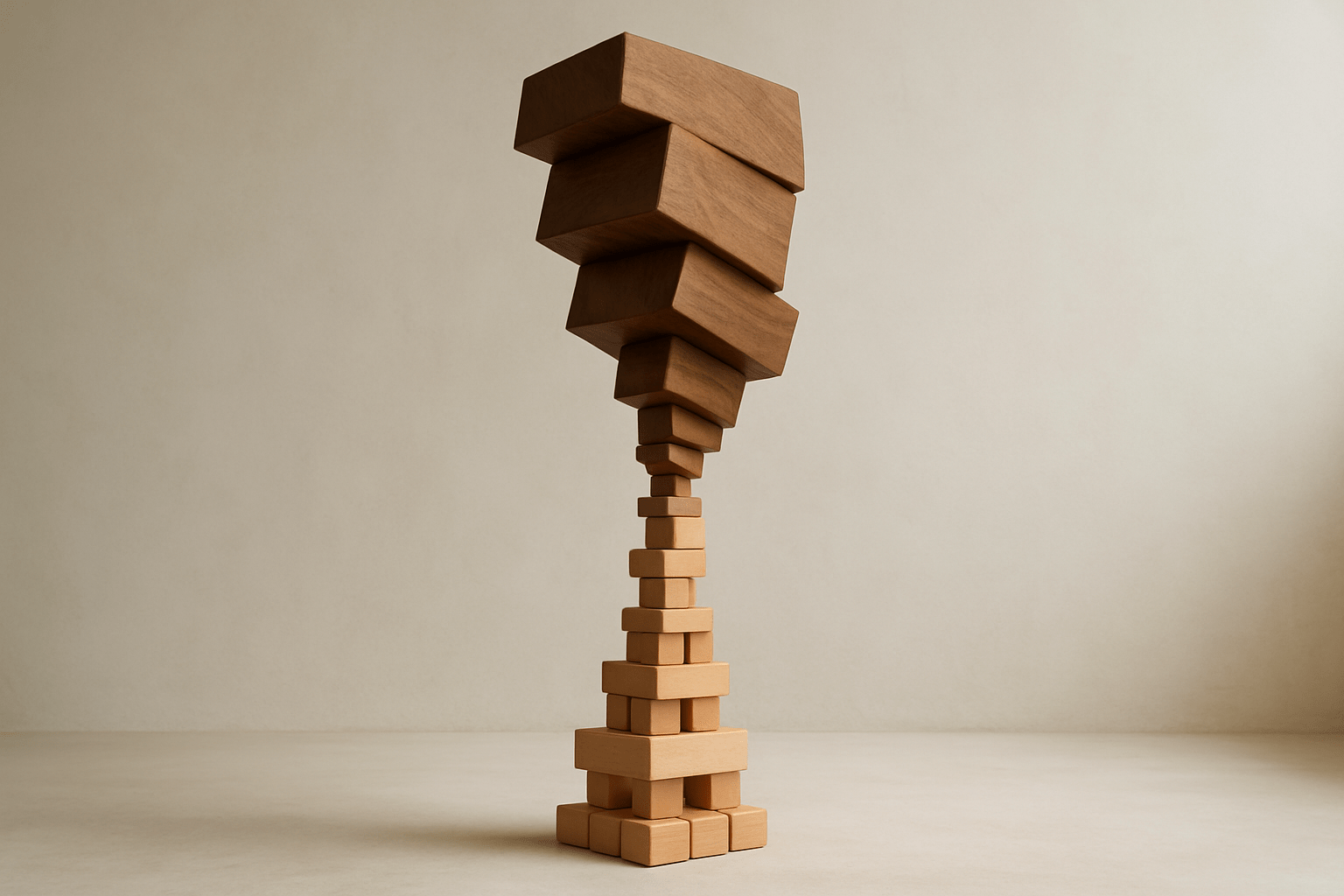

An era of modularity is dawning, where AI architects will, as with LEGO, assemble models from different fundamental blocks, tailoring the architecture to the specific task. The future of AI is not a new monolith, but a complex, heterogeneous system.

To continue the analogy, we are moving from building aqueducts to designing a modern city. In this city, there is a place for everything. There are towering skyscrapers (MoE) that allow a huge number of "residents" (knowledge) to be concentrated on a single patch of land. There are high-speed highways (SSM) that connect distant areas with linear efficiency, allowing information to travel vast distances without traffic jams. And, of course, there are cozy parks (classic attention) in this city—small spaces where local tasks can be solved with maximum quality and comfort.

No one in their right mind would build a city solely of skyscrapers or solely of highways. The city's strength lies in the synergy of its diverse parts. Likewise, the strength of future AI systems will not lie in choosing one "right" path, but in combining them intelligently. The future belongs to hybrids that can combine the strengths of different approaches, creating architectures that simultaneously know a lot, remember for a long time, and reason accurately.

The Architect's Compass: Four Rules for a New Era

How, then, do you avoid getting lost at this crossroads, where the old empire has fallen and new states are only forming? How should an AI systems architect make the right choice today? Instead of complex theory, I propose a simple compass: four heuristics that distill the analysis above and will help you choose the right path.

- Rule of Maximum Quality: Does your task demand uncompromising accuracy on a short or medium context (say, up to 32 thousand tokens)? These are classic tasks like chatbots, classification, or translation. Don't rush to bury the old guard. The Transformer is still your king. Its ecosystem is the most mature, its tools are polished to a shine, and its quality over short distances is still the gold standard.

- Rule of Bottomless Knowledge: Do you need an encyclopedia model capable of answering highly diverse queries from different knowledge domains with high accuracy? Your choice is MoE. This architecture will give you access to a colossal volume of "passive" knowledge at relatively low (compared to gigantic monolithic models) inference costs. But be prepared to pay the price for this power—a high VRAM footprint.

- Rule of Infinite Memory: Is your task the analysis of long sequences, be it code, audio, time series, or server logs? Are you working with streaming data that has no clear end? Look towards SSM. Its linear complexity is not just an advantage; it is the only physically and economically sensible path to solving such problems.

- Rule of Hybrids: Is your task complex and multifaceted? Does it require deep knowledge, long context, and precise reasoning? Don't look for a "silver bullet" in a single architecture. Explore hybrids like Jamba. The future is about combining. It is at the intersection of different approaches that the most effective and groundbreaking solutions are born.
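For those who think better in code, the four rules can be condensed into a small decision function. This is only one possible reading of the compass. The function name, its parameters, and the exact way the rules are combined are my own illustrative choices, and the 32,000-token threshold simply reuses the rough figure from the first rule.

```python
def suggest_architecture(context_tokens,
                         needs_broad_knowledge=False,
                         streaming=False):
    """Map rough task traits to a starting point, per the four rules.

    Returns one of: "transformer", "moe", "ssm", "hybrid".
    A heuristic for orientation, not a substitute for benchmarking.
    """
    long_context = streaming or context_tokens > 32_000
    if long_context and needs_broad_knowledge:
        return "hybrid"        # Rule of Hybrids
    if long_context:
        return "ssm"           # Rule of Infinite Memory
    if needs_broad_knowledge:
        return "moe"           # Rule of Bottomless Knowledge
    return "transformer"       # Rule of Maximum Quality

print(suggest_architecture(8_000))
print(suggest_architecture(8_000, needs_broad_knowledge=True))
print(suggest_architecture(2_000_000))
print(suggest_architecture(2_000_000, needs_broad_knowledge=True))
```

Real projects will weigh more factors (budget, latency, available tooling), but the order of the checks mirrors the priorities of the compass.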

The Tyranny of the Square has been overthrown. The simple times when any problem could be solved by adding another legion to the Transformer army are over. We are entering an era of hybrid wars and asymmetric responses, where strategy and elegance of solution are more important than size. The old imperative "scale up" is dead. Are we ready for the new one—"combine"?

Stay curious.