Map of the Artificial Intelligence World: A Guide to Reality-Changing Technologies

We live in the era of AI. These two letters follow us everywhere: in news headlines, in marketing brochures, and in the description of every second startup. But behind this short and already hackneyed acronym lies an entire universe of technologies: machine learning (ML), deep learning (DL), neural networks, natural language processing (NLP), computer vision (CV)... In this kaleidoscope of terms, even a technical specialist can easily get lost, let alone everyone else. Hype mixes with reality, and possibilities with outright myths.

I propose to bring order to this knowledge. This article is your personal map and guide to the world of modern artificial intelligence. My goal is not just to list definitions, but to show how individual, seemingly disparate "tools" and "musicians" come together to form an incredibly powerful and well-coordinated "orchestra" capable of solving problems that seemed like science fiction just ten years ago. We will analyze not only "what" and "how," but also "why" and "at what cost." By the end of this journey, you should have a complete, structured picture of the AI world in your mind, allowing you to see through the technologies.

This article is for anyone who wants to stop confusing apples and oranges, or machine learning with the Terminator, and finally understand how the world of modern AI technologies is truly structured.

Laying the Foundation: AI, ML, DL — The Main Matryoshka Doll

Before building a skyscraper, you need to lay a strong foundation. In the world of AI, this foundation is a clear understanding of three key terms: AI, ML, and Deep Learning. You can compare them to a matryoshka doll. One concept is nested within another, and it's important not to confuse the largest doll with the smallest.

As I wrote in my article "What 'Artificial Intelligence' Really Is," the separation of reality and myths begins right here.

- AI (Artificial Intelligence) — This is the largest matryoshka doll. It is a general term for the entire scientific field whose goal is to make machines perform tasks requiring human intelligence. The concept of AI was born back in the 1950s and includes everything from rule-based systems (80s expert systems) to modern neural networks. If a machine plays chess, recognizes speech, or recommends a movie to you — it all falls under the umbrella term AI.

- ML (Machine Learning) — A smaller matryoshka doll, nested within AI. This is the main engine of modern AI. The key difference between ML and classical programming is that we don't write rigid instructions like "if A, then B." Instead, we create an algorithm and "feed" it a huge amount of data, on which it independently learns to find patterns. The system is not programmed, but trained. This is a fundamental paradigm shift.

- DL (Deep Learning) — The smallest, but also the most powerful matryoshka doll within ML. This is a subfield of machine learning that uses special architectures for training — deep neural networks (more on them later). It is thanks to breakthroughs in Deep Learning that we today see such wonders as photorealistic images from text descriptions, near-perfect real-time translation, and chatbots capable of meaningful dialogue.

So, let's remember: any Deep Learning is Machine Learning, and any Machine Learning is AI. But not vice versa. When someone says "we implemented AI," it's worth clarifying which matryoshka doll they are referring to. Most often, it will be ML, and at the cutting edge of progress — DL.
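The difference between "programmed" and "trained" described in the ML item above can be sketched in a few lines of Python. Everything here is a toy assumption for illustration: the link counts, the hand-picked threshold of 5, and the function names. Real systems learn far more than a single number, but the shift is the same: the rule comes from labeled data rather than from a human.

```python
# Toy contrast: a hand-written rule vs. a rule learned from labeled data.
# All numbers and names are illustrative assumptions.

def programmed_is_spam(num_links: int) -> bool:
    """Classical programming: a human picks the threshold."""
    return num_links > 5


def train_threshold(examples: list[tuple[int, bool]]) -> int:
    """'Training': try every candidate threshold and keep the one
    that misclassifies the fewest labeled examples."""
    candidates = sorted({links for links, _ in examples})
    best_threshold, best_errors = candidates[0], len(examples) + 1
    for threshold in candidates:
        errors = sum((links > threshold) != is_spam for links, is_spam in examples)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


# Labeled data: (number of links in the email, is it spam?)
labeled_emails = [(0, False), (1, False), (2, False), (3, True), (4, True), (8, True)]

learned_threshold = train_threshold(labeled_emails)


def learned_is_spam(num_links: int) -> bool:
    """The same kind of rule, but its threshold came from the data."""
    return num_links > learned_threshold
```

On this made-up data the hand-written rule misses an email with 4 links, while the learned rule flags it, because the data itself showed that spam starts at around 3 links.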

Recipe for Revolution: Three Ingredients That Changed Everything

A logical question: if the concept of AI has existed for 70 years, why did the "explosion" happen precisely in the last 10-15 years? The answer lies in a perfect storm — the confluence of three key factors that became the recipe for the current technological revolution.

- Ingredient #1: Big Data. Machine learning, as we have discovered, feeds on data. And in the 21st century, humanity began to generate this data on an industrial scale. Social networks, smartphones, IoT devices, digitized archives — all of this created unprecedented oceans of information. Data is the fuel for AI. Without it, even the most perfect algorithm is a powerful engine without a drop of gasoline.

- Ingredient #2: Computational Power (GPU). Deep learning requires colossal computations. Neural networks consist of millions (and now billions) of parameters that need to be constantly recalculated during training. And here, unexpectedly, the gaming industry came to the rescue. It turned out that graphics processing units (GPUs), designed for rendering complex 3D graphics, are ideally suited for the parallel computations required by neural networks. Their architecture, optimized for simultaneously performing thousands of simple operations (matrix multiplications), became the "muscles" that allowed AI models to grow in complexity and power.

- Ingredient #3: Algorithmic Breakthroughs. With fuel (data) and muscles (GPU), only an efficient "engine" was missing. And it appeared. Starting in 2012 (the AlexNet architecture, which won the ImageNet competition) and ending with the 2017 revolution (the "Attention Is All You Need" paper from Google, introducing the Transformer architecture), a series of fundamental breakthroughs occurred in the algorithms themselves. Transformers, in particular, became the game-changer for working with sequences (text, speech), underpinning all modern large language models like GPT-4 and Claude.

These three components — data, hardware, and algorithms — created the synergy that brought us to today.
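To make the GPU point from the list above a little more concrete, here is a minimal pure-Python sketch of a matrix multiplication. The input and weight values are made up. The thing to notice is that every output cell is its own independent dot product, so nothing stops thousands of cores from computing them at the same time; this version simply does them one by one on the CPU.

```python
# Each output cell of a matrix product is an independent dot product,
# which is why the work spreads well across many GPU cores.
# This pure-Python version computes the cells sequentially.

def matmul(a, b):
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):          # on a GPU, every (i, j) pair
        for j in range(cols):      # could be computed simultaneously
            result[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return result


inputs = [[1.0, 2.0], [3.0, 4.0]]    # two samples, two features each
weights = [[5.0, 6.0], [7.0, 8.0]]   # one layer's weights (made-up values)
outputs = matmul(inputs, weights)

# Number of dot products that do not depend on one another.
independent_cells = len(outputs) * len(outputs[0])
```

Here there are only 4 independent cells; in a large neural network layer there can be millions, which is where parallel hardware pays off.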

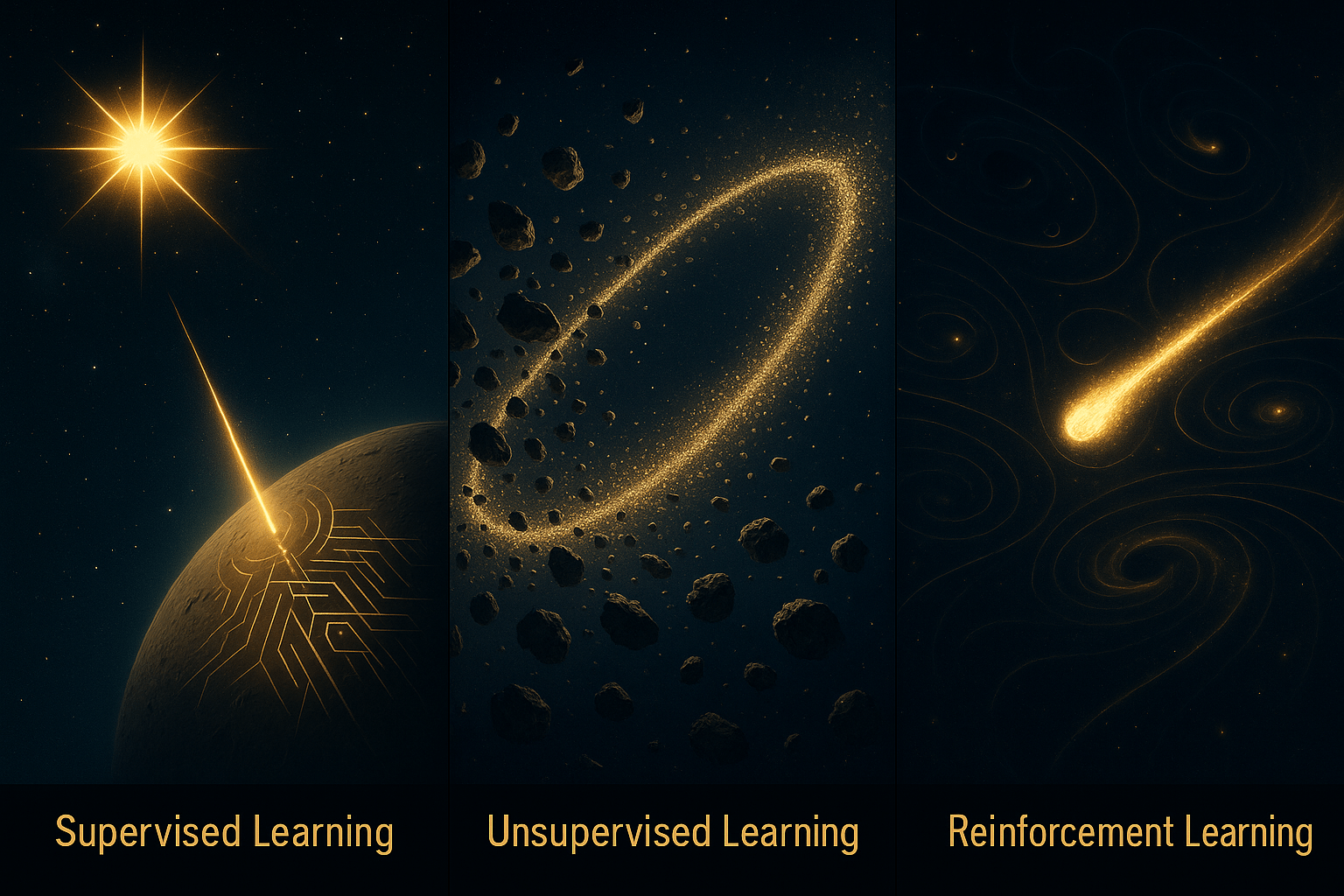

Three Ways of Learning: How Our Orchestra "Rehearses"

So, we have an "orchestra" (a model), and we want to teach it to "play" well. In machine learning, there are three main approaches to this "learning," three different pedagogical methods.

- Supervised Learning. This is the most common and straightforward method. Imagine we give our "musician" a musical score where every note is already labeled and checked. In the world of data, this means we have a labeled dataset: thousands of images tagged "cat" or "dog," millions of emails marked "spam" or "not spam." The model looks at the input data (image) and the correct answer (label), and then tries to adjust its internal parameters so that its own answer matches the "correct" one. It repeats this millions of times until it learns to do it with high accuracy.

- Unsupervised Learning. But what if we don't have a "score"? What if we have a huge amount of data, but no one has labeled it? This is where unsupervised learning comes into play. The analogy with an orchestra: musicians simply gather together and start improvising, listening to each other. Gradually, they themselves, without a conductor, find harmonious combinations and group themselves by sound. In practice, this means that the algorithm itself searches for hidden structures and patterns in unlabeled data. A classic example is clustering: the system can automatically group your customers by purchasing behavior, even if you didn't initially set any criteria.

- Reinforcement Learning. This is the most "human-like" way of learning, based on trial and error. Imagine a conductor who doesn't give a score, but simply says "good" or "bad" after each played fragment. The algorithm (or "agent") performs an action in a certain environment (e.g., makes a move in a game) and receives a "reward" or "penalty" in return. Its goal is to maximize the total reward it collects over time. This is how AlphaGo from DeepMind, which defeated the world champion in Go, honed its play: after an initial stage of learning from human games, it played millions of games against itself, gradually developing winning strategies.
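The reward-driven loop from the reinforcement learning item can be sketched with tabular Q-learning, one classic algorithm of this family (not the method AlphaGo used). The corridor environment, the reward of 1.0 at the goal, and the hyperparameters below are all assumptions chosen to keep the example tiny.

```python
import random

# Tabular Q-learning on a toy corridor: states 0..4, goal at state 4.
# Environment, rewards, and hyperparameters are illustrative assumptions.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)              # index 0 = step left, index 1 = step right
ALPHA, GAMMA = 0.5, 0.9         # learning rate, discount factor


def step(state: int, action_index: int) -> tuple[int, float]:
    """Move the agent; reward 1.0 only when it reaches the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward


def train(episodes: int = 200, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            # Explore with uniformly random moves. Q-learning is off-policy,
            # so it still learns the value of acting greedily.
            action = rng.randrange(2)
            next_state, reward = step(state, action)
            target = reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = train()
# Greedy policy: in each non-goal state, pick the action with the higher value.
policy = ["right" if q[1] > q[0] else "left" for q in q_table[:GOAL]]
```

Nobody tells the agent that "right" is correct. It only ever sees rewards, and the preference for moving right emerges from the value estimates it accumulates.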

The Brain of the System: What Are Neural Networks?

We have already mentioned neural networks several times as the basis for Deep Learning. Let's look under the hood and understand what they are, intuitively.

Neural Networks are computational models inspired by the structure of the human brain. They consist of many interconnected "neurons" organized into layers. Each neuron receives signals from neurons in the previous layer, performs a simple mathematical transformation on them, and passes the result further.

- Input layer receives raw data (e.g., image pixels).

- Hidden layers (there can be from one to hundreds) perform the main "magic," finding increasingly complex and abstract patterns.

- Output layer produces the final result (e.g., the probability that an image contains a cat).

"Deep Learning" means using networks with a large number (depth) of hidden layers.
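For readers who like to see the layers in action, here is a minimal sketch of a forward pass through a network with one hidden layer. The weights and biases are made-up numbers (training would normally set them), and the sigmoid nonlinearity is just one common choice, so treat this as an illustration rather than a realistic model.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """One layer: every neuron takes a weighted sum of all inputs,
    adds its bias, and applies a nonlinearity (sigmoid here)."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Made-up parameters; training would normally set these values.
hidden_weights = [[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]]   # 3 neurons, 2 inputs each
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[1.0, -1.0, 0.5]]                        # 1 neuron, 3 inputs
output_biases = [0.0]

pixels = [0.9, 0.1]                                        # input layer: raw data
hidden = dense_layer(pixels, hidden_weights, hidden_biases)
cat_probability = dense_layer(hidden, output_weights, output_biases)[0]
```

A "deep" network is the same idea repeated: the output of one `dense_layer` call becomes the input of the next, many times over.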

Among the wide variety of neural network architectures, one stands out for kick-starting the boom in generative AI:

Generative Adversarial Networks (GANs). This is an ingenious concept that can be described as a "creative duo" of two neural networks.

- Generator: This network is the "forger." Its task is to create new data indistinguishable from real data (e.g., drawing faces of people who never existed).

- Discriminator: This network is the "forensic expert." Its task is to look at an image (real or generated) and determine whether it's a fake or not.

They train together in a constant struggle: the Generator tries to deceive the Discriminator, and the Discriminator tries to catch it in a lie. As a result of this competition, both networks become incredibly good at their tasks. It was GANs that for a long time were the main technology for generating photorealistic images.
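The tug-of-war between the forger and the expert can be written down as two loss functions. The sketch below uses the commonly taught binary cross-entropy form (with the "non-saturating" generator loss); the probability values are invented, and no actual networks are trained here.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when the discriminator scores real data near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Low when the discriminator is fooled into scoring a fake near 1
    (the 'non-saturating' form of the generator objective)."""
    return -math.log(d_fake)


# d_real / d_fake: the discriminator's estimated probability that a
# real / generated sample is real. Values are made up.
caught = {"d_real": 0.9, "d_fake": 0.1}   # the forger is easily spotted
fooled = {"d_real": 0.9, "d_fake": 0.9}   # the forger deceives the expert

g_loss_when_caught = generator_loss(caught["d_fake"])
g_loss_when_fooled = generator_loss(fooled["d_fake"])
d_loss_when_catching = discriminator_loss(**caught)
d_loss_when_fooled = discriminator_loss(**fooled)
```

The two losses pull in opposite directions: the situation that makes the Generator's loss small makes the Discriminator's loss large, and vice versa. Training alternates between nudging each network to lower its own loss.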

The Orchestra Assembled: Technologies for Solving Real-World Problems

Now that we have all the components, let's see how our "orchestra" performs specific pieces. For a long time, different sections of the orchestra — "strings" for text, "winds" for images — played according to their own scores. But the main trend of 2025 is multimodality. Models are learning to understand the world in its entirety, simultaneously processing text, images, audio, and even video. This is no longer just separate sections, but a single, well-coordinated collective, capable, for example, of looking at a picture and describing it in words, or generating a video from an audio track.

This is not just a technological gimmick, but a key growth driver for the entire industry. According to a report by Precedence Research, the global multimodal AI market, valued at $2.51 billion in 2025, is projected to grow almost 17-fold, reaching $42.38 billion by 2034. Technically, this is achieved through complex architectural solutions in which, for example, hierarchical features are extracted from images and "woven" into the language model through cross-attention mechanisms. Meta's MLLaMA model, which uses a 32-layer visual encoder, is a prime example.
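The cross-attention "weaving" mentioned above can be sketched in plain Python. This is a stripped-down illustration under several assumptions: the vectors are invented 2-dimensional toys, and the learned projection matrices that real models apply to queries, keys, and values are left out. It is not a description of how any particular model implements it.

```python
import math


def softmax(scores):
    peak = max(scores)                      # subtract the max for stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text_queries, image_keys, image_values):
    """Each text token builds its output as a weighted mix of image values;
    weights come from query-key dot products scaled by sqrt(dimension)."""
    scale = math.sqrt(len(image_keys[0]))
    outputs, all_weights = [], []
    for query in text_queries:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in image_keys]
        weights = softmax(scores)
        mixed = [
            sum(w * value[d] for w, value in zip(weights, image_values))
            for d in range(len(image_values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up vectors: 2 text tokens attend over 3 image patches.
text_queries = [[1.0, 0.0], [0.0, 1.0]]
image_keys = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
image_values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

outputs, weights = cross_attention(text_queries, image_keys, image_values)
```

In this toy setup the first text token puts most of its attention on the first image patch and the second token on the second patch, which is the sense in which visual information gets "woven" into each word's representation.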

Let's look at the key "sections" of this multimodal orchestra.

The String Section (working with language)

- NLP (Natural Language Processing): This is the general term for the entire group of technologies that allow computers to work with human language. These are the "violins, violas, and cellos" of our orchestra.

- ASR (Automatic Speech Recognition) / TTS (Text-to-Speech): These are the "ears" and "voice" of the system. ASR converts your speech into text (hello, Siri and Yandex's Alice!), and TTS does the reverse, turning text into spoken audio.

- NLU (Natural Language Understanding) / NLG (Natural Language Generation): This is the "brain" of the language section. NLU is responsible for understanding the meaning of what is said, extracting entities and intentions. NLG, in turn, is responsible for formulating meaningful and grammatically correct sentences.

Practical Example: In March 2025, VK introduced the AI Persona service. It uses large language models to create detailed audience portraits for marketers. The system combines anonymized data from VK services with customer data and, using NLU, analyzes the interests and psychographics of segments. Then, with the help of NLG, it generates personalized advertising messages for each segment. This is a perfect example of the synergy of NLP and big data to solve a specific business problem.

The Wind Section (working with visual information)

- CV (Computer Vision): An umbrella term for image and video analysis technologies. The "trumpets, trombones, and flutes" of our orchestra.

- OCR (Optical Character Recognition): A specific and very old CV task — "reading" text from images, scans, or photographs. When you scan a document and get editable text, that's OCR at work.

- Image Generation: The most fashionable part of CV today. Models like Midjourney, DALL-E, and Stable Diffusion, which "draw" pictures based on your text description.

The Conductor (Data Scientist)

And above all this splendor stands a human — the Data Scientist or ML Engineer. This is the "conductor" who selects the right instruments (models and architectures), chooses the repertoire for them (data), conducts rehearsals (training), and analyzes the final sound, deciding how to make it even better. Without this specialist, even the most powerful orchestra will play out of tune and off-beat.

Economics of Giants: The Double Standards of AI

We admire the capabilities of GPT-4 or Claude 3, but rarely think about their economics. Those economics are fundamentally changing the IT landscape, and they run on a double standard: astronomically expensive training and rapidly cheapening usage.

- Training Cost: Training one large foundation model is a colossal expense. Estimates for GPT-4's training cost range from $60 million to more than $100 million, and that's just for computational resources. This reality has given rise to the paradigm of "foundation models": giants (OpenAI/Microsoft, Google, Anthropic) create universal "power stations," and others connect their "appliances" to them via API.

- "Computational Poverty" and Architectural Breakthroughs: It would seem that this dooms everyone else to "computational poverty," leaving no chance for startups and academia. But here, architectural ingenuity comes into play. The most striking example in 2025 is the Chinese model DeepSeek R1. According to analysis by Bruegel, it was trained for only $5.6 million, dozens of times cheaper than Western counterparts (though some sources claim $1.3 billion was spent). The secret lies in the Mixture-of-Experts (MoE) architecture, which activates only a small subset of the model's "expert" sub-networks for each piece of input rather than the whole model, making it far cheaper to run. In the article "MiniMax-M1: Dissecting an Architecture that Breaks Scaling Laws," I discussed an architecture that allows models to reason at length and work with gigantic amounts of data. As a result, its API cost is 93% lower than competitors', with comparable quality on many tasks. This suggests that clever architecture can matter more than brute computational force.

- Plummeting Usage (Inference) Cost: And here is the second standard of this economy. While training is the domain of the elite, using models is becoming increasingly accessible. According to the AI Index Report 2025 from Stanford, the inference cost for a GPT-3.5 level system fell more than 280-fold in about two years (from late 2022 to late 2024). This was made possible by algorithm optimization, gains in hardware energy efficiency (about 40% per year), and the development of more compact, yet powerful, open-source models that have nearly caught up in quality with their closed counterparts.

- Global and Local Ecosystems: This economy gives rise not only to global giants but also to powerful local players. Russian companies are actively building their own foundation models. In 2025, Yandex introduced YandexGPT with support for up to 32,000 tokens of context and a batch mode for processing large data volumes, and launched a Bug Bounty program for its AI models. Sber demonstrates impressive ROI in AI: according to China Daily, every ruble invested returns seven. Their GigaChat and Kandinsky models are available as open source and have received international recognition, and Russia and China are strengthening cooperation in the field of AI.
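The Mixture-of-Experts idea from the list above, running only part of the model for a given input, can be sketched as top-k routing. This is a toy under clear assumptions: the "experts" are stand-in functions that multiply by a constant, and the gate scores are hard-coded, whereas in a real model both the experts and the router are learned networks. It does not reproduce DeepSeek's or anyone else's actual implementation.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Stand-in for an expert network: here just multiplication by a constant."""
    return lambda x: scale * x


experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]


def moe_forward(x, gate_scores, top_k=2):
    """Run only the top_k highest-scoring experts and mix their outputs."""
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


# Hypothetical gate scores; a learned router would produce these per input.
output, active_experts = moe_forward(x=10.0, gate_scores=[0.1, 2.0, 0.3, 2.0])
```

Only two of the four experts do any work for this input. Scale that up to a model with many experts and the compute per request stays a fraction of what the full parameter count would suggest.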

Tuning and Upgrade: Advanced Concepts

How can we make our already trained "orchestra" even more virtuosic, without retraining it from scratch for hundreds of millions of dollars? There are several elegant techniques for this.

- Transfer Learning: This is arguably the most important concept for practical AI application. Instead of training a model from scratch, we take a model that has already been pre-trained on a gigantic dataset (e.g., a large share of the public internet) and only slightly "fine-tune" it on our smaller, specific dataset. Analogy: we take an experienced virtuoso pianist and quickly teach them to play a new, specific melody. They don't need to re-learn music theory or finger placement. This can save the overwhelming majority of the time and resources.

- Edge AI: Traditionally, computations occur in the cloud. But sometimes, a model needs to work quickly, autonomously, and without sending data over the internet. This is Edge AI. The model is optimized to run directly on your smartphone, in a camera, or in a car. It's like allowing the "musician" to play a solo right in your home, without connecting to a large concert hall. A classic example is Face ID on the iPhone. All processing happens on the device, ensuring speed and privacy.

- Federated Learning: A surprisingly elegant idea that has grown from an experimental technology into a recognized approach. How can a model be improved for everyone without collecting anyone's personal data? In June 2025, the European Data Protection Supervisor (EDPS) issued an official document recognizing federated learning as fully compatible with GDPR and the privacy-by-design principle. The essence of the method: each "musician" (smartphone) locally trains its copy of the model on its own data. Then, it sends to the "center" not the data itself, but only an anonymous "update": a small summary of what it has learned. The central model aggregates thousands of such updates and becomes better for everyone. Studies report model accuracy of 91.2%, a 7.6% increase in task success, and a 41.5% reduction in data breach risk compared to centralized training.
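The federated learning loop described above (train locally, send only an update, aggregate centrally) can be sketched with simple weighted averaging of model weights. The three "devices", their data, and the one-parameter model `y = weight * x` are all invented for illustration, and real deployments add protections such as secure aggregation that are not shown here.

```python
def local_training(weight, data, lr=0.05, steps=5):
    """Gradient descent on mean squared error for the model y = weight * x,
    using only this client's private (x, y) pairs."""
    for _ in range(steps):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_round(global_weight, clients):
    """Each client trains locally; the server sees only the resulting weights
    and averages them, weighted by how much data each client holds."""
    updates = [(local_training(global_weight, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


# Three hypothetical devices. All data follows y = 3x, but the raw pairs
# are only ever touched inside local_training.
clients = [
    [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5)],
    [(2.0, 6.0), (4.0, 12.0)],
]

global_weight = 0.0
for _ in range(20):
    global_weight = federated_round(global_weight, clients)
```

After a few rounds the shared weight settles at the slope hidden in everyone's data, even though the server only ever handled numbers like "my weight is now 2.97", never the data points themselves.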

Conclusion

I hope that after this journey, the map of the AI world has become much clearer for you. We didn't just go through terms; we saw how basic concepts (AI, ML, DL) give rise to learning methods (supervised, unsupervised, reinforcement), which are embodied in specific architectures (neural networks, GANs) and technologies (NLP, CV). We saw how this technological pyramid rests on a foundation of data, hardware, and algorithms, and understood what economic forces are shaping today's landscape.

The main conclusion I want you to take away is this: artificial intelligence is not magic. It is the result of decades of scientific work and the recent perfect confluence of technological, algorithmic, and economic factors. Understanding this entire "map" allows you to see not only the hype and possibilities, but also the real balance of power, limitations, and the cost of progress. We see a world where multimodality is becoming the norm, where the cost of training and using models operates under different laws, and where powerful national AI ecosystems are emerging alongside global giants.

Now, knowing not only "what," but also "why" and "at what cost," you can much more consciously evaluate news and products from the world of AI. Try an experiment: look at your smartphone right now. Which of these technologies are already working for you? Face recognition, text input suggestions, smart photo sorting, voice assistant... The orchestra is already playing. And now you know what instruments it consists of.

Stay curious.