AI

LLM

Selfhosting

Local AI: The Pragmatic Guide to Running LLMs on Your Own Hardware

If you've ever worked with cloud LLMs, you've probably experienced that strange feeling: you seem to be creating something of your own, but in fact, you remain a guest in someone else's house. You can compare it to ordering food from a trendy restaurant: incredibly convenient, fast, and usually delicious, but you don't control the quality of the ingredients or the cooking process. Running an LLM on your own hardware, in my understanding, is a transition from being a guest to owning your own, professionally equipped "AI kitchen." I'll say right away: this is not a "silver bullet" or a universal solution for everyone. But for certain tasks and specific groups of users, it becomes not just preferable, but the only correct one.

After analyzing dozens of community discussions and technical reports, I have concluded that in 2025, local AI is primarily a tool for those who value sovereignty, control, and efficiency. To understand for whom this game is truly worth the candle, I have divided all adherents of local deployment into four large groups.

The first and most obvious group is, of course, developers and IT specialists. For us, a local LLM is not a toy, but a powerful working tool, another utility in our arsenal. According to discussions, models tailored for code, such as Qwen3-Coder or DeepSeek Coder, are used for quickly writing boilerplate, debugging complex sections, prototyping new features, and vibe coding. And all this—without monthly bills for every token sent and without dependence on an external API. This not only saves money but also makes the workflow more flexible, predictable, and, importantly, faster.

The second group, which I believe is the main driving force behind this entire movement, is enthusiasts and researchers. I often spend time on the r/LocalLLaMA subreddit myself, and it's a true melting pot of ideas. These people are not just passive consumers of technology. They actively participate in creating the ecosystem: experimenting with new models, studying their architectures, and fine-tuning them for their unique, sometimes completely unexpected needs. It is thanks to their curiosity and persistence that we get detailed performance tests, guides, and new applications for AI.

The third category, whose importance in a world of pervasive data leaks grows daily, is privacy-obsessed businesses. Think of lawyers working with confidential contracts, doctors analyzing medical histories, or corporate R&D departments creating breakthrough technologies. For them, transmitting sensitive information to cloud APIs is an unacceptable risk, both from a security and legal standpoint, and from a reputational perspective. Local deployment provides them with a hundred percent guarantee that trade secrets or personal data will never leave the company's secure internal perimeter.

Finally, there is another, no less important group—users "off the grid". I am talking about people working in expeditions, at remote sites, or simply in regions with unstable and expensive internet connections. For them, access to cloud services is a luxury, not a given. Local AI becomes for them not a matter of convenience, but a critically important technology, providing access to modern tools in all conditions.

If you recognize yourself in one of these portraits, or are simply curious about the state of this rapidly developing industry, this article is for you. Let's build your personal "AI kitchen."

Anatomy of Local AI: Technologies, Formats, and Heroes

How is it that huge, multi-billion parameter models, which just yesterday required the power of an entire data center, now fit under our desks? To answer this question, we need to understand the anatomy of this local revolution. I have analyzed the technological basis underlying it and identified three key aspects: the magic of compression, the role of community titans, and the hidden architectural pitfalls that many newcomers stumble upon.

The Magic of Compression and the GGUF Format

Let's start with the main point. The original models born in Google or Meta labs are true giants. They weigh hundreds of gigabytes and store their parameters (weights) in high-precision 16-bit floating-point formats (FP16). Trying to load such a monster into the memory of even the most powerful consumer graphics card with 24 GB of VRAM is like trying to pour water from the ocean into an aquarium.

The solution to this problem was quantization—a key model "compression" method that underlies all local AI. To explain this concept, one can use an analogy from the audio world. Imagine that the original FP16 model is a studio-quality FLAC audio recording. It's flawless but takes up an enormous amount of space. Quantization (for example, to a 4-bit representation) is like smartly compressing that track into a high-quality 320 kbps MP3. Yes, some subtle nuances that only a professional sound engineer on reference equipment would hear are lost. But for 99% of listeners, there's no difference, and the file weighs many times less. The same thing happens with the model: we sacrifice a little mathematical precision of the weights, but we get a huge reduction in memory requirements, with almost no loss in answer quality. You can learn about quantization types here.

But simply compressing the model is not enough. A universal "container" was needed that would allow this slimmed-down model to work efficiently on a hybrid of CPU and GPU, which is a typical configuration for a home PC. This de facto standard became the GGUF format (GPT-Generated Unified Format). This is not just a file with model weights. It is a complete "boot package" that contains everything necessary: the quantized weights themselves, the tokenizer dictionary for converting text to numbers and back, and important metadata about the structure and parameters. Its architecture is designed to efficiently use both fast video memory (VRAM) and slower system RAM, allowing for flexible load distribution.

Titans of the Community

After analyzing dozens of repositories on Hugging Face, I understood one important thing: this entire ecosystem lives and breathes thanks to the efforts of enthusiasts. As an end-user, you don't need to be an expert in model compilation or have access to an H100 cluster. Most likely, you will download a ready-made, perfectly optimized GGUF version from contributors like bartowski, who do a tremendous job of skillfully "packaging" original models into user-friendly files.

And there are entire teams, such as Unsloth, who go even further. They don't just package models; they use an advanced quantization method to optimize the training and inference processes to the limit. The key feature of Unsloth Dynamic 2.0 Quants lies in its "dynamic" approach. Instead of compressing the entire model uniformly, Dynamic 2.0 analyzes each of its layers and intelligently selects the best method and level of quantization for it. Some layers can be compressed more, while layers more critical for model performance retain higher precision. This is the true spirit of open-source in action, where the community makes cutting-edge technologies accessible to everyone.

Hidden Bottlenecks

However, despite all the magic of optimization, there are non-obvious factors that can become the "bottleneck" for your system. The main one is context length (or context window size). Imagine that your graphics card's memory (VRAM) is the area of your desktop. The model itself is a set of folders with documents on it. And the context is the number of folders you keep open simultaneously for work. The larger the context (the longer your conversation with the chatbot), the more "open folders" and the more space they occupy on the desk, i.e., in VRAM.

This leads to local LLM performance often being limited not by pure GPU computational power, but by bandwidth and memory size. This is why overall system balance is so crucial for the efficient operation of GGUF models. Fast RAM (DDR5 will be noticeably better than DDR4) and a multi-core CPU become critically important, as part of the data processing load inevitably falls on them, especially when the model does not fit entirely into VRAM and starts using system memory.

Assembling Your AI Workstation

We've covered the theory and understood that VRAM is the new oil, and system balance is key to success. Now let's move on to the most exciting part—assembling the hardware. I believe that choosing components for local AI isn't just a race for the highest clock speeds and teraflops, but a delicate engineering calculation where video memory capacity often outweighs raw speed. Let's look at current builds, based on different budgets and goals.

Before we dive into specific graphics card models, let's establish the main principle: when building an AI workstation, the primary currency is not teraflops, but gigabytes of video memory (VRAM). It is the amount of VRAM that determines what size models you can comfortably run, while GPU speed affects how quickly you receive an answer (tokens per second).

Level 1: "Apple Ecosystem" — Mac with M2/M3/M4 (24 GB+ Unified Memory)

- For whom: Beginners and professionals already working in the Apple ecosystem. This is, without exaggeration, the ideal "out-of-the-box" start. The main advantage of Macs with Apple Silicon chips is unified memory. CPU and GPU have direct access to a shared pool of ultra-fast RAM, which eliminates traditional "bottlenecks." Thanks to this and the MLX framework, optimized for this architecture, you get an incredibly smooth and seamless experience. You don't need to bother with drivers and settings—everything just works. According to community tests, machines with 24 GB or more unified memory handle most popular models very well.

Level 2: "People's Entry" — Nvidia GeForce RTX 3060 12GB

- For whom: Enthusiasts on a limited budget. This graphics card is a true phenomenon. Despite its age, it remains the best ticket into the world of local AI thanks to its 12 GB of VRAM. On the secondary market, it can be found in the range of 17-20 thousand rubles, giving it the best "price/gigabyte of video memory" ratio. This amount is sufficient for comfortable work with models up to 13 billion parameters, covering a huge range of tasks. I started with one myself and, like many in the community, consider it a more sensible purchase for AI than, for example, the newer RTX 5060, which only has 8 GB of memory on board. Also, if you don't want to bother with used options, you can consider an RTX 5060 with 16GB VRAM, with a budget of 45-50 thousand rubles.

Level 3: "Enthusiast's Gold Standard" — Nvidia GeForce RTX 3090 / 3090 Ti 24GB

- For whom: Serious hobbyists and researchers seeking the ideal balance of price and capabilities. Here we come to the most interesting option. The RTX 3090 is a true "workhorse" for local AI. Yes, in games it lags behind newer generations, but its 24 GB of VRAM is that "gold standard" that opens the door to the world of large models (up to 70B with good quantization). For 70-80 thousand rubles on the secondary market, you get a card that, as users on Reddit rightly point out, "will last another 5+ years," allowing you to experiment with heavy models and long contexts without compromise.

Level 4: "Beyond a Single Card" — Modern GPUs and Custom Rigs

- For whom: Enthusiasts who accept no compromises. Of course, modern cards like the RTX 5070 Ti (16 GB) or the flagship RTX 5090 (32 GB) offer colossal performance. But, as I said, for LLMs, VRAM capacity is often more important than speed. However, when it comes to top-tier builds, balance is key. According to enthusiast reports, a system with 96 GB of fast RAM and an RTX 5080 is capable of delivering 25 tokens per second on a Qwen3-Coder 30B model with a gigantic context of 256 thousand tokens. This once again proves that a powerful GPU must be supported by equally powerful CPU and RAM. You can also build a rig from several graphics cards, for example, one like this or this one.

Level 5: "Commercial Sector" — NVIDIA H100/H200

- For whom: Companies and research centers. This is a different league. Nvidia H100 and H200 are the gold standard for training and inference in data centers. With 80/141 GB of ultra-fast HBM3 memory, they are the number one choice for OpenAI, Anthropic, and all major cloud providers. I mention them here only to show the scale: what we do locally is a miniature copy of the processes happening in these computing monsters.

Level 6: "New Architectures" — Groq and Cerebras

- For whom: A look into the future of AI inference. Lately, I've been seeing more and more how GPU dominance is being challenged by companies with fundamentally different architectures. Groq (not to be confused with Grok by Elon Musk) with its LPU (Language Processing Unit) processor was created for one purpose—to execute language models with the lowest possible latency. Its streaming architecture, similar to a perfectly tuned pipeline, provides record-breaking speed in tokens/sec, which is ideal for chatbots and real-time applications. Cerebras, in turn, went even further by creating the WSE (Wafer-Scale Engine)—a gigantic chip the size of an entire silicon wafer. This eliminates the "bottlenecks" of GPU clusters, as a huge model can fit on a single chip. While these are currently mainly solutions for training foundational models, they clearly show that the future of AI hardware can be very diverse.

According to Openrouter, when using the qwen3-32b model, the Groq provider ensures a speed of over 600 tokens/sec, Cerebras over 1400 tokens/sec, compared to the usual speed of 50-80 tokens/sec.

Choosing Software. From a Simple Click to Full Control

So, our "AI kitchen" is assembled, the hardware is humming in anticipation. Now it's time to choose the main tool—the software that will "cook" our neural network dishes. After analyzing the current state of the ecosystem, I can confidently say: in 2025, the choice has become incredibly rich. Gone are the days when launching a model required manually compiling code and dealing with dozens of dependencies. Today, there are tools for every taste—from elegant applications where everything is done with a single click, to powerful command-line utilities for full control. Your choice will only depend on how deeply you want to dive into the process.

For Beginners and Quick Start (GUI)

If you want to get results here and now, without delving into technical intricacies, your choice is applications with a graphical user interface (GUI). They transform a complex process into an intuitive and user-friendly one.

- LM Studio: I often recommend it to my friends because it's essentially an "LLM browser and manager." It's ideal for non-technical users. Inside, you'll find a convenient search for models from Hugging Face, you can download your favorite with one click, and immediately run it in a chat window. But its main strength is a built-in local API server, compatible with the OpenAI API. This allows you to easily integrate a local model into your own scripts or third-party applications. And LM Studio is also a smart program: it identifies your graphics card and, when selecting a model, provides direct hints on whether the model will fit entirely into fast video memory.

- Jan: I would call this tool the main ideological competitor to LM Studio. It offers similar functionality, but with one key difference—Jan is a fully open-source project. For those who value transparency, the ability to look "under the hood," and support the open-source community, this will be a decisive factor.

- GPT4All: This project takes a different approach. Instead of giving you access to all the models in the world, its team offers a carefully curated and tested list of models that are guaranteed to work well on typical consumer hardware. If you don't want to spend time reading reviews and comparing quantizations, but simply need a ready-made and reliable solution, GPT4All is an excellent choice.

For Developers and Advanced Users (CLI)

If you are a developer, researcher, or simply like to keep everything under control, your path lies in the world of command-line interface (CLI) tools.

- Ollama: I believe this tool has truly revolutionized deployment simplicity. Just one command in the terminal—

ollama run llama3—and Ollama will download, configure, and launch the latest version of Llama 3, bringing up a local API. This is an ideal solution for developers who need to quickly integrate an LLM into their workflow without being distracted by environment setup. In July 2025, the developers released an official desktop application for macOS and Windows. - Llama.cpp: This is not just an application, it is a fundamental technology. Llama.cpp is a high-performance library written in C/C++ that serves as the "engine" for many other programs, including LM Studio and Ollama. Working with it directly provides maximum performance and flexibility. If you need to squeeze every ounce of performance from your hardware, configure every inference parameter, and achieve minimal latency—Llama.cpp is definitely your choice.

- text-generation-webui (Oobabooga): If Llama.cpp is the engine, then Oobabooga is the dashboard of a racing car. It is a powerful web interface that provides access to hundreds of settings for experiments, chat, and even fine-tuning models. One of its killer features is the ability to flexibly distribute model layers between video memory, RAM, and even disk, allowing you to run gigantic models that seemingly cannot fit into your system.

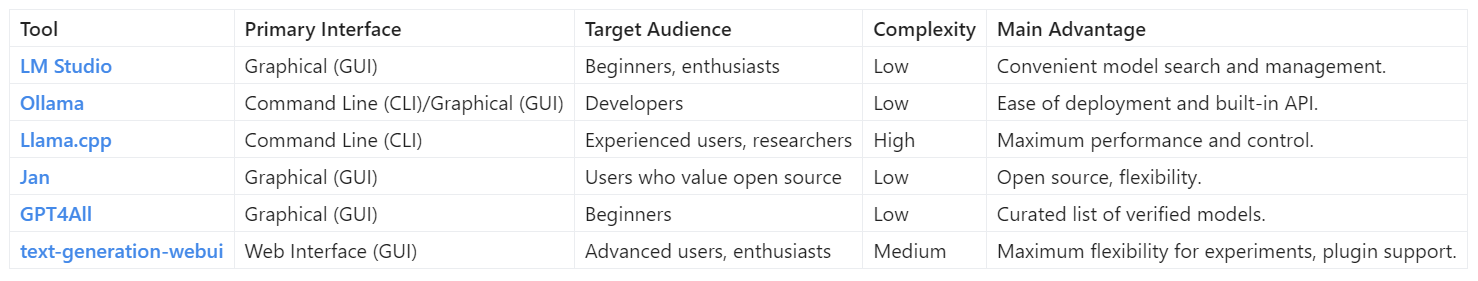

To make it easier for you to navigate this diversity, I have summarized the key characteristics in a simple table:

Your First Launch: Model Recommendations

So, you've chosen your tool from the list above—be it the convenient LM Studio or the Spartan Ollama. Now for the most exciting moment: what to launch first? The world of open-source models is vast, and to keep you from getting lost, here's my personal short-list—three models that perfectly illustrate the capabilities of local AI and work well on various hardware.

- Qwen3-4B: Two 4-billion parameter models from Alibaba with the same architecture but different approaches.

- Instruct-2507 is a "fast and obedient generalist" focused on precise instruction following and concise answers. It doesn't show the reasoning process but immediately provides a result, which is ideal for general tasks.

- Thinking-2507 is a "thoughtful problem solver." This version elaborates on its logical chain before answering, making the process transparent. It is excellent for programming, mathematics, and complex analytical tasks where the reasoning process is important.

- Llama-3.1-8B-Instruct: This is a beautifully balanced generalist that excels at a wide range of tasks: from answering questions and summarizing text to creative work and complex dialogues. Thanks to high-quality human feedback (RLHF), the model understands user queries well and generates quality content.

- Gemma-3n (E2B/E4B): A family of models built on Gemini technologies and specifically optimized for devices with limited resources, such as laptops. The E2B and E4B designations indicate an "effective" size of 2 and 4 billion parameters, achieved through a special MatFormer architecture. Originally, the models are multimodal (can work with text, images, and audio), but popular GGUF versions are currently limited to text. This is an excellent choice for less powerful hardware and experiments.

Tools and Community. Your Helpers

You've chosen your hardware, decided on the software, selected a model, and are just about ready for your first launch. At this stage, the journey into the world of local AI might seem complex and a bit lonely. But I hasten to assure you: you are not alone on this journey. Over the past few years, an entire ecosystem of resources, tools, and most importantly, people ready to help, has grown around this technology. Let me show you a map of this ecosystem.

Where to Find Help and Like-Minded People

If I had to name one single place that is the heart and brain of the entire movement, I would unhesitatingly choose the subreddit r/LocalLLaMA. After analyzing hundreds of threads, I can confidently say that this is not just a forum. It is the main "hub," the nerve center of the community. This is where the latest news about new model releases appears, detailed guides for setting up complex software are published, and performance test results on different hardware are shared. If you encounter a problem that seems insurmountable, be it a mysterious compilation error or unexpectedly slow generation speed, just ask. With high probability, someone has already encountered something similar and is ready to share a solution.

Navigators in the World of Tools

The local AI ecosystem is growing so fast that the number of programs, frameworks, and utilities can be dizzying even for an experienced specialist. To avoid drowning in this diversity, the community has come up with an elegant solution—curated lists, known as "Awesome Lists." I strongly recommend bookmarking repositories like awesome-local-llms or Awesome-local-LLM. Consider them your compass in the world of local AI software. These lists are constantly updated and contain links to everything—from GUI launchers to niche libraries, helping you quickly find the right tool and avoid spending hours on independent searches.

Practical Assistants

Besides global resources, there are many smaller but incredibly useful utilities that simplify the daily life of an AI enthusiast.

- VRAM Calculators: These are your main assistants during the model selection stage. Before downloading a file weighing tens of gigabytes, just visit one of the online calculators (for example, this one or this one). By entering the model parameters (number of parameters, quantization type), you will instantly get an estimate of the required video memory. This avoids the frustrating situation where you downloaded a model only to realize it doesn't fit into your graphics card.

- Hugging Face Hints: The community and model developers do everything to save us from guesswork. On the pages of many popular models on Hugging Face, there is a special "Hardware compatibility" block. It explicitly shows which quantization version (e.g., Q4_K_M) is best suited for which VRAM size (8GB, 12GB, 24GB).

- Interactive Guides in Software: The launch software we discussed in the previous section goes even further. LM Studio, for example, automatically detects your GPU and, when you select a model from the list, provides direct and clear hints. Seeing the message "Full GPU Offload Possible," you can be 100% sure that this model will run as fast as possible, fully loaded into fast video memory. This is a small but very important detail that makes the technology more accessible to everyone.

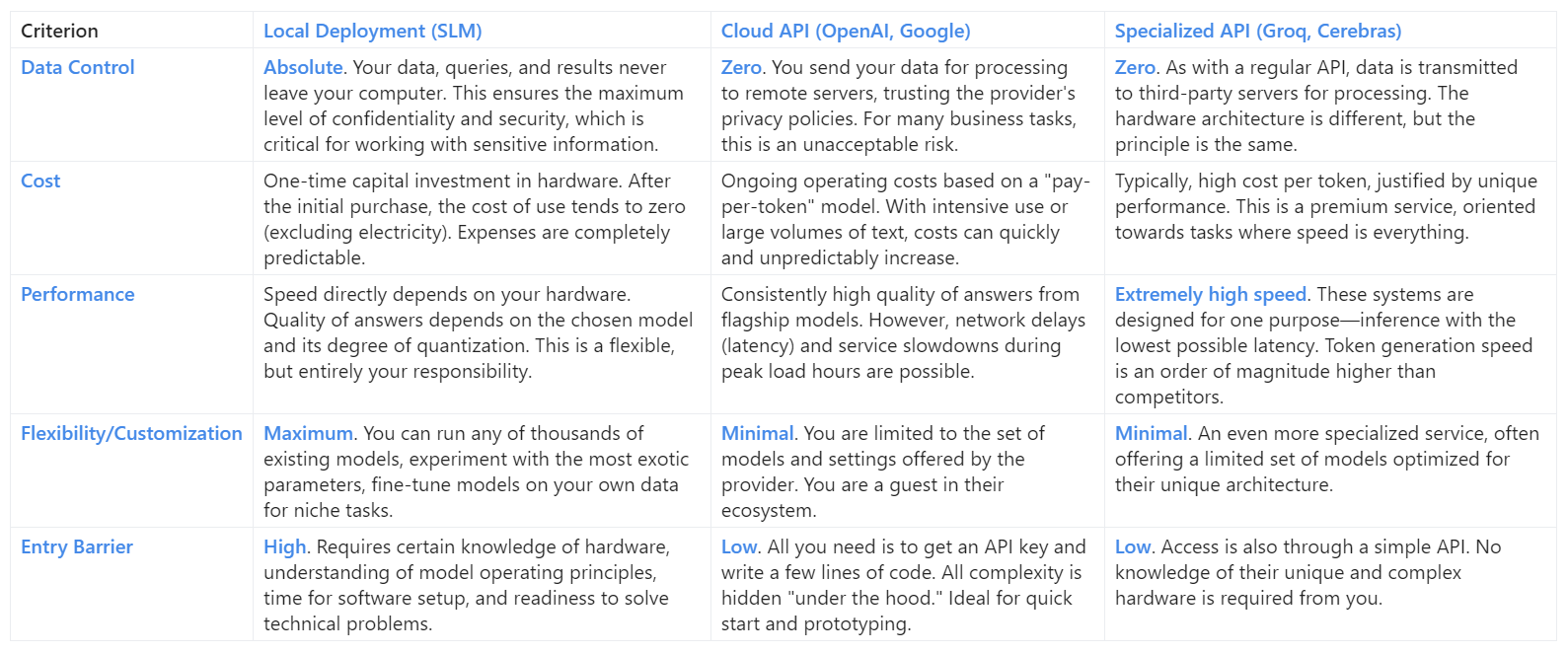

Local or API: An Honest Comparison

We've assembled the hardware, chosen the software, found like-minded people, and armed ourselves with useful utilities. All the pieces of the puzzle seem to be in place. But before we look to the future, we need to pause and conduct an honest, unemotional analysis. It's time to answer the main question: in what situation is our painstakingly assembled "AI kitchen" truly better than ordering food from a restaurant in the form of a cloud API?

I have spent a lot of time working with both local models and various APIs, and I have come to the conclusion that there is no single answer to "what's better?" It's always a compromise, a weighing of priorities. To help you make your own informed decision, I have compiled all the key differences into a single comparative table. In it, we will pit three approaches against each other: our local deployment, a standard cloud API from giants like Google (Gemini 2.5 Pro), and a new generation of highly specialized APIs, exemplified by Groq.

As you can see, the choice boils down to a simple question: what is more important to you? If your priority is absolute data sovereignty, complete predictability of costs, and boundless freedom for experimentation, then local deployment is your only path. If you need quick access to the most powerful model here and now, without initial investment and setup headaches, then cloud APIs remain unrivaled. And if your task requires lightning-fast reaction, for example, in real-time applications, then new, powerful players like Groq or Cerebras are appearing on the horizon.

The Next Frontier. AI in Your Pocket

Having covered this entire journey—from choosing hardware to launching a model on your own smartphone—I want you to pause for a second and realize: what sits under your desk is not just a powerful computer. It's a miniature, personal version of an OpenAI or Google DeepMind research lab. This is how I see the essence of local AI. It's not just a technology; it's the biggest, most accessible, and most exciting "sandbox" for innovation since the advent of the mass internet. It's an opportunity for every enthusiast, developer, and researcher to directly touch the technologies that are changing the world right now, and to start experimenting without regard for API limits, budgets, or corporate roadmaps.

The history of technology has proven many times: real breakthroughs often happen not in the sterile labs of mega-corporations, but in those very "garage" conditions, where passion and curiosity are more important than multi-million dollar investments. The next "ChatGPT" might not be created by a team of hundreds of engineers, but by a single student in a dormitory who, out of boredom, fine-tuned a local model to solve a unique, previously unnoticed problem. Local AI returns this opportunity to our hands. It democratizes access to the most powerful tool of our time.

In this article, I tried to give you a map and a compass: we discussed current hardware, explored software for every taste, and found where to seek help from like-minded people. Now it's your turn. Start experimenting. Download your first model. Try to make it write code, a poem, or a plan for world domination. Break something. Fix it. Create something new, something of your own. The possibilities that open up before you are limited only by your imagination.

Cloud giants are building magnificent "cathedrals" of artificial intelligence, access to which is strictly regulated. The local AI community gives each of us bricks and a trowel to build our own.

Start building today and stay curious.