AI Scientists Are Here: A Grand Tour of the LLMs Changing Fundamental Science

We live in an amazing time. Right before our eyes, a fundamental shift is happening in the scientific method itself—a shift comparable in significance to the invention of the microscope, which opened up the invisible world, or the advent of the computer, which gave us the power of computation. Artificial intelligence is rapidly ceasing to be merely a tool, an obedient calculator in the hands of a researcher, and transforming into something more—a full-fledged partner in discovery.

To truly appreciate the scale of this transformation, let's use an analogy. Previously, a scientist was like a lonely cartographer, meticulously, step by step, mapping uncharted lands onto parchment, relying only on their knowledge and intuition. Today, they are the captain of a high-tech research vessel, charting a course through stormy oceans of data. And their team is an ensemble of highly specialized AI assistants, each possessing superhuman abilities in their field.

In our journey today, we will trace this evolution, this "golden thread" that runs through all modern scientific laboratories. We will see how AI consistently masters three key roles, transforming from a simple assistant into a co-author of discoveries:

- AI-Lab Assistant: This is a tireless executor, capable of sifting through petabytes of data with superhuman speed and accuracy, performing routine but critically important work that would take humans decades. It searches for anomalies in medical images, catalogs genomes, and checks millions of chemical compounds.

- AI-Expert: This is no longer just an executor, but a smart colleague. It possesses deep knowledge in a narrow field, capable of "reasoning" about biochemical processes, planning complex multi-stage experiments, and proposing hypotheses based on analyzed data.

- AI-Theorist: The highest stage of evolution to date. This AI does not just analyze existing things but generates something fundamentally new. It acts as a creative engineer, creating new molecules, materials, or even entire synthetic biological systems according to specified parameters.

But to perform these roles, scientific LLMs had to master unique "superpowers" that distinguish them from their counterparts who write poetry or code. During our tour, we will evaluate each model precisely by this framework of three key abilities:

- Seeing the invisible: The ability to find subtle patterns hidden from the human eye in the chaos of big data. This could be a barely visible pattern on a gigapixel image of a cancerous tumor or a weak correlation in genomic sequences.

- Speaking the language of nature: The fundamental ability to understand and generate the code in which reality itself is written. These are the "languages" of DNA, proteins, chemical compounds, represented as SMILES or amino acid sequences.

- Designing the future: The most impressive power—the ability not just to analyze or predict, but to create new entities with predefined properties. This is inverse design in action.

So, buckle up. Our tour of the laboratories of the future, which are already working today, begins.

Deciphering the Code of Life: Biology and Genomics

Our first destination is the holy of holies of modern science: biology. It is here, in attempts to decipher the fundamental code on which life itself is written, that AI tools are making the most impressive breakthroughs. If you think about it, biology is the science of information. DNA is text, proteins are complex three-dimensional machines assembled from instructions in that text, and their interactions are the language through which cells communicate with each other. And today, we have the first translators capable of speaking this language.

AlphaFold 3 – Translator of Protein Dialogues

Let's start with a living legend. The breakthrough of AlphaFold 2 several years ago, which solved the half-century-old protein folding problem, was a classic example of an AI-Lab Assistant at work. It took an amino acid sequence and predicted a static 3D structure with incredible accuracy. It was brilliant, but it was only a snapshot of a single molecular machine. What if we need to understand how this machine works in conjunction with others?

This is where AlphaFold 3 comes into play, and it's no longer just a lab assistant, but a full-fledged AI-Expert. Its key superpower is "Speaking the language of nature," but now it has mastered not just the alphabet, but the grammar and syntax of protein interactions. The breakthrough of AlphaFold 3 is not in predicting the protein shape even more accurately, but in predicting its "social behavior": which other molecules—DNA, RNA, small molecule ligands (the basis of future drugs)—it will bind to and exactly how. This is the Holy Grail for drug development. It's a shift from a static 3D photograph to a "dance hall" simulator, where AI predicts who will invite whom to dance and how. And it does so with astonishing accuracy: compared to existing methods, the accuracy gain for interactions with other molecules is at least 50%.

Behind this leap is a fundamental architectural shift. Unlike its predecessor, AlphaFold 3 does not use the Evoformer module. Instead, it is built on a new architecture that includes a Pairformer module for processing pairwise representations and, most importantly, a diffusion model for generating the final 3D structure. The new approach reduced the model's dependence on multiple sequence alignments (MSA), and this is what opened up the possibility of modeling large multi-molecule complexes. Of course, this model also has its blind spots, for example difficulties in modeling disordered protein regions.

Evo – Reader of Genomic Epics

If protein interactions are dialogues, then the genome is a multi-volume epic. And here we face a problem of a different scale. Standard LLMs built on the Transformer architecture work well with texts thousands of tokens long. But genomic sequences can reach millions of "letters." For the classic attention mechanism, whose cost grows quadratically with sequence length, this is like reading "War and Peace" while re-reading every earlier page each time you turn to a new one. The model simply "suffocates."

And here comes Evo from Arc Institute—a unique AI-Lab Assistant, specifically designed to read genomic sagas. Its superpower is still the same, "Speaking the language of nature," but this time—in the language of DNA in its true, colossal scale. The secret to its resilience lies in its hybrid StripedHyena architecture, which cleverly combines convolutional layers with attention mechanisms. This allows it to scale almost linearly, rather than quadratically, and process sequences up to one million base pairs long. The analogy is obvious: if a typical AI reads the genome through a keyhole, then Evo is one who can take in the entire chapter at a glance.
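To put the scaling argument in numbers, here is a small back-of-the-envelope sketch. It only counts operations in the abstract: the `n * log2(n)` formula is a generic stand-in for "almost linear" growth and is an assumption for illustration, not the measured cost of StripedHyena or Evo.

```python
import math


def full_attention_pairs(seq_len: int) -> int:
    """Token pairs scored by classic self-attention: grows as n^2."""
    return seq_len * seq_len


def near_linear_cost(seq_len: int) -> int:
    """Generic near-linear cost, n * ceil(log2 n). Illustrative assumption only."""
    return seq_len * max(1, math.ceil(math.log2(seq_len)))


if __name__ == "__main__":
    for n in (8_000, 131_000, 1_000_000):
        quad = full_attention_pairs(n)
        lin = near_linear_cost(n)
        print(f"{n:>9,} tokens: {quad:.2e} (quadratic) vs {lin:.2e} (near-linear), "
              f"ratio {quad / lin:,.0f}x")
```

The gap between the two columns widens as the sequence grows, which is why a million-letter genome is out of reach for plain quadratic attention but plausible for a sub-quadratic design.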

Trained on a colossal dataset (over 9 trillion nucleotides), it is capable not just of generating synthetic systems. Its true power is revealed in something else: in predicting the functional consequences of mutations, especially in the "dark matter" of the genome—non-coding regions where many other models are powerless. This paves the way for understanding the deeper causes of genetic diseases.

Bioptimus – The First Step Towards a Universal Law

AlphaFold 3 and Evo are incredibly powerful, but highly specialized tools. What if we go even further? What if we create a model that understands not individual "languages," but the fundamental laws underlying all of biology? This is precisely the ambitious goal of the Bioptimus project.

This ambition is no longer just a declaration of intent. Bioptimus has made its first move, and it proved to be very powerful: they released H-optimus-1, presented as the largest foundation model for pathology to date.

And here we see how their main trump card—access to unique data—was played to its full extent. H-optimus-1 is a Vision Transformer with 1.1 billion parameters, trained on an unprecedentedly diverse dataset: over a million histological slides from 800,000 patients across 4,000 clinical centers. It is this incredible breadth of data, covering over 50 organs and various scanner types, that allowed the model to learn deep and generalizable patterns.

The result? The model not only demonstrates state-of-the-art performance but also directly competes with giants like Prov-GigaPath, outperforming them on average across key benchmarks (e.g., HEST and a set of slide classification tasks).

This is the first, but incredibly important, step. By choosing pathology as a starting point, Bioptimus has shown that their dream of a "unified theory of biology" is not an abstract goal, but an engineering plan that has already begun to materialize. They have created not just another pathology model, but a foundation upon which, evidently, the next, even more complex systems will be built.

Digital Medicine: From Diagnosis to Drug

We have seen how AI learns to read the fundamental texts of life—genomes and protein structures. Now let's move from theoretical laboratories to clinical reality and see how these abilities are changing two cornerstones of medicine: diagnosis and drug creation. Here, AI systems take on roles that just yesterday seemed exclusively the domain of human expertise.

Prov-GigaPath, CHIEF, TANGLE – Eagle-Eyed Detectives

The first and perhaps most obvious application area is medical image analysis, particularly histopathology, where a doctor examines tissue sections under a microscope in search of signs of cancer. The problem here is twofold. First, the sheer volume of data is colossal: a single digitized slide is a gigapixel image, comparable in resolution to a map of a small city. Second, data from different hospitals vary greatly due to different equipment and staining protocols. This is the so-called "domain shift," the main enemy of reliable medical AI.
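To get a feel for what "gigapixel" means in practice: a common approach in computational pathology is to cut a slide into small square tiles and process those. The sketch below does only the arithmetic. The slide dimensions and the 256-pixel tile size are illustrative assumptions, not figures from any specific dataset.

```python
import math


def tile_count(width_px: int, height_px: int, tile_px: int = 256) -> int:
    """Number of square tiles needed to cover a slide, padding the last row and column."""
    return math.ceil(width_px / tile_px) * math.ceil(height_px / tile_px)


if __name__ == "__main__":
    # Hypothetical slide size, chosen only to illustrate the order of magnitude.
    width, height = 100_000, 100_000
    gigapixels = width * height / 1e9
    print(f"{gigapixels:.0f} gigapixels -> {tile_count(width, height):,} tiles of 256x256")
```

Even one slide of this size turns into well over a hundred thousand tiles, so a model has to combine local detail with a view of the slide as a whole.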

It is precisely to solve these problems that a whole galaxy of models has been created, which are the ideal embodiment of the role of an AI-Lab Assistant. Their main superpower is "Seeing the invisible."

Prov-GigaPath and CHIEF are masters of visual analysis. The first is a titan, trained on 1.3 billion image tiles, capable of seeing global patterns on gigapixel slides. The second is a specialist in robustness, having overcome "domain shift" and proven its reliability on data from 24 different hospitals. They have perfected AI's ability to analyze what the eye sees.

And the TANGLE model takes the next, evolutionary step—from pure vision to multimodal understanding. It doesn't just look at a picture. Its key task is to match visual patterns on the slide with underlying gene expression data (transcriptomics). This is no longer just anomaly detection, but an attempt to understand its biological cause. It's a shift from asking "what do I see?" to "why do I see it?".

Together, they form a team of tireless lab assistants who can review every pixel on a city-sized image, do so with consistent accuracy across dozens of different clinics, and correlate what they see with a patient's genetic profile.

Tx-LLM and TxGemma: Evolution of the AI-Pharmacist

So, the diagnosis has been made. The next step is treatment. And here we see AI evolving from the role of a lab assistant to that of an AI-Expert. The process of creating a new drug is a long and arduous journey, consisting of dozens of stages: target identification, generation of candidate molecules, prediction of their properties, toxicity, and planning of clinical trials. Traditionally, each task used its own highly specialized software.

A breakthrough in this area was made by the Tx-LLM model from Google Research and DeepMind. This was a foundational system, fine-tuned from PaLM-2, which could be described as a universal machine for the entire drug development cycle, capable of solving 66 different tasks. The key discovery was the demonstration of knowledge transfer: by training the model on proteins, its performance with small molecules could be improved. This was a sign of a true expert being born.

But, as often happens in the world of AI, the revolution had not yet cooled before its next phase arrived. In March 2025, Google introduced TxGemma—an open-source successor, built on the new Gemma 2 architecture. And the results are impressive: the largest model in the TxGemma family surpassed or matched its predecessor's performance in 64 out of 66 tasks. This is not just an update—it's a paradigm shift. The move to open models means democratizing this powerful tool, allowing any research group to adapt and fine-tune it for their unique data and tasks.

Moreover, TxGemma brought with it two qualitative leaps that perfectly illustrate the evolution of the AI-Expert:

- Introduction of a "chat" version: In addition to models for predictions (predict), conversational ones (chat) also appeared. This means that now a researcher can not only get an answer ("will this molecule be toxic?"), but also ask the next, most important question: "And why do you think so?". The model's ability to explain its logic, based on the molecule's structure, transforms it from an oracle into a full-fledged analytical colleague.

- Integration into agentic systems: TxGemma is not the end point. Developers showed how it can become a "tool" in the hands of a more complex system, Agentic-Tx. This is no longer just a model, but a full-fledged AI agent based on Gemini 2.0 Pro, which can independently plan multi-step research, use 18 different tools (including searching PubMed and TxGemma itself), and answer complex questions. This is precisely the coordinated team of specialized AI assistants we spoke of at the beginning, in action.

Chemical Intuition in Silicon

We have just seen how the TxGemma family of models creates a unified platform for the entire drug development cycle. But to truly succeed in this, AI must master not just biochemistry, but its fundamental basis—chemistry. This is a world with its own strict language, where instead of words there are molecules, and instead of grammar there are laws of chemical reactions. For decades, chemists have relied on their intuition—that difficult-to-formalize sense that suggests which reaction will proceed and which will not. Today, we see this intuition being born in silicon.

ChemLLM and GAMES – From Expert Knowledge to Creativity

The first and most important step is to teach AI to freely "Speak the language of nature," in this case—the language of chemistry. The most popular dialect here is SMILES—a way to represent a complex three-dimensional molecule as a simple text string. And here an interesting plot unfolds.

It would seem that giants like GPT-4, trained on the entire internet, should easily cope with such a task. But this is where the most interesting battle unfolds: the universal polymath against the narrow specialist. The ChemLLM model, fine-tuned on a huge corpus of chemical literature, challenges the titan on the key ChemBench benchmark. And the result is impressive. The specialized model wins in six out of nine categories. But the most important conclusion is made by the authors themselves: ChemLLM demonstrates performance comparable to GPT-4. At first glance, "comparable results" may not seem like a complete victory, but in fact, this is the main breakthrough. It proves that to achieve the highest level in a complex scientific field, you don't always need the budget of a technology giant. You need focused expertise and the right data. ChemLLM is a triumph of specialization.

But if ChemLLM is a brilliant expert who can stand on par with the best in the world in analyzing existing reactions, then GAMES takes the next, far more ambitious step. It moves from the role of AI-Expert to the role of AI-Theorist. Its task is not to analyze the past, but to "Design the future."

To understand the scale of the problem: the number of potentially possible, "drug-like" molecules is estimated at 10^60. This number is so astronomical that it is physically impossible to enumerate all variants. This is the "chemical space" where one needs not to find a needle in a haystack, but to learn how to create needles with desired properties on demand. And GAMES does exactly that. It does not search, but generates new, valid, and promising SMILES sequences, acting as a creative partner for the chemist. This is no longer just knowledge, it is creativity.
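What "valid" means for a generated SMILES string can be illustrated with a toy filter. The sketch below is not how GAMES works and is not a real SMILES parser. It only checks two surface rules: branch parentheses must balance, and every single-digit ring-closure label must be opened and closed. The function name is hypothetical, and two-digit ring labels (`%10` and up) are deliberately not handled.

```python
import re

# Bracket atoms such as [NH4+] or [13C] contain digits that are not ring closures.
BRACKET_ATOM = re.compile(r"\[[^\]]*\]")


def passes_basic_smiles_checks(smiles: str) -> bool:
    """Toy syntax check: balanced branches and paired single-digit ring closures."""
    if not smiles:
        return False
    stripped = BRACKET_ATOM.sub("*", smiles)  # replace bracket atoms with a wildcard
    depth = 0
    open_rings = set()
    for ch in stripped:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:  # a branch was closed before it was opened
                return False
        elif ch.isdigit():
            # First occurrence opens a ring bond, second occurrence closes it.
            open_rings.symmetric_difference_update({ch})
    return depth == 0 and not open_rings


if __name__ == "__main__":
    generated = ["CCO", "c1ccccc1", "CC(=O)OC1=CC=CC=C1C(=O)O", "C1CCCC", "CC(=O"]
    for s in generated:
        print(f"{s:<28} {'keep' if passes_basic_smiles_checks(s) else 'discard'}")
```

In a real pipeline this job belongs to a cheminformatics toolkit such as RDKit, which also checks valence and aromaticity. The toy version only shows why a generator that emits text still needs a chemistry-aware filter behind it.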

"Synthetic Trio" (SynAsk, Chemma, SynthLLM) – A Team of AI Chemists

Another approach to chemical automation is not to create one almighty model, but to bet on microspecialization. To see how this works in practice, let's mentally assemble our own "dream team" of three different, independently developed AI-Experts. It is important to emphasize: they are not part of a single project, but their functions so perfectly complement each other that together they serve as an excellent illustration of what a fully automated synthesis process could look like.

- SynAsk – The Strategist. They are shown a final, complex molecule that needs to be obtained. Their task is retrosynthesis. Like an experienced grandmaster, they plan the game in reverse, planning the entire sequence of reactions that will lead from simple and available reagents to the desired result.

- Chemma – The Tactician. They take one specific step from the plan proposed by SynAsk and predict its result with maximum accuracy. What will be the product yield? What side reactions are possible? Their narrow specialization in single-step prediction allows for the highest reliability at this critically important stage.

- SynthLLM – The Practical Lab Assistant. This is the one who turns a theoretical plan into a real recipe. They take the proposed reaction and select the ideal conditions for it: which catalyst to use, which solvent, what temperature to set. And they do this with astonishing efficiency. In one of the published cases, for a complex Suzuki-Miyaura cross-coupling reaction, SynthLLM proposed optimal conditions with an accuracy of over 85%, which allowed reducing the number of iterations in a real laboratory experiment by 60%.

Assembled together in this concept, they demonstrate the power of microspecialization. They automate the routine but intellectually demanding process of chemical synthesis, freeing up the scientist's time for truly creative tasks. We see how chemistry transforms from an art of intuition into precise engineering, where AI acts as architect, foreman, and material supplier at once.

Beyond the Possible: Materials and Quanta

We have come a long way: from deciphering the code of life and diagnosing diseases to designing drugs at the atomic level. In each of these chapters, AI demonstrated increasingly complex capabilities. And now we have reached the culmination of our journey. The point where artificial intelligence finally stops being a tool for analyzing the past and becomes a generator of the future. We are entering territory where AI does not just help scientists, but offers them what they never dared to dream of—creating materials that have never existed in nature.

Argonne Models – Designers of Matter

Here, within the walls of Argonne National Laboratory, we see the highest manifestation of the AI-Theorist role. Its superpower—"Designing the future"—unfolds in all its glory. The task facing material scientists surpasses even drug discovery in its complexity. The very "chemical space" we mentioned, containing up to 10^60 possible compounds, is their workbench. The traditional approach is an endless cycle of synthesis and testing, a slow and agonizing trial-and-error process in the hope of stumbling upon something with the desired properties.
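A quick calculation shows why brute-force enumeration of such a space is hopeless. The evaluation rate of one billion candidates per second is a deliberately generous, hypothetical figure. The 10^60 estimate is the one quoted in the text.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # about 3.16e7 seconds


def years_to_enumerate(n_candidates: float, rate_per_second: float) -> float:
    """Years needed to visit every candidate once at a fixed evaluation rate."""
    return n_candidates / rate_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    CHEMICAL_SPACE = 1e60          # estimate quoted in the text
    RATE = 1e9                     # hypothetical: one billion candidates per second
    AGE_OF_UNIVERSE_YEARS = 1.38e10
    years = years_to_enumerate(CHEMICAL_SPACE, RATE)
    print(f"{years:.2e} years, about {years / AGE_OF_UNIVERSE_YEARS:.1e} "
          f"times the age of the universe")
```

No realistic speed-up closes a gap of this size, so the only workable strategy is to avoid enumeration altogether.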

The foundation models being developed at Argonne in collaboration with the University of Michigan turn this paradigm upside down. They embody the concept of "inverse design." This sounds like science fiction, but it is already working today. Instead of asking, "What properties does this new material have?", the scientist asks, "What material has the properties I need?".

Imagine this dialogue. You are not testing thousands of alloys to find one that is heat-resistant. You tell an AI, running on supercomputers like Aurora and Polaris: "Design me an alloy that withstands 2000°C, is as light as aluminum, and doesn't rust in salt water." And the model, trained on the properties of billions of known molecules, generates a list of completely new, previously nonexistent candidate compounds that are highly likely to possess precisely these characteristics. This is a fundamental shift from discovery to purposeful invention. AI doesn't just search in a giant library—it writes new books at the reader's request.
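The shape of that request can be sketched in a few lines. This is a toy generate-and-screen loop, the crudest stand-in for inverse design, and not the Argonne method. Everything in it is hypothetical: the three components X, Y, Z, their made-up property values, and the linear mixing rule that plays the role of a trained property predictor.

```python
import random
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Candidate:
    x: float  # fraction of hypothetical component X
    y: float  # fraction of hypothetical component Y
    z: float  # fraction of hypothetical component Z


# Made-up per-component values: (temperature limit in deg C, density in g/cm^3).
PROPS = {"x": (3400.0, 19.0), "y": (700.0, 2.7), "z": (1700.0, 4.5)}


def predict(c: Candidate) -> Tuple[float, float]:
    """Toy surrogate model (linear mixing), standing in for a learned predictor."""
    temp = c.x * PROPS["x"][0] + c.y * PROPS["y"][0] + c.z * PROPS["z"][0]
    dens = c.x * PROPS["x"][1] + c.y * PROPS["y"][1] + c.z * PROPS["z"][1]
    return temp, dens


def propose(rng: random.Random) -> Candidate:
    """Random composition whose three fractions are non-negative and sum to 1."""
    a, b = sorted((rng.random(), rng.random()))
    return Candidate(a, b - a, 1.0 - b)


def inverse_design(min_temp: float, n_samples: int = 10_000,
                   seed: int = 0) -> Optional[Candidate]:
    """Return the lightest sampled candidate that meets the temperature target."""
    rng = random.Random(seed)
    feasible = [c for c in (propose(rng) for _ in range(n_samples))
                if predict(c)[0] >= min_temp]
    return min(feasible, key=lambda c: predict(c)[1], default=None)


if __name__ == "__main__":
    best = inverse_design(min_temp=2000.0)
    print(best, predict(best) if best else "no candidate found")
```

The point of the sketch is the direction of the query: the caller states the target property and gets a candidate back. A real system replaces random proposals with a generative model and the linear rule with a predictor trained on actual materials data.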

SECQAI QLLM – Looking Beyond the Horizon

If models from Argonne are the pinnacle of what is possible with classical computing today, what awaits us beyond the next horizon? To peer into that future, we need to turn to developments that seem almost speculative, but it is precisely these that define the direction of development for decades to come. We are talking about the fusion of the two most groundbreaking technologies of our time: artificial intelligence and quantum computing.

The SECQAI QLLM project is the first attempt to create a hybrid Quantum Large Language Model. This is a future that is currently in closed R&D, in "private beta." The key innovation that its creators are working on is a "quantum attention mechanism." The idea is to replace part of the classical transformer architecture with a quantum algorithm. Theoretically, this could yield an exponential increase in performance when processing complex interdependencies in data, which is the essence of the attention mechanism.

Of course, such claims should be met with healthy skepticism. Modern quantum computers are still too "noisy" and unstable to solve such problems on an industrial scale. But the attempt itself is important as a precedent. This is the first step towards an entirely new class of models that will be able to solve problems inaccessible even to the most powerful classical supercomputers. The development of their own quantum simulator and the integration of quantum components into LLMs is laying the foundation for a future revolution. If today's AIs learn to speak the language of nature, tomorrow's may begin to "think" in it, using the same quantum laws that govern matter itself.

So, our tour of the zoo of scientific AI models is complete. We have seen a kaleidoscope of technologies, from biology to quantum physics. To comprehend the scale of these changes and prepare for the main conversation, let's bring all that we have seen onto a single map.

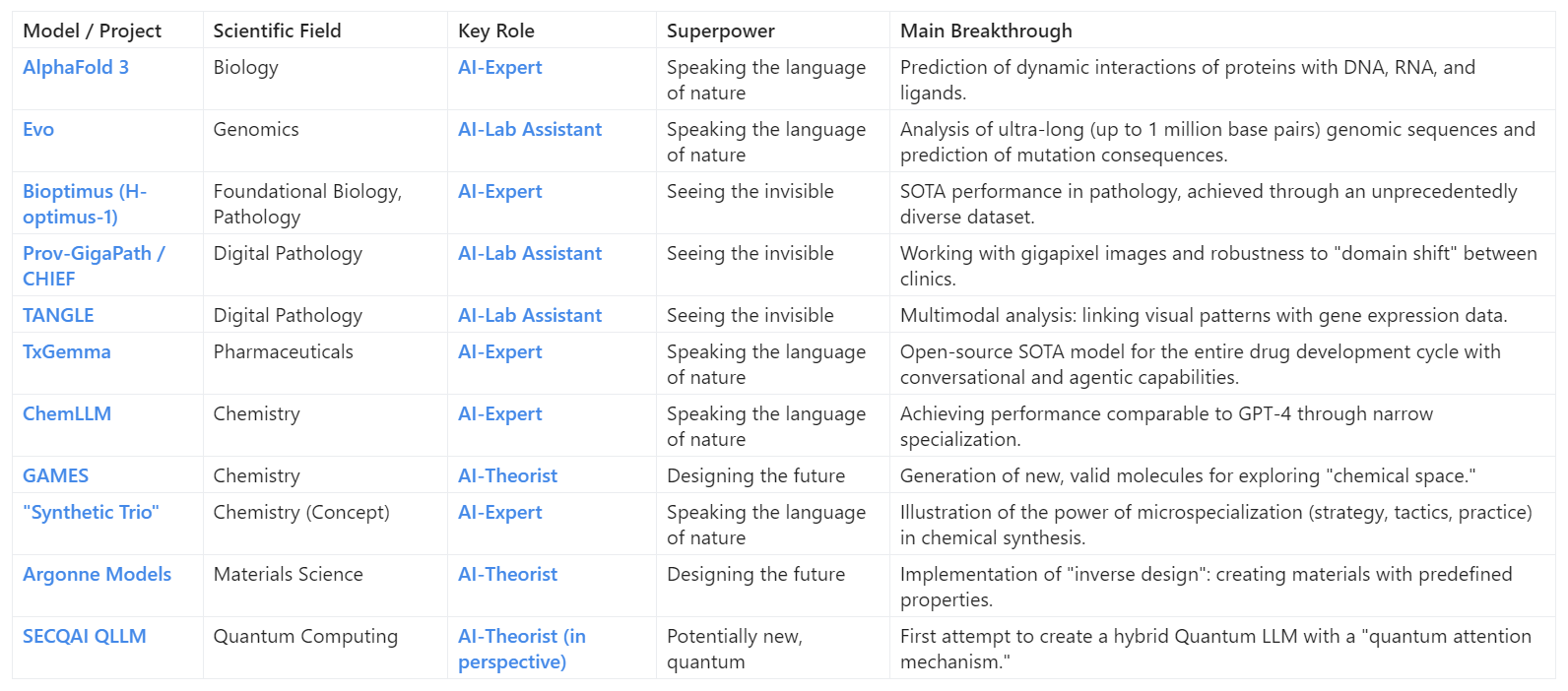

Map of Scientific AI Models

What does this change for the scientist and for all of us?

This map clearly demonstrates the scale and diversity of the new scientific revolution. But behind the kaleidoscope of exotic names—from AlphaFold 3, predicting the dance of proteins, to Argonne models, designing matter on demand—lies the main, most important question: "So what?" How does this tectonic shift change science, the profession of a scientist, and, ultimately, our lives? Let's figure it out.

1. How does the scientist's work change?

The most fundamental change occurs in the very essence of the profession. The focus of a researcher's work shifts from exhausting manual labor to higher-order tasks: asking the right, deep questions and critically verifying hypotheses generated by artificial intelligence. The role of the scientist evolves from an experimenter, spending hours at the lab bench, to a conductor of a complex AI orchestra. Their main task now is not to mix reagents in a test tube, but to formulate the ideal "prompt" for the AI system to direct its creative and analytical power in the right direction.

Furthermore, the AI-Lab Assistant helps with one of the oldest and most painful problems in science: the reproducibility crisis. A human researcher can get tired, make a minor error in the protocol, or subconsciously interpret data in favor of their hypothesis. An AI system, given the same data and settings, can repeat the same analysis a million times without fatigue or drift. This makes results easier to reproduce and audit, laying a more solid foundation for scientific knowledge.

2. Which "unsolvable" problems are now solvable?

The new toolkit opens the way to solving problems that were previously considered unsolvable due to their combinatorial complexity.

- Combinatorial explosion in materials science and chemistry, where the number of possible compounds (around 10^60 for drug-like molecules alone) rules out any exhaustive search, is no longer an insurmountable barrier. Models like GAMES and Argonne systems allow not for searching for a needle in a haystack, but for purposefully generating "needles" with the properties we need.

- Truly personalized medicine ceases to be a beautiful concept and becomes an engineering task. Systems like TANGLE and Prov-GigaPath, capable of simultaneously analyzing histological images, genomic data, and a patient's clinical history, allow for the creation of therapeutic strategies tailored to the unique characteristics of a specific individual's body.

- The speed of drug development can change dramatically. A process that used to take decades and cost billions of dollars has every chance, thanks to platforms like TxGemma, of shrinking to a few years, and in the longer term perhaps even to months.

3. The main barrier, new "gold," and new risks

However, this new world also creates a new scarcity. The bottleneck is not so much algorithms or computational power, but access to high-quality, well-structured, and multimodal data. The Bioptimus case proves this best: their strategic advantage is not a new transformer architecture, but exclusive access to proprietary clinical data that links different levels of biological information. Data becomes a new strategic resource, new "gold," for which corporations and scientific institutions are vying.

And, of course, a powerful new tool carries new, non-trivial risks. First, there's the problem of "hallucinations." In a scientific context, this is not just an amusing fabrication, but the generation of a plausible but false chemical reaction or protein structure, which can lead an entire research group down the wrong path, burning months of work and millions of dollars in funding. Second, there's the risk of systemic bias. A model trained on data dominated by research on one ethnic group, or on outdated scientific theories, will not refute existing dogmas but reinforce them with incredible efficiency, hiding them deep within its "black box."

A New Era of Scientific Partnership

At the beginning of our journey, we compared the modern scientist to the captain of a research vessel. Now, having passed through the laboratories of biology, medicine, chemistry, and materials science, we can get to know their AI team better.

We have seen the tireless AI-Lab Assistant in the form of Prov-GigaPath, which reviews millions of medical images, finding what the human eye might miss. We met the intelligent AI-Expert, such as TxGemma, which masters the full complexity of biochemical processes and is capable of replacing dozens of disparate tools in the drug creation process. And finally, we witnessed the birth of the creative AI-Theorist in GAMES and the Argonne models, which do not just analyze what exists, but create something new, designing molecules and materials of the future according to blueprints set by humans.

The evolution from Lab Assistant to Theorist is not just technological progress. It is the birth of a new type of scientific partnership. The scientist of the future is truly no longer a lone individual, making their way through the darkness of the unknown with a lantern in hand. They are the captain standing on the bridge, setting the strategic course, while their silicon team performs complex maneuvers in the stormy waters of combinatorial complexity, analyzes oceans of data, and even suggests new, uncharted routes.

This revolution has already happened, and it is not so much in silicon as it is in our minds. The question now is not whether AI can give us answers—it already does. The question is whether we will have the courage and imagination to ask it questions that once seemed to belong to science fiction. Because in this new partnership, it is the human who retains the right to dream.

Stay curious.