
The New Rules of the Game: What GPT-5, Genie 3, and Qwen-Image Reveal About the Future of AI

I don't often write news summaries, but early August 2025 was too eventful to pass over in silence. In a single week, the largest AI labs (OpenAI, Google DeepMind, and Alibaba) unveiled several releases that, in my opinion, deserve special attention. I've selected the most illustrative ones to analyze not only their technical essence but also how they reflect key industry trends. In this article, we'll look at OpenAI's long-awaited return to open source and the launch of GPT-5, Google DeepMind's Gemini 2.5 Deep Think and its breakthrough in world simulation with Genie 3, and Alibaba's elegant solution to the perennial problem of text in images.

OpenAI: A Double Whammy — GPT-OSS and the Long-Awaited GPT-5

Of all the players who took the stage that week, OpenAI naturally drew the most attention. And it didn't disappoint, delivering a "double whammy" that brought it back into the big open-source game and set a new standard for commercial models at the same time. Let's break down both moves in order.

ClosedAI is now Open (August 5)

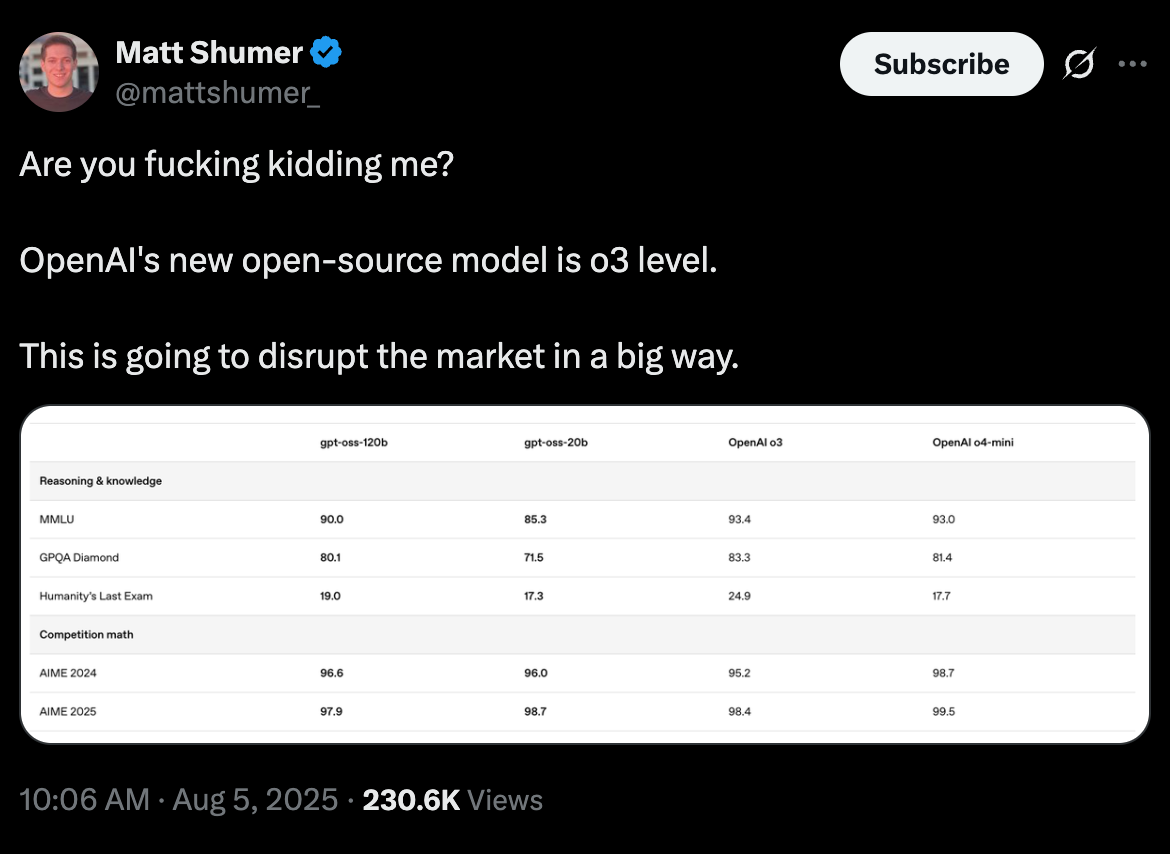

The first move was an event the open-source community had awaited for years: OpenAI released its first open-weight language models since GPT-2. The release of gpt-oss-120b and gpt-oss-20b caused a genuine sensation. OpenAI positions the larger model close to o4-mini on core reasoning benchmarks and the smaller one close to o3-mini, and both are designed for local execution: gpt-oss-120b fits on a single GPU with 80 GB of VRAM, while gpt-oss-20b runs on a machine with 16 GB of memory.
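
A rough back-of-the-envelope calculation shows why these memory figures are plausible. The sketch below assumes every weight is stored in MXFP4 (the 4-bit format mentioned in the next paragraph: 4-bit elements plus one shared 8-bit scale per 32-element block, about 4.25 bits per parameter). In practice only part of the weights, reportedly the MoE layers, use this format, so real checkpoints are somewhat larger. The 21B total for gpt-oss-20b comes from OpenAI's model card rather than this article, and KV cache, activations, and runtime overhead are ignored.

```python
def weight_gb(n_params: float, bits_per_param: float) -> float:
    """Weight storage in GB (1 GB = 1e9 bytes); ignores KV cache, activations, overhead."""
    return n_params * bits_per_param / 8 / 1e9

# MXFP4 (OCP Microscaling): 4-bit E2M1 elements + one shared 8-bit scale per 32 elements.
MXFP4_BITS = 4 + 8 / 32  # 4.25 bits per parameter
BF16_BITS = 16

# Simplifying assumption: treat *all* weights as MXFP4 (in reality only part of them are).
MODELS = {"gpt-oss-120b": 117e9, "gpt-oss-20b": 21e9}

for name, n in MODELS.items():
    print(f"{name}: BF16 ~{weight_gb(n, BF16_BITS):.0f} GB, "
          f"MXFP4 ~{weight_gb(n, MXFP4_BITS):.1f} GB")
# gpt-oss-120b: BF16 ~234 GB, MXFP4 ~62.2 GB
# gpt-oss-20b: BF16 ~42 GB, MXFP4 ~11.2 GB
```

Even under this simplification the point stands: at 16 bits per weight neither model would fit its target hardware, while at roughly 4 bits both leave headroom for the KV cache.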

Technically, the models turned out to be very interesting. Judging by leaked configuration files and community analysis, they use a Mixture-of-Experts (MoE) architecture with features such as attention sinks and MXFP4 quantization. The "120B" model actually has 117B parameters, of which only 5.1B are active for any given token, which accounts for its impressive speed.
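
To make "only 5.1B of 117B parameters are active" concrete, here is a minimal top-k MoE routing sketch. It is a generic illustration, not gpt-oss's actual layer: the four toy experts, the router weights, and k=2 are hypothetical values chosen for readability (a real MoE layer has many more experts, each a full feed-forward network).

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, router_rows, experts, k=2):
    """Route token vector x to its top-k experts and mix their outputs by gate weight."""
    # One router logit per expert: dot product of the token with that expert's router row.
    logits = [sum(r * xi for r, xi in zip(row, x)) for row in router_rows]
    # Keep only the k highest-scoring experts; all others are skipped entirely.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)  # only these k experts are ever evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top

# Toy setup (hypothetical): 4 experts, each just scales its input by a different factor.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
router_rows = [[0.1, 0.0], [0.5, 0.0], [0.3, 0.0], [0.9, 0.0]]
out, used = moe_layer([1.0, 0.0], router_rows, experts, k=2)
print(used)  # [3, 1]: the two experts with the highest router logits
print(f"gpt-oss-120b active share: {5.1 / 117:.1%}")  # 4.4%
```

Because unselected experts are never evaluated, compute per token scales with k rather than with the total number of experts, while memory still has to hold all of them.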

Community reaction was predictably turbulent. On one hand, there was excitement: enthusiasts jubilantly welcomed OpenAI's return to the "open" camp, and the ecosystem reacted instantly, with integrations into vLLM, Ollama, and Hugging Face appearing within 24 hours. But the honeymoon didn't last long. Criticism soon poured in: the models turned out to be so heavily "censored" ("safetymaxxed") that the community immediately dubbed them "GPT-ASS." Users noted an extremely high refusal rate even for completely harmless queries. To top it all off, users reported that the model, even when running locally, was "calling home" by accessing openaipublic.blob.core.windows.net on launch, which sparked a wave of outrage and undermined trust in the release's "openness."

GPT-5 Launch — Evolution, Not Revolution (August 7)

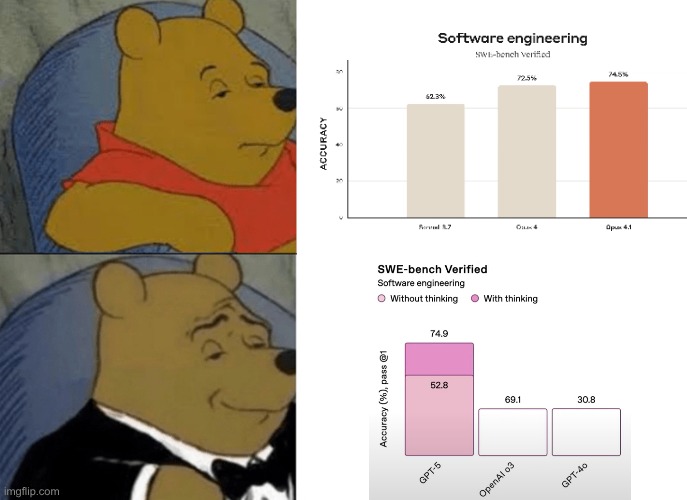

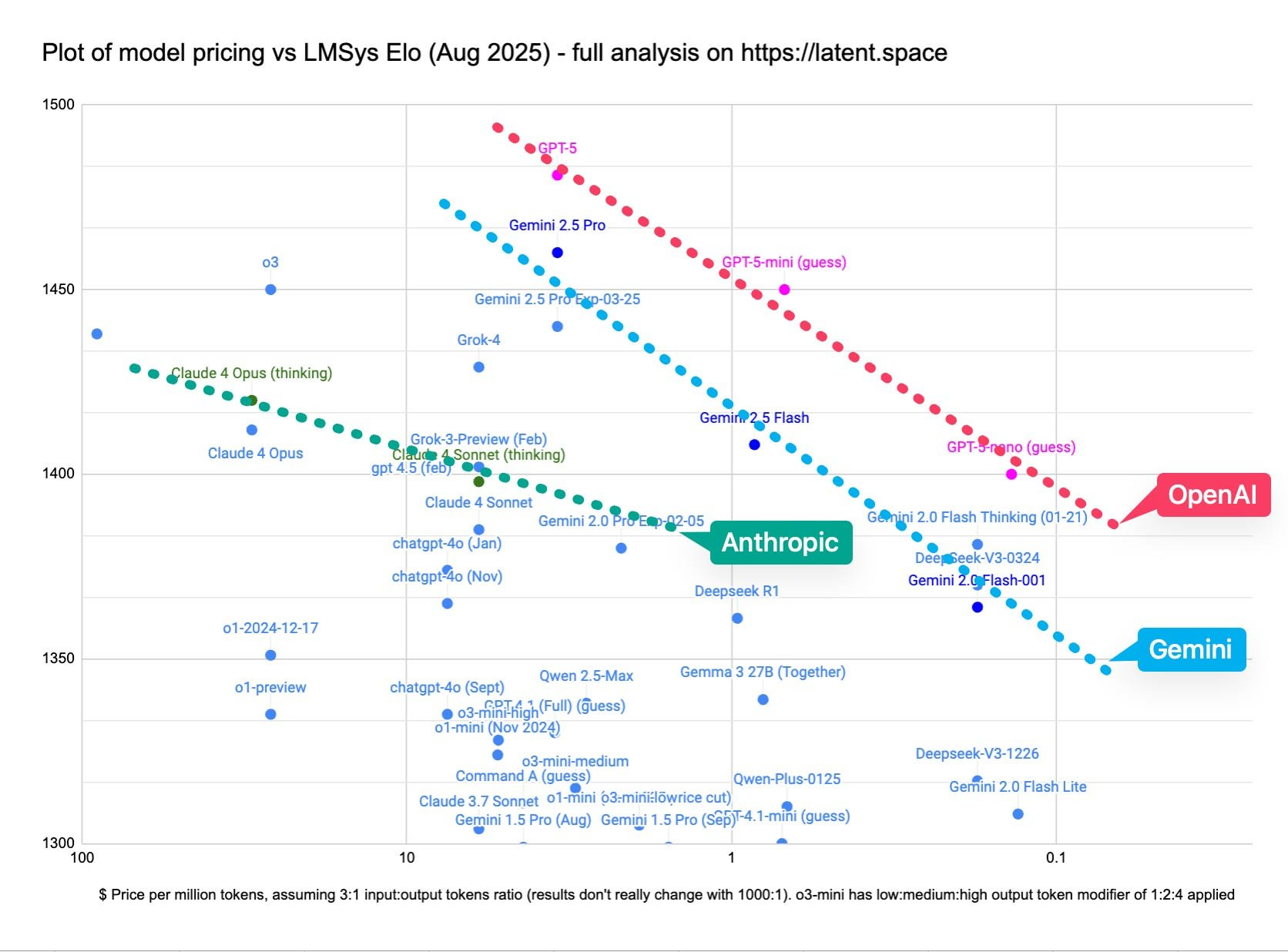

If the GPT-OSS release was a kind of aperitif, the main course was undoubtedly the launch of GPT-5 just two days later. The key insight here is that GPT-5 is not a monolithic titan but an intelligent router: a "unified system" that decides in real time which model should process a request, a fast "main" model or a deeper, slower "thinking" model. Alongside the flagship, OpenAI also released the smaller gpt-5-mini and gpt-5-nano.
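
OpenAI has not published how its router makes decisions, so the following is only a hypothetical illustration of the idea: a function that sends a request either to the fast "main" model or to the "thinking" model. The keyword list, the 300-word threshold, and the `route` function are all invented for this sketch; a production router would be a learned classifier rather than hand-written rules.

```python
from dataclasses import dataclass

@dataclass
class Route:
    model: str   # "main" (fast) or "thinking" (slower, deeper)
    reason: str

# Hypothetical cues for multi-step problems; crude substring matching, for illustration only.
HARD_CUES = ("prove", "step by step", "debug", "optimize", "derive")
LONG_PROMPT_WORDS = 300  # hypothetical threshold

def route(prompt: str, user_asked_to_think: bool = False) -> Route:
    text = prompt.lower()
    if user_asked_to_think:
        return Route("thinking", "explicit user request")
    if any(cue in text for cue in HARD_CUES):
        return Route("thinking", "prompt looks like multi-step reasoning")
    if len(text.split()) > LONG_PROMPT_WORDS:
        return Route("thinking", "long, complex prompt")
    return Route("main", "default fast path")

print(route("What's the capital of France?"))
print(route("Prove that the square root of 2 is irrational."))
```

The design point is that the caller sends one request to one endpoint, and the cost/latency trade-off is made per request behind it.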

However, the launch was not without controversy. The main meme became "chart crimes": presentation charts whose bars were drawn out of scale, so that 52.8% appeared higher than 69.1%. This unleashed a barrage of criticism and ridicule and seriously undermined trust in the presented benchmarks.

Regarding performance, the community agreed: it's an evolution, not a revolution. The gains proved incremental. The model is indeed strong on long-context tasks and, judging by initial feedback, hallucinates significantly less often. However, it fell behind on some demanding benchmarks: on ARC-AGI-2, for example, GPT-5 scored 9.9% against Grok 4's 15.9%. Talk of a "plateau" in simple LLM scaling became increasingly common.

The real surprise was the pricing. OpenAI made GPT-5 cheaper than Claude Sonnet, with comparable or even superior performance. This is an aggressive move that could significantly reshape the market. The broad day-one integration with tools like Cursor, Perplexity, and Notion was also positively received. Perhaps the main trend set by this release is the shift in focus from choosing a specific model to managing "reasoning effort," which could become a new paradigm in AI application development.
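
The pricing point and the "reasoning effort" point are connected: hidden reasoning tokens are billed at the output-token rate, so the effort level largely determines what a request actually costs. The sketch below assumes launch list prices (GPT-5 at $1.25/$10 and Claude Sonnet 4 at $3/$15 per million input/output tokens) and a hypothetical workload; the reasoning-token counts per effort level are illustrative guesses, not measurements.

```python
# Assumed launch list prices in USD per 1M tokens; verify against current pricing pages.
PRICES = {
    "gpt-5": {"input": 1.25, "output": 10.00},
    "claude-sonnet-4": {"input": 3.00, "output": 15.00},
}

def request_cost(model: str, input_tokens: int, output_tokens: int,
                 reasoning_tokens: int = 0) -> float:
    """USD cost of one request; hidden reasoning tokens are billed at the output rate."""
    price = PRICES[model]
    billed_output = output_tokens + reasoning_tokens
    return (input_tokens * price["input"] + billed_output * price["output"]) / 1_000_000

# Hypothetical request: 2,000 input tokens and 500 visible output tokens.
# Reasoning-token counts per effort level are illustrative guesses, not measurements.
for effort, reasoning in [("minimal", 0), ("medium", 2_000), ("high", 8_000)]:
    print(f"gpt-5 ({effort}): ${request_cost('gpt-5', 2_000, 500, reasoning):.4f}")
print(f"claude-sonnet-4: ${request_cost('claude-sonnet-4', 2_000, 500):.4f}")
```

With these assumed numbers, a minimal-effort GPT-5 request costs just over half as much as Claude Sonnet 4 ($0.0075 vs $0.0135), while a high-effort one costs about six times more ($0.0875). That is why choosing the effort level becomes a design decision in its own right.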

Google DeepMind: From Mathematics to World Simulation

While OpenAI fought for developers with open weights and aggressive pricing, Google DeepMind chose to demonstrate pure, fundamental science. Its announcements weren't about market share but about pushing the boundaries of what's possible. It showcased two very different, yet equally impressive, breakthroughs: one in abstract reasoning, the other in reality simulation.

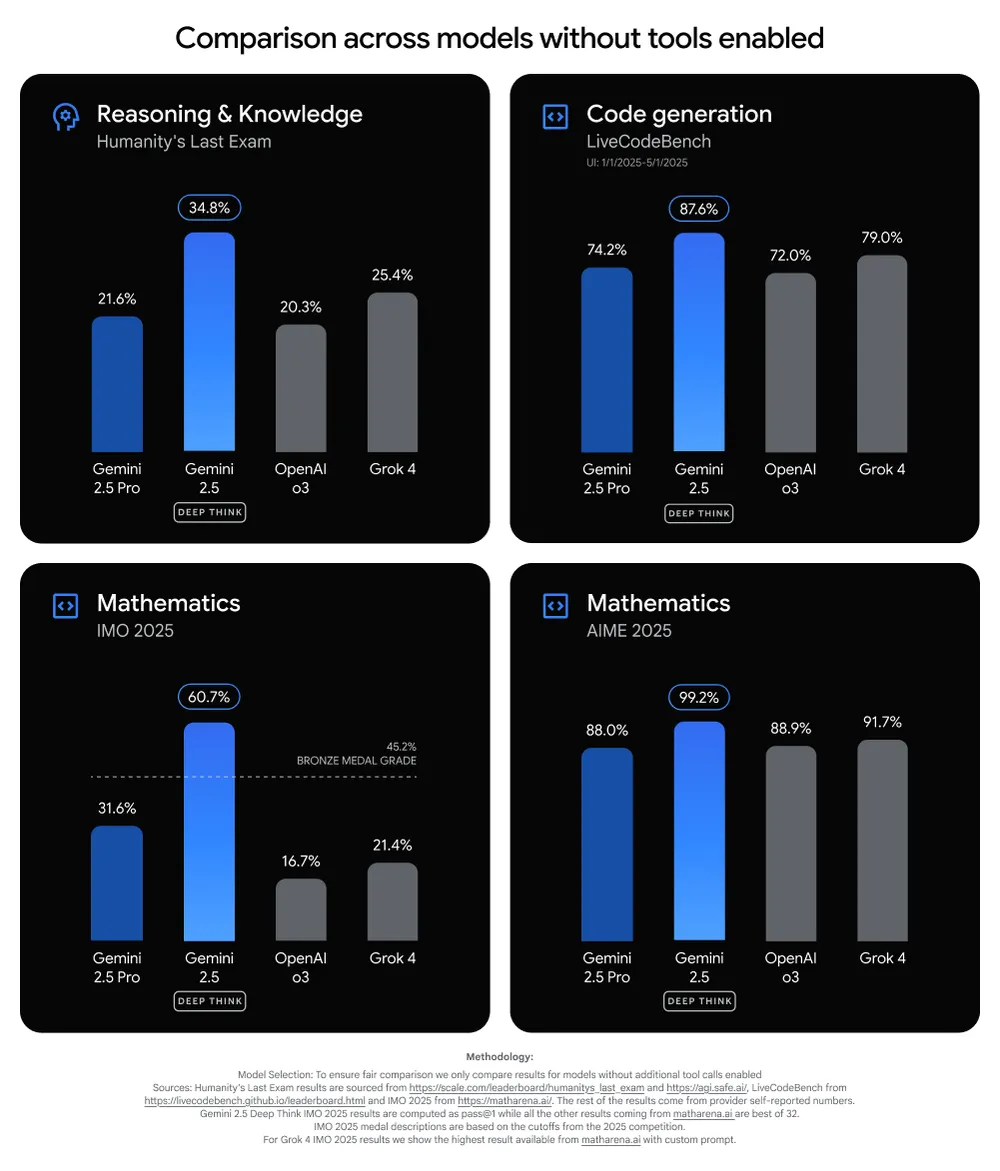

Gemini 2.5 Deep Think (August 1)

First to take the stage was Gemini 2.5 Deep Think, a model built on the same research that earned Google a gold-medal-level score at the International Mathematical Olympiad (IMO). This isn't just another update but a demonstration of a new approach to problem-solving. The key idea is "parallel thinking": instead of following a single line of reasoning (chain of thought), the model explores multiple hypotheses at once, fanning out possible solutions and then choosing the best one. This lets it tackle complex creative and logical tasks at an entirely different level.
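
Google has not detailed how parallel thinking works internally. A well-known, related technique is self-consistency: sample several independent reasoning paths and keep the answer most of them agree on. The toy below illustrates only that voting idea; `reasoning_path` is a hypothetical stand-in for a sampled chain of thought (here, a deliberately flaky computation of 17 * 24), and real systems may score or refine candidates instead of simply voting.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def reasoning_path(seed: int) -> int:
    """Hypothetical stand-in for one sampled chain of thought that computes 17 * 24.
    Every third path makes the same arithmetic slip, mimicking noisy reasoning."""
    answer = 17 * 24
    return answer + 10 if seed % 3 == 2 else answer

def parallel_think(n_paths: int):
    """Explore n_paths reasoning paths concurrently, then keep the majority answer."""
    with ThreadPoolExecutor(max_workers=n_paths) as pool:
        answers = list(pool.map(reasoning_path, range(n_paths)))
    votes = Counter(answers)
    best, _ = votes.most_common(1)[0]
    return best, votes

best, votes = parallel_think(6)
print(best, dict(votes))  # 408 {408: 4, 418: 2}
```

A single path has a one-in-three chance of returning the wrong answer here, but the majority over six paths recovers the right one; the price is running several paths per query, which also hints at why such a model is expensive to serve.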

Judging by the discussions, the community was genuinely impressed by its state-of-the-art (SOTA) results on the most demanding benchmarks. It seemed a new heavyweight champion had arrived. But then Google announced the access model, and excitement turned to anger. The model was made available only through the Google AI Ultra subscription at $250 per month, with a humiliating limit of 10 queries per day. The community immediately dubbed this "daylight robbery" and a "scam." As a result, the most powerful reasoning tool of the moment became virtually inaccessible to researchers and enthusiasts, remaining an expensive toy for the elite.

Genie 3 (August 5)

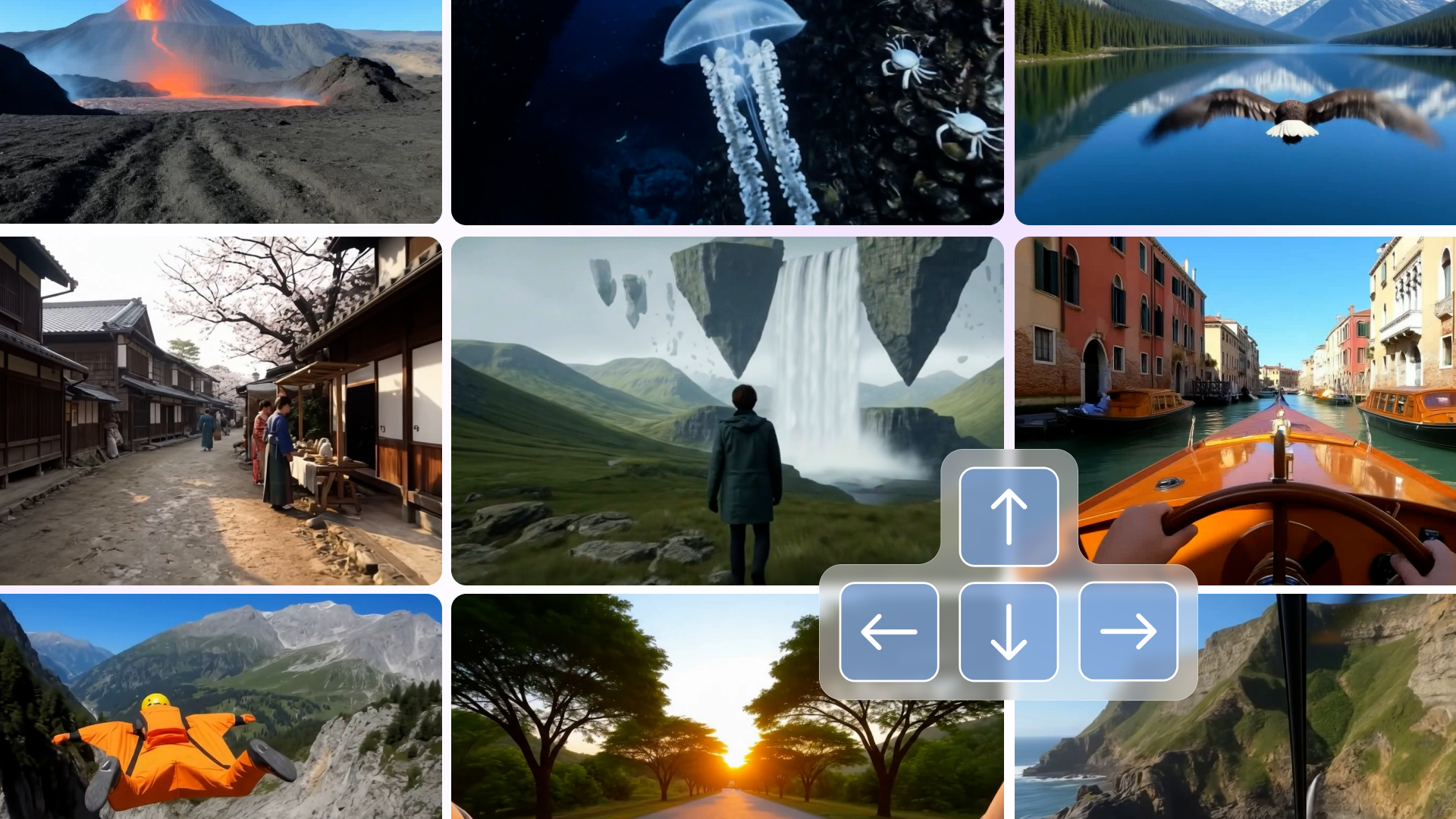

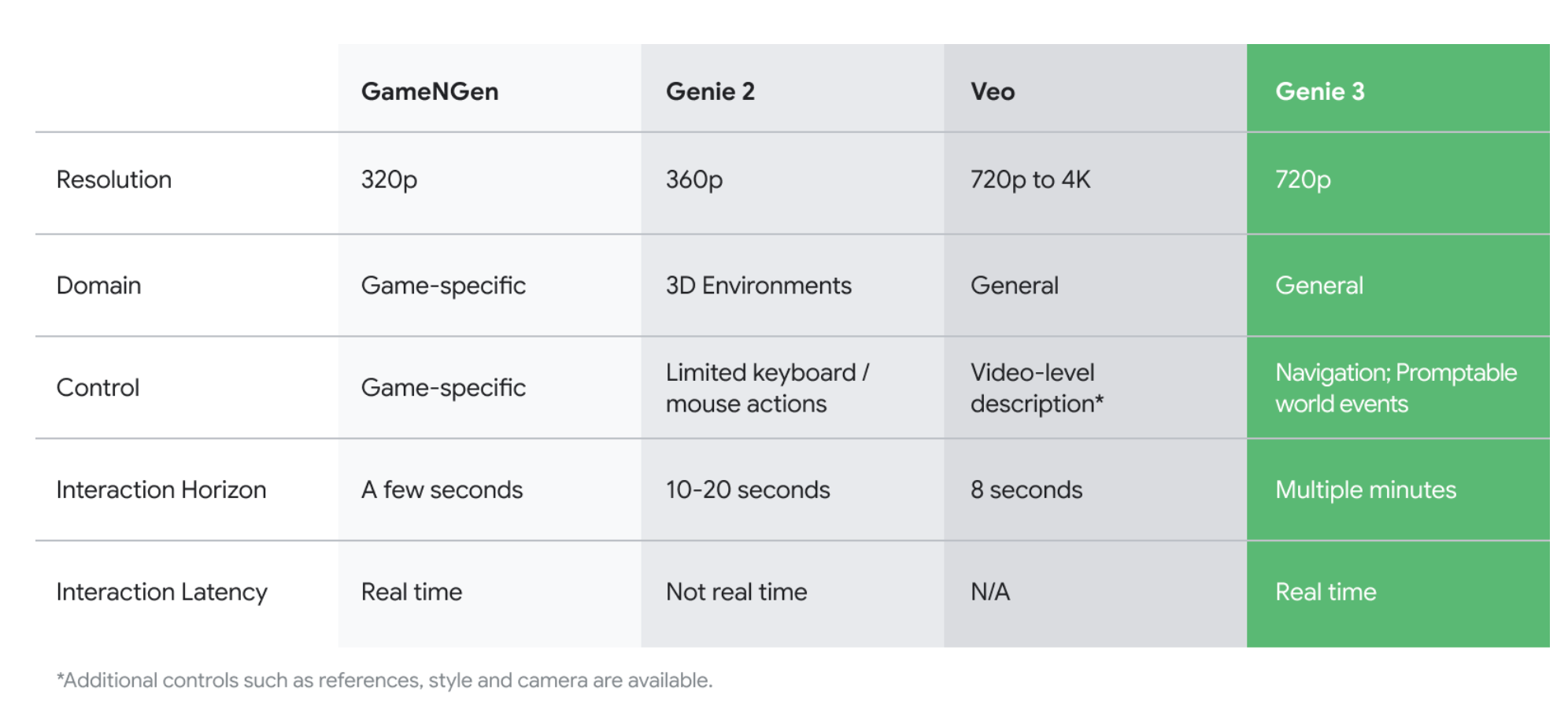

If Deep Think demonstrated the power of the machine's "inner world," Genie 3, announced on August 5, demonstrated its ability to create external worlds. DeepMind introduced a groundbreaking "world model" that generates interactive, navigable 3D environments in real time from a text or graphical prompt. And this isn't just video generation: it's a living environment rendered at 24 frames per second at 720p resolution.
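
A quick calculation shows how tight "real time" is at these settings. The sketch assumes 720p means 1280×720 frames and uses three minutes as a stand-in for "several minutes" of consistency; it says nothing about how Genie 3 meets this budget, only what the budget is.

```python
FPS = 24
WIDTH, HEIGHT = 1280, 720  # assumed 720p frame size

frame_budget_ms = 1000 / FPS                # time available to produce each frame
pixels_per_second = WIDTH * HEIGHT * FPS    # raw output rate the model must sustain

def frames_to_keep_consistent(minutes: float) -> int:
    """Number of consecutive frames that must agree with each other over `minutes`."""
    return round(minutes * 60 * FPS)

print(f"time budget per frame: {frame_budget_ms:.1f} ms")     # 41.7 ms
print(f"pixels generated per second: {pixels_per_second:,}")  # 22,118,400
print(f"frames in 3 minutes: {frames_to_keep_consistent(3)}")  # 4320
```

In other words, the model has roughly 42 ms per frame, and "consistency for several minutes" means thousands of consecutive frames that must not contradict one another.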

A key achievement was the so-called "world memory." This technology ensures environmental consistency for several minutes, solving the main problem of generative video models, where the world "falls apart" within seconds. The progress compared to the previous version, Genie 2, was described by the community with one word—"insane."
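
DeepMind has not published the mechanism behind world memory in detail, so the toy below does not model Genie 3. It only illustrates the property being claimed, revisit consistency: a hypothetical `MemoryWorld` remembers what it generated at each location, while a hypothetical `MemorylessWorld` regenerates it on every visit, which is roughly the "falls apart" failure mode described above.

```python
import random

TERRAIN = ["tree", "rock", "house", "river"]

class MemorylessWorld:
    """Regenerates whatever is at a location on every visit (the 'falls apart' mode)."""
    def __init__(self, seed: int = 0):
        self.rng = random.Random(seed)

    def observe(self, pos):
        return self.rng.choice(TERRAIN)

class MemoryWorld:
    """Remembers what it generated for each location and replays it on revisits."""
    def __init__(self, seed: int = 0):
        self.rng = random.Random(seed)
        self.memory = {}

    def observe(self, pos):
        if pos not in self.memory:
            self.memory[pos] = self.rng.choice(TERRAIN)
        return self.memory[pos]

def is_consistent(world, path) -> bool:
    """True if every revisited position looks the same as on its first visit."""
    first_seen = {}
    for pos in path:
        seen = world.observe(pos)
        if first_seen.setdefault(pos, seen) != seen:
            return False
    return True

# Walk away from the start and come back, twice.
path = [(0, 0), (0, 1), (0, 2), (0, 1), (0, 0)] * 2
print(is_consistent(MemoryWorld(), path))      # True
print(is_consistent(MemorylessWorld(), path))
```

The memoryless world will almost certainly fail the check, since each revisit is a fresh random draw. Caching by coordinates is trivial in a toy grid; the hard part for a model that reportedly generates the world frame by frame, without an explicit 3D map, is preserving this property anyway.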

The excitement was immense. Genie 3 was immediately dubbed "game engine 2.0," with the potential to disrupt established engines such as Unreal and Unity. Active discussions began about its potential for VR, metaverses, and procedural content generation. But perhaps what impressed people most was one simple detail from the demonstration: the ability to "look down and see one's feet" inside the simulation. This small touch showed, better than any benchmark, how deep the model's understanding of 3D space and of its own place within it had become. This is no longer just image generation; it's a simulation of being.

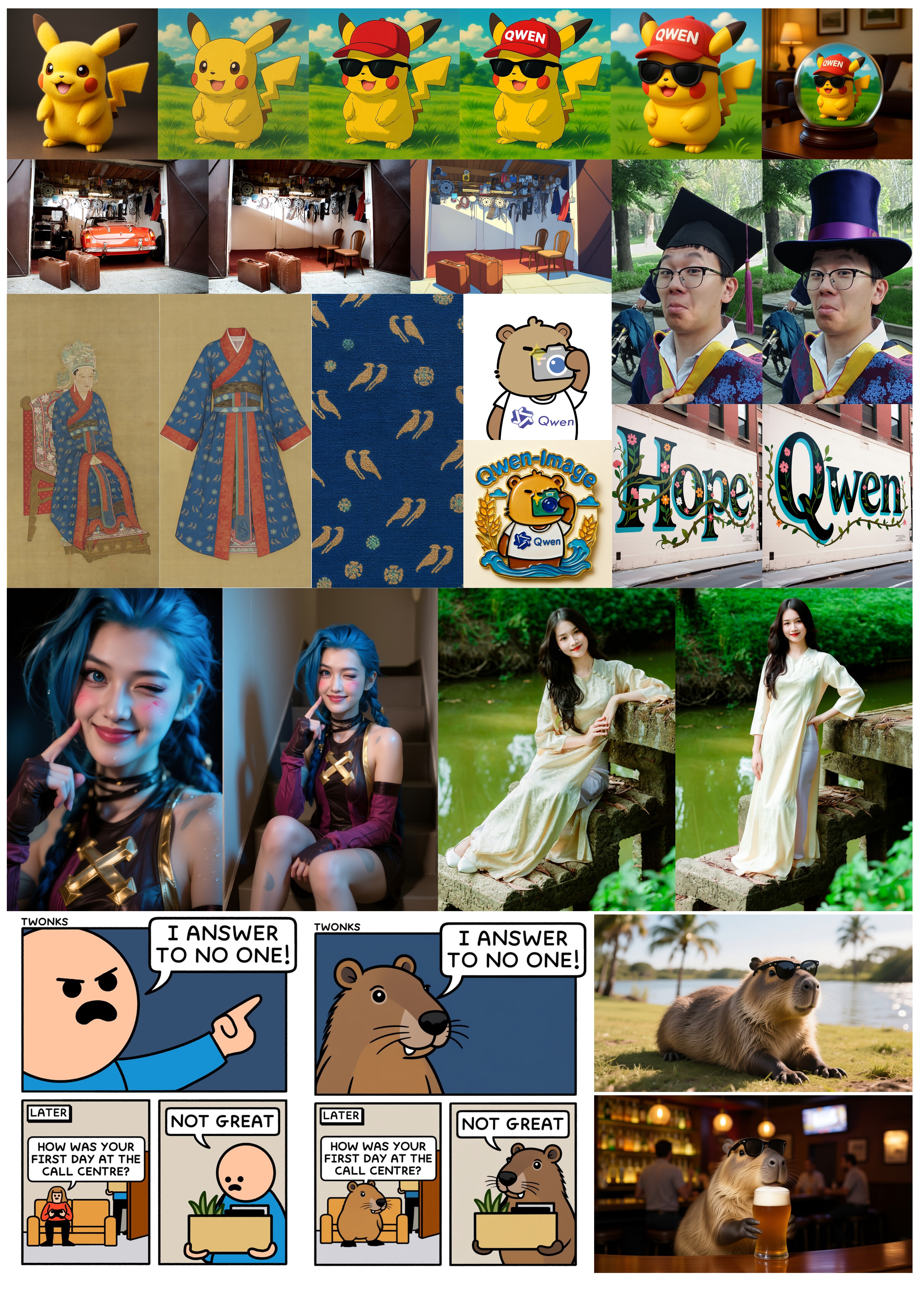

Alibaba Qwen-Image: A Revolution in Text Generation on Images (August 4)

While Western giants competed in the areas of logical reasoning and world simulation, a player stepped out of the wings and quietly, elegantly solved one of generative AI's most frustrating and long-standing problems. Historically, diffusion models, masters of texture and form, have been notoriously bad at typography. Attempts to make them render legible text usually ended in unreadable squiggles resembling alien script. The problem seemed so fundamental that many had simply resigned themselves to it. Then, on August 4, Alibaba showed that it was too early to give up hope.

They released Qwen-Image—a 20-billion parameter multimodal model with an MMDiT architecture and, most importantly, with open weights. And it simply stunned the community. The main achievement was its impeccable, state-of-the-art text rendering on images, especially in Chinese and English. The model doesn't just "paste" letters; it organically integrates them into the scene, accounting for perspective, lighting, and style. In addition, Qwen-Image demonstrated impressive image editing capabilities that many users compared to the level of GPT-4o.
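
MMDiT (the Multimodal Diffusion Transformer introduced with Stable Diffusion 3) keeps separate weights for text and image tokens but lets both streams attend to each other in a single joint attention. The sketch below shows only that joint-attention step, with a single head, no timestep conditioning, no MLP, and toy identity projections; it is a simplification of the general MMDiT idea, not Qwen-Image's actual block, and all function names are hypothetical.

```python
import math

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

def project(tokens, weights):
    """Apply one modality's own Q, K, V projection matrices to its tokens."""
    return tuple([matvec(weights[name], t) for t in tokens] for name in ("q", "k", "v"))

def joint_attention(text_tokens, image_tokens, text_w, image_w):
    tq, tk, tv = project(text_tokens, text_w)    # text-specific weights
    iq, ik, iv = project(image_tokens, image_w)  # image-specific weights
    # Concatenate both sequences so every token attends to every other token.
    q, k, v = tq + iq, tk + ik, tv + iv
    scale = 1 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        attn = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[c] for w, vj in zip(attn, v)) for c in range(len(v[0]))])
    # Split the result back into per-modality streams.
    return out[:len(text_tokens)], out[len(text_tokens):]

# Identity projections keep the toy readable; in a trained model text_w and image_w differ.
I2 = [[1.0, 0.0], [0.0, 1.0]]
w = {"q": I2, "k": I2, "v": I2}
_, img_a = joint_attention([[1.0, 0.0]], [[0.0, 1.0]], w, w)
_, img_b = joint_attention([[5.0, 0.0]], [[0.0, 1.0]], w, w)
print(img_a != img_b)  # True: the image token's output depends on the text tokens
```

Changing only the text token changes the image token's output, which is the whole point of the joint design: the prompt is not a one-way conditioning signal but part of the same attention pool.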

The community's reaction was close to shock. Judging by hundreds of comments, people were particularly amazed by the quality of text rendering. This was the "holy grail" they had sought for so long. One commentator shrewdly remarked: "We were so focused on Western models that we missed how the East solved a problem we considered unsolvable."

But it wasn't just the quality that impressed. Alibaba accompanied the release with a highly detailed 46-page technical report. Against the backdrop of typical marketing posts from Western labs, which obscure all details behind the phrase "for security reasons," this level of openness was perceived as a breath of fresh air. It was not just a release but a full-fledged contribution to the scientific community, demonstrating the team's confidence and maturity.

However, this revolution comes at a price, and its name is VRAM. Running the model in FP16 requires 40 to 44 GB of VRAM (20 billion parameters at 2 bytes each already take about 40 GB for the weights alone), which puts it out of reach for most enthusiasts with consumer graphics cards. But the community wouldn't be itself if it didn't rise to the challenge: work immediately began on quantized GGUF versions to squeeze Qwen-Image into more accessible hardware, and enthusiasts quickly integrated the model into popular tools such as ComfyUI. This release showed that Chinese models aren't just catching up; in some critically important and visually impactful niches, they are already pulling ahead and setting new standards for the entire industry.
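
GGUF supports many quantization types; Q8_0 is one of the simplest and shows the basic trick. Weights are split into blocks of 32, each block stores one scale (as fp16 in the real format) plus 32 signed 8-bit integers, and the largest magnitude in the block maps to ±127. The sketch below is a simplified Python rendering of that scheme (it keeps the scale as a Python float, and Python's `round` rounds halves to even, unlike the C reference); the 20B figure is the Qwen-Image parameter count from the text.

```python
BLOCK_SIZE = 32  # Q8_0 quantizes weights in blocks of 32

def quantize_q8_0(weights):
    """Return a list of (scale, int8 values) blocks, Q8_0-style."""
    blocks = []
    for start in range(0, len(weights), BLOCK_SIZE):
        chunk = weights[start:start + BLOCK_SIZE]
        amax = max(abs(w) for w in chunk)
        scale = amax / 127 if amax > 0 else 0.0  # largest magnitude maps to +/-127
        qs = [round(w / scale) if scale else 0 for w in chunk]
        blocks.append((scale, qs))
    return blocks

def dequantize_q8_0(blocks):
    """Reconstruct approximate weights: each int8 value times its block's scale."""
    return [scale * q for scale, qs in blocks for q in qs]

# Storage cost: 32 one-byte values plus one 2-byte fp16 scale per block.
BITS_PER_WEIGHT = (BLOCK_SIZE * 8 + 16) / BLOCK_SIZE  # 8.5
print(f"Qwen-Image at Q8_0: ~{20e9 * BITS_PER_WEIGHT / 8 / 1e9:.2f} GB vs ~40 GB in FP16")
```

Q8_0 roughly halves the weight footprint (about 21 GB); lower-bit GGUF types follow the same block-plus-scale pattern with fewer bits per value, trading more precision for a smaller file.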

New Power Dynamics and Key Trends

What's the bottom line? In my opinion, three main takeaways emerge from these events, and they will define the industry's development in the near future.

1. "Openness" is a new strategy, not an ideology. The GPT-OSS release showed that even the most closed players are now forced to use open-source to compete for the minds and loyalty of developers.

2. The focus is shifting from "talking heads" to "digital actors." Models are increasingly becoming not the end product, but the core processor for systems that interact with the real world (agents) or simulations (like Genie 3).

3. Progress no longer equals "pure" scaling. The era when one could simply "add zeros" and achieve a breakthrough seems to be ending. The reaction to GPT-5 suggests that the advantage is now shifting towards architecture (MoE, routers) and data quality.

We are watching the race for AGI turn from a simple arm-wrestling match into a complex game of chess, where victory goes not to whoever has the most hardware but to whoever can see several moves ahead.